Modern AI development requires different patterns than traditional software. By combining familiar engineering practices with new approaches for handling the probabilistic nature of AI, teams can successfully scale their AI products into production.

In the middle of the journey of my life I came to myself within a dark wood where the straight way was lost. - Dante Alighieri

With AI, the well-worn best practices and established patterns of traditional software development can seem to disappear. But this uncertainty isn't a sign we're lost; it's a signal that we're pioneering new ground. While the technology is revolutionary, we're discovering that successful AI implementation relies on familiar engineering foundations. Through my work in developer relations at Dagster, I've observed how teams navigate these seemingly dark woods and noticed some illuminating patterns.

Understand the Technology

When it comes to artificial intelligence, the hype and marketing machines behind it can make it difficult to get a grounded view of its capabilities and limitations. Artificial intelligence is a probabilistic process, not a deterministic one. This is the most important thing to understand when you build with these tools.

A deterministic system always produces the same output for the same input. Traditional software is predictable and rule-based: if you enter "2 + 2" into a calculator, you'll get "4" every time. A deterministic process follows a fixed path from input to output.

But AI systems work differently. They deal in probabilities, patterns, and distributions rather than fixed rules. When an AI model generates text or makes a prediction, it makes an educated guess based on patterns it has learned, selecting from a distribution of possible outputs. Different models also have different levels of randomness, and that variance is part of what makes them useful.

This is why:

- You can ask ChatGPT the same question twice and get different answers.

- Image generation models create slightly different images from the same prompt.

- Speech recognition might interpret the same audio differently under different conditions.
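The contrast between a fixed path and a draw from a distribution can be sketched in a few lines of Python. This is a toy illustration, not how any particular model is implemented: the candidate words, their scores, and the `sample_token` helper are all made up for the example.

```python
import math
import random


def calculator(a: int, b: int) -> int:
    """Deterministic: the same inputs always produce the same output."""
    return a + b


def sample_token(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sample one word from a softmax over hypothetical model scores."""
    tokens = list(scores)
    # A higher temperature flattens the distribution; a lower one sharpens it.
    weights = [math.exp(scores[t] / temperature) for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores a model might assign to candidate next words.
scores = {"blue": 2.0, "clear": 1.5, "cloudy": 1.0}
rng = random.Random()  # unseeded on purpose, so repeated runs may differ

print(calculator(2, 2))  # always 4
print([sample_token(scores, temperature=1.0, rng=rng) for _ in range(5)])
```

Running the script twice will usually print different word lists, while the calculator line never changes. Lowering `temperature` toward zero makes the highest-scoring word win almost every time, which is one way systems trade variety for consistency.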

This probabilistic foundation reshapes how we approach building AI applications. Rather than seeking absolute correctness, we must think about confidence levels. Traditional testing transforms into a statistical endeavor, where success isn't binary but measured across distributions of outcomes. This shift demands a more nuanced system architecture that embraces variability and gracefully handles ambiguity as a feature rather than an edge case. When designing these systems, we need to anticipate and account for uncertainty, building safeguards and fallbacks into our core logic.
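One way to picture testing as a statistical endeavor is a check that runs the same input many times and asserts on the pass rate instead of on a single answer. The sketch below uses a stand-in `flaky_classifier` in place of a real model call; the 90% accuracy and the 85% threshold are assumptions chosen for illustration.

```python
import random


def flaky_classifier(text: str, rng: random.Random) -> str:
    """Stand-in for a model call: correct about 90% of the time (assumed rate)."""
    correct = "positive" if "great" in text else "negative"
    wrong = "negative" if correct == "positive" else "positive"
    return correct if rng.random() < 0.9 else wrong


def pass_rate(text: str, expected: str, trials: int, rng: random.Random) -> float:
    """Run the same input many times and return the fraction of correct outputs."""
    hits = sum(flaky_classifier(text, rng) == expected for _ in range(trials))
    return hits / trials


rng = random.Random(0)  # seeded so the check is reproducible
rate = pass_rate("this product is great", "positive", trials=1000, rng=rng)

# The test asserts on a distribution of outcomes, not on a single call.
assert rate >= 0.85, f"pass rate {rate:.2%} fell below the 85% threshold"
print(f"pass rate: {rate:.2%}")
```

The threshold is a product decision as much as an engineering one: it encodes how often a wrong answer is acceptable for this particular use case.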

The Right Tool for the Job

When automating a process, you need to consider where the bottlenecks are. For AI use cases, ask whether the bottleneck is “intelligence”: making decisions, recalling information, or synthesizing it.

These functions lend themselves well to AI applications:

- Support: Generating conversations to help guide users through issues.

- Transcribing/summarizing: Transcription and information synthesis are the bread-and-butter applications for language models.

- Troubleshooting technical problems: Processing errors often require specific knowledge that an LLM can easily retrieve using RAG.

The following functions are still best left to people:

- Design: AI-generated images have a certain look that gives off a cheap, half-baked aesthetic.

- Software Architecture: System architecture requires the synthesis of stakeholder requirements, technical capabilities and limitations, and the existing codebase.

- Anything New: AI is heavily biased towards older tools that have large amounts of training data. If you're at the cutting edge, you're better served by good old-fashioned natural intelligence.

Agents represent the next frontier in AI: workers that can autonomously execute complex, multi-step tasks that traditionally require human oversight. While a standard AI model might answer a single question, an agent can break down a complex goal into subtasks, execute them sequentially, and adjust its strategy based on intermediate results.

This power comes with significant challenges. Each step in an agent's chain of actions introduces new opportunities for errors or drift from the intended outcome. Since agents operate probabilistically, like all AI systems, these uncertainties compound with each decision. Managing this complexity requires robust orchestration and monitoring. You need to track not only the final output but also the entire decision chain that led there. Getting these right is among the main impediments to so-called “Artificial General Intelligence”.
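A rough back-of-the-envelope calculation shows how quickly per-step uncertainty compounds. The sketch assumes every step succeeds independently with the same probability, which real agents will not satisfy exactly; the 95% figure is an illustrative assumption, not a measured one.

```python
def chain_success_probability(step_success: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent steps."""
    return step_success ** steps


for steps in (1, 5, 10, 20):
    p = chain_success_probability(0.95, steps)
    print(f"{steps:>2} steps at 95% each -> {p:.1%} end to end")
```

Under these assumptions, a ten-step chain finishes cleanly only about 60% of the time, and a twenty-step chain about 36% of the time. That is why visibility into each intermediate step matters more as chains get longer.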

We recently explored these challenges in a deep dive with Olivier Dupuis, who demonstrated how to orchestrate research agents using Dagster and Hex. His implementation showed how proper orchestration can make agent systems more reliable and debuggable by providing visibility into each step while maintaining the clear lineage of decisions and data transformations.

Use Case Example: Building a RAG With Dagster

While AI presents many exciting possibilities, one of the most practical and immediately valuable implementations is using it to enhance knowledge within organizations. At Dagster, we've implemented this with Scout, our RAG-powered assistant that helps users navigate Dagster-related questions, troubleshoot code, and resolve errors. This service exemplifies how AI can be powerful and practical when given the proper context.

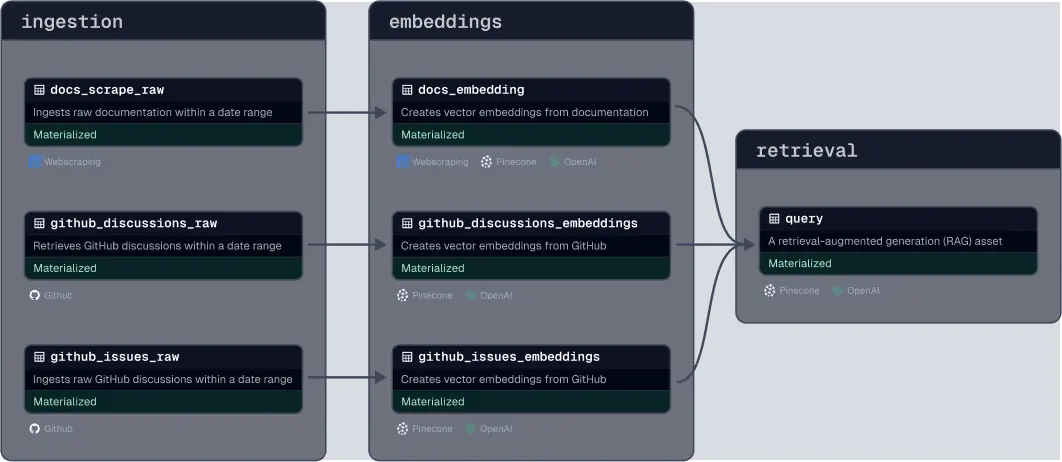

I recently built a simplified version of this system to demonstrate how Dagster's abstractions make such implementations surprisingly straightforward:

- Resources: Managing connections to Pinecone (a vector database), GitHub, and web scraping as reusable building blocks.

- Partitions: Ingested data is partitioned weekly to better manage embedding context limits and improve performance.

- The Graph: We manage the output from the process, not the process itself. This simplifies debugging and understanding.

- OpenAI integration: Shows credit and token usage as asset metadata, enhancing observability.

- Declarative Automation: Downstream assets automatically keep themselves up to date with one line of code.
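To make the weekly partitioning idea concrete, here is a minimal sketch in plain Python. It deliberately does not use Dagster's partition API; the `week_key` and `partition_by_week` helpers, the Monday week boundary, and the sample records are all assumptions made for illustration.

```python
from collections import defaultdict
from datetime import date, timedelta


def week_key(day: date) -> str:
    """Map a date to the Monday that starts its week, as an ISO string."""
    monday = day - timedelta(days=day.weekday())
    return monday.isoformat()


def partition_by_week(docs: list[tuple[date, str]]) -> dict[str, list[str]]:
    """Group (date, text) records so each week can be embedded as its own batch."""
    batches: dict[str, list[str]] = defaultdict(list)
    for day, text in docs:
        batches[week_key(day)].append(text)
    return dict(batches)


# Hypothetical ingested records, e.g. GitHub issues or scraped docs pages.
docs = [
    (date(2025, 1, 6), "issue: sensor not firing"),
    (date(2025, 1, 8), "discussion: partition backfills"),
    (date(2025, 1, 14), "docs: asset checks"),
]

for key, texts in partition_by_week(docs).items():
    print(key, len(texts))
```

Each weekly batch stays small enough to embed in one pass, and a failed week can be reprocessed on its own without touching the rest of the history.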

You can watch the full video of me building it end-to-end here:

AI Development with Dagster

At its core, AI engineering is data engineering, and data engineering is software engineering. What's particularly interesting is that modern AI engineering can often be more straightforward than traditional ML engineering, as cloud-based models and services handle much of the complex computational heavy lifting.

AI's effectiveness ultimately depends on context, which is why approaches like RAG have become so powerful. These systems recognize that context equals data, allowing organizations to leverage domain-specific knowledge while benefiting from general-purpose AI models. The challenge shifts from model development to data management and engineering, focusing on effectively providing AI systems with the proper context at the right time.
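The "context equals data" idea can be sketched as a tiny retrieval step: rank stored snippets by similarity to the question, then place the best matches in the prompt. Everything here is a toy under stated assumptions. The three-dimensional vectors stand in for real embeddings, the in-memory list stands in for a vector database, and `retrieve` and `build_prompt` are hypothetical helpers, not part of any library.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], index: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the k snippets whose vectors are most similar to the query."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Put the retrieved snippets in front of the question for the model."""
    joined = "\n".join(f"- {snippet}" for snippet in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Made-up vectors; a real system would get these from an embedding model.
index = [
    ("Partitions split an asset into slices.", [0.9, 0.1, 0.0]),
    ("Resources manage external connections.", [0.1, 0.9, 0.0]),
    ("Asset checks validate data quality.", [0.0, 0.2, 0.9]),
]
query_vec = [0.8, 0.2, 0.1]  # pretend embedding of the question below

context = retrieve(query_vec, index, k=2)
print(build_prompt("How do I process data one week at a time?", context))
```

The model itself never changes in this flow. Answer quality depends on what lands in `context`, which is why the hard work shifts to keeping the underlying data fresh and well organized.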

Current state of AI pic.twitter.com/6UREsbqztP (Igor Momentum, @igormomentum, January 18, 2025)

You can put yourself pretty far ahead of the curve by doing proper orchestration.

This is where Dagster's abstractions become particularly valuable for building production-grade AI implementations. By providing robust frameworks for ETL processes, model training, inference, versioning, testing, and local development, Dagster transforms complex AI workflows into manageable software engineering tasks. The platform's approach to managing model versions, datasets, and embeddings reflects an understanding of what production AI systems need.

Dagster's ecosystem brings additional capabilities crucial for AI systems. Features like insights, catalog management, partitions, resources, and asset checks provide the infrastructure needed for reliable AI systems. The platform's emphasis on lineage and graph-based thinking helps teams focus on outputs while maintaining visibility into the entire data pipeline. Integrations with services like OpenAI, Gemini, Anthropic, Chroma, and Weaviate further streamline development. These abstractions don't just make AI development easier; they make it more reliable, maintainable, and production-ready.

Get started on your AI applications with Dagster today.