October 23, 2025

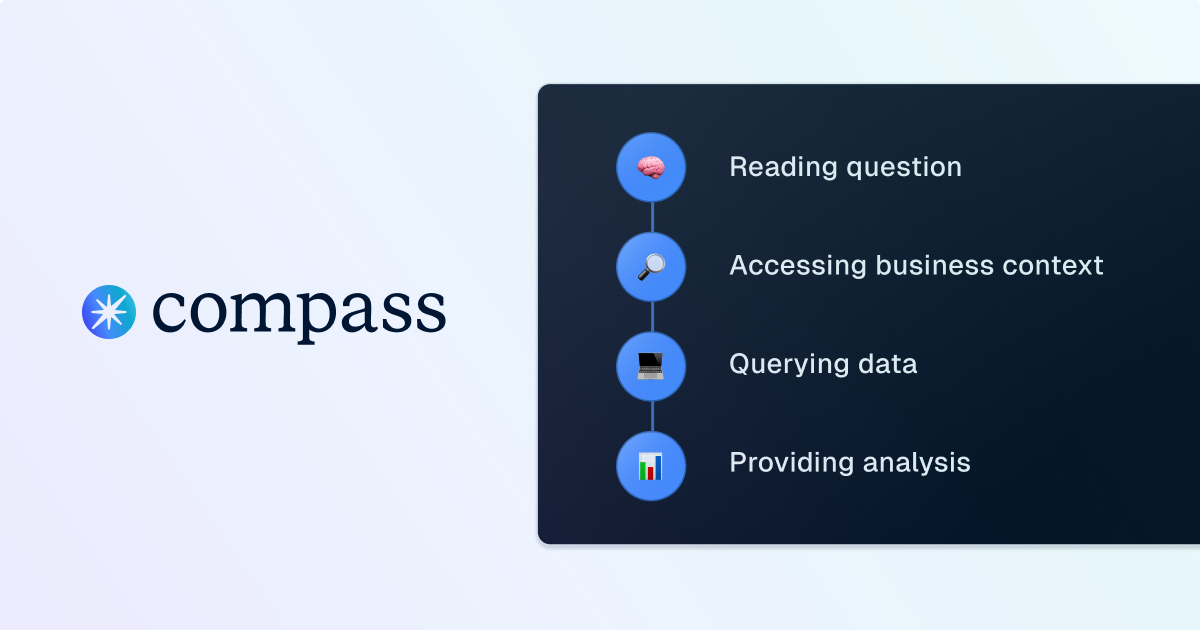

How Compass Turns Questions Into Queries

Go behind the scenes of Compass, Dagster’s analyst copilot, to see how it transforms plain-language questions into precise, optimized SQL queries. Learn how each step of the query-generation process helps analysts move faster and stay focused on insights.
