Ahead of Launch Week, we are proud to be rolling out some exciting new capabilities.

In the run-up to our Fall Launch Week, our 1.5 release brings some major new enhancements to Dagster. So, with a nod to Whitney Houston, here is what is in store with this release, along with a recap of major enhancements in the seventeen sub-releases and 877 commits between 1.4.0 and 1.5.0.

- Embed data quality steps in your data pipeline with Dagster Asset Checks

- Expand Dagster's span-of-control with Dagster Pipes

- Monitor Cloud costs with Dagster Insights

- Get your team ramped up quickly with Dagster University

- Check out Dagster UI performance improvements

- And thanks to all the community contributors since 1.4

Looking for a more granular list of enhancements?

Check out the full Dagster Changelog.

Data Quality with Dagster Asset Checks:

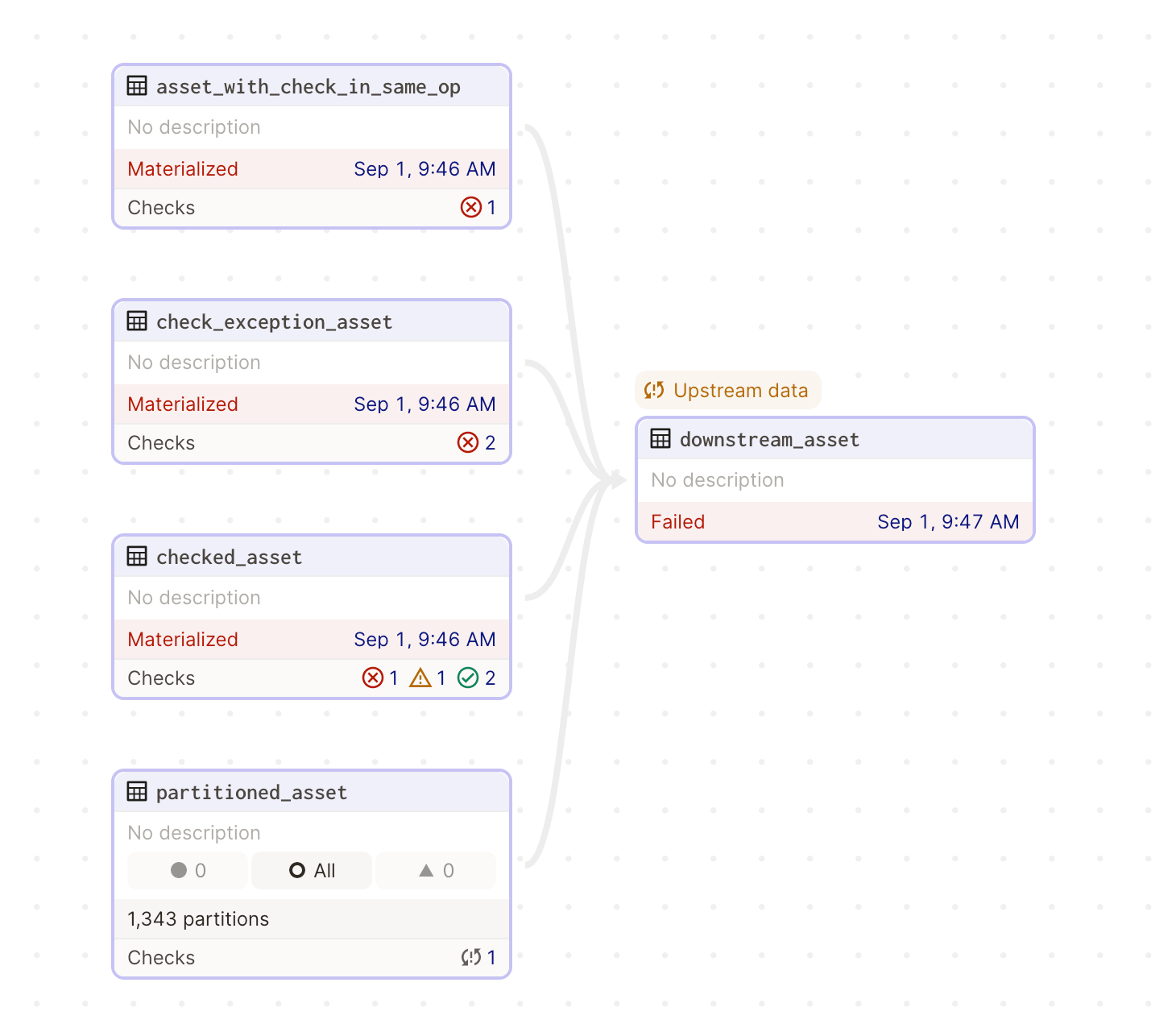

How will I know if my data meets quality standards? Well, now you can find out directly from the orchestration layer. With Dagster Asset Checks (introduced as experimental in Dagster 1.4.12), you no longer need to juggle different solutions to define and run data quality checks. You can now drop in a checkpoint at any stage of your data pipeline, run an asset through a check that you define, and then build orchestration logic based on the check's outcome.

With Dagster’s asset-centric framework, the results of these checks are now surfaced in the UI:

Screengrab of the Dagster UI, demonstrating an asset graph with successful, failed, and unexecuted asset checks.

For more details on Dagster’s Asset Checks, see the docs. Note that Asset Checks remain “experimental” until we have had the chance to field test this feature some more.

Sandy Ryza will be presenting on Asset Checks on day two of Launch Week (Oct 9th).

Dagster Pipes

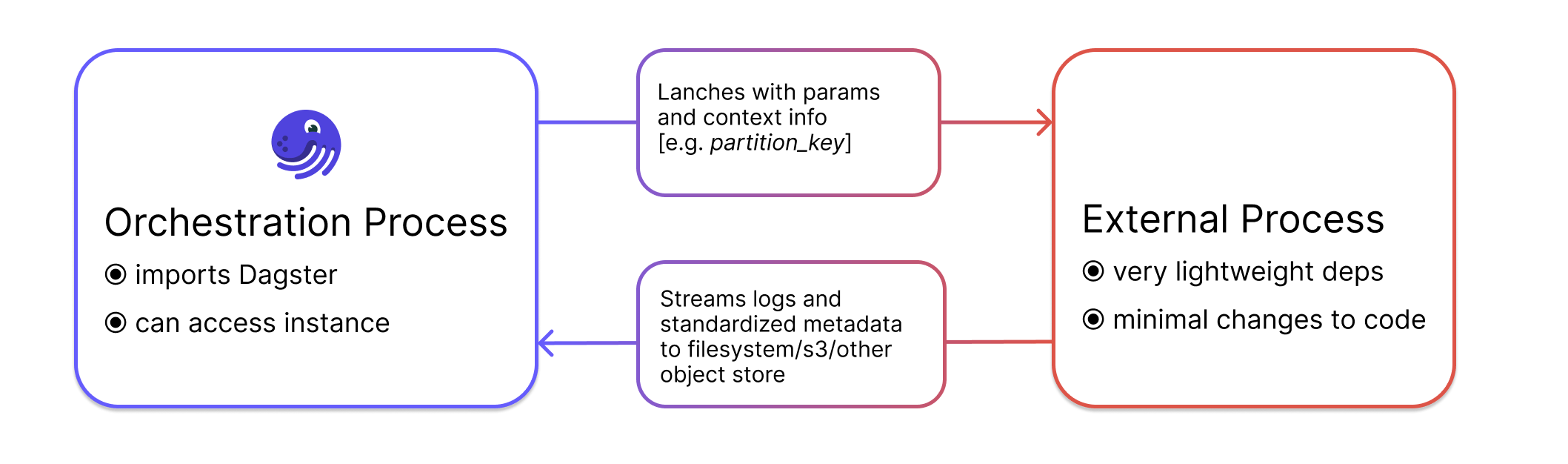

Dagster has traditionally integrated business logic and orchestration, and for simple data pipelines where data fits in memory and is directly processed within the orchestrator, this works well.

However, this approach falls flat in a number of important contexts:

- When dealing with pre-existing code and Python environments.

- When writing business logic in remote or hosted execution environments, such as Spark.

- When dealing with business logic written in other programming languages.

Dagster 1.5 introduces a new protocol designed to address these situations. We call it Pipes - short for "Protocol for Inter-Process Execution with Streaming logs and metadata."

With Pipes, Dagster becomes the ubiquitous, composable data control plane for all data teams in the organization.

With Dagster Pipes, you can:

- Incorporate existing code into Dagster without huge refactors

- Onboard stakeholder teams onto Dagster incrementally

- Run code in external environments and stream log and structured metadata back to Dagster

- Separate orchestration and business logic environments

- Use languages other than Python with Dagster

- Forget about chasing down dependency conflicts, as Dagster Pipes is dependency-free
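To make the idea of streaming logs and structured metadata from an external process more concrete, here is a toy sketch that uses only the Python standard library. It is not the Dagster Pipes API or its wire format: the message shape (`kind` and `payload`), the `EXTERNAL_SCRIPT` contents, and the `run_external` helper are all assumptions invented for illustration.

```python
import json
import subprocess
import sys
import textwrap

# Hypothetical external job. It has no dependency on the orchestrator and
# reports progress by printing one JSON message per line to stdout.
EXTERNAL_SCRIPT = textwrap.dedent(
    """
    import json

    def emit(kind, payload):
        print(json.dumps({"kind": kind, "payload": payload}), flush=True)

    emit("log", "starting external job")
    rows = list(range(5))
    emit("metadata", {"row_count": len(rows)})
    emit("log", "done")
    """
)


def run_external(script):
    """Run the script in a separate process and collect messages as they stream in."""
    messages = []
    with subprocess.Popen(
        [sys.executable, "-c", script],
        stdout=subprocess.PIPE,
        text=True,
    ) as proc:
        # Reading line by line lets the parent react before the child exits.
        for line in proc.stdout:
            messages.append(json.loads(line))
    if proc.returncode != 0:
        raise RuntimeError(f"external job failed with exit code {proc.returncode}")
    return messages


if __name__ == "__main__":
    for message in run_external(EXTERNAL_SCRIPT):
        print(message["kind"], message["payload"])
```

The point of the sketch is the separation of concerns: the external code only needs a way to emit messages, while the orchestrating process decides what to do with the logs and metadata it receives.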

A lot more context and details on dagster-pipes can be found in the GitHub discussion.

Nick Schrock will be presenting on Dagster Pipes on the final day of Launch Week (Oct 13th).

Monitor Cloud Costs with Dagster Insights (Dagster Cloud)

We have released an experimental dagster_cloud.dagster_insights module that contains utilities for capturing and submitting external metrics about data operations to Dagster Cloud via an API. Dagster Cloud Insights provides improved visibility into usage and cost metrics such as run duration and Snowflake credits in the Cloud UI.

Jarred Colli and Ben Pankow will be discussing and demoing Dagster Insights on day three of Dagster Launch Week (Oct 10th).

Dagster Docs and Dagster University

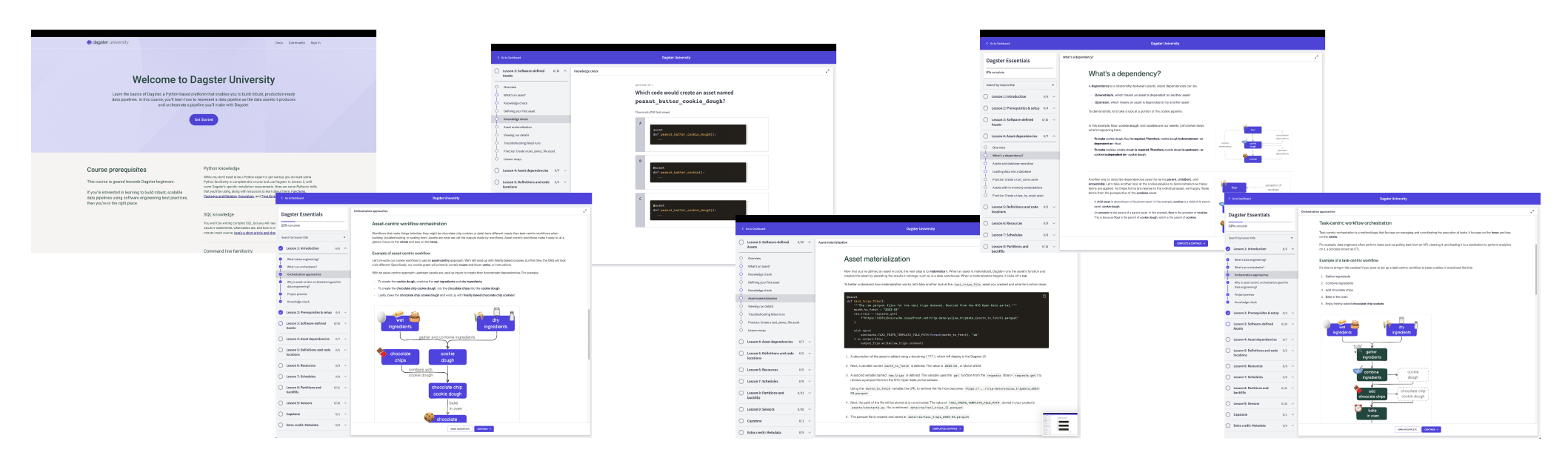

A few months ago, Dagster CEO Pete Hunt talked about the learning curve for Dagster in his Masterplan blog post, saying:

> Dagster has a reputation for being extremely powerful. However, the learning curve is still too steep.

To address this, we’ve created Dagster University.

The University’s first course, Dagster Essentials, is geared towards creating a solid foundation for Dagster beginners to build on. With detailed explanations, quizzes, and practice problems, this course will help you and your team get to your “Aha!” moment in no time.

Check it out, give us your feedback, and look out for more learning content in the future.

Erin Cochran will be unveiling Dagster University on day four of Dagster Launch Week (Oct 11th).

Dagster UI performance improvements:

Global Asset Graph performance has been dramatically improved for graphs with more than 50 assets - the first time you load the graph, it will be cached to disk, and subsequently, the graph should load instantly. Furthermore, we have made changes that make navigating even the largest graphs (1,000+ assets) a smooth experience.

Contributors since 1.4.0:

We would like to thank all of the community members who have contributed to Dagster since the 1.4 release, building up to this week's 1.5 launch.

Peng Wang | Janos Roden | Chris Histe | Sonny Arora, Ph.D. | Zach Paden | tnk-dev | Sergey Mezentsev | Christian Hollinger | zyd14 | Judah Rand | Markus Werner | motuzov | Casper Weiss Bang | L. D. Nicolas May | Edvard Lindelof | Tadas Malinauskas | harrylojames | Francisco García | Tambe Tabitha Achere | Sethu Sabarish | Alex Kan | Michel Rouly | Rui | Divyansh Tripathi | Abdó Roig-Maranges | Klim Lyapin | Daniel Gafni | Sirawat S.

Community Contributions Highlights

- `has_dynamic_partition` implementation has been optimized. Thanks @edvardlindelof!

- [dagster-airbyte] Added an optional `stream_to_asset_map` argument to `build_airbyte_assets` to support the Airbyte prefix setting with special characters. Thanks @chollinger93!

- [dagster-k8s] Moved “labels” to a lower precedence. Thanks @jrouly!

- [dagster-k8s] Improved handling of failed jobs. Thanks @Milias!

- [dagster-databricks] Fixed an issue where `DatabricksPysparkStepLauncher` fails to get logs when `job_run` doesn’t have `cluster_id` at root level. Thanks @PadenZach!

- Docs type fix from @sethusabarish, thank you!