Ida revolutionized fresh produce ordering by building a multi-tenant Dagster architecture that processes millions of rows across hundreds of stores in minutes daily, turning chaotic retailer data into AI forecasting that prevents thousands of tons of food waste.

KEY RESULTS

- Processes millions of rows across hundreds of stores in under a few minutes daily

- 30-minute feature deployments from idea to production

- Zero downtime tolerance - stores receive predictions before opening every morning

- Preventing thousands of tons of food waste daily and optimizing customer operations for a 10x ROI

The Challenge

Ida is a proposal and order management software dedicated to fresh produce. Today, more than 150 million tons of fresh foods—fruits and vegetables, meat, fish, and poultry—are discarded every year. This startup is on a mission to eradicate food waste: Ida aims to revolutionize fresh produce supply chains by providing AI-powered demand forecasting and order management for perishable goods that expire in days.


It’s a huge challenge because fresh produce ordering is still highly analog: a manager walks through the store, manually counting apples and scribbling order quantities on a clipboard. This scene plays out daily across thousands of Ida customer stores, generating data that is fragmented, inconsistent, and liable to change without warning.

The Data Chaos Reality

"These aren't edge cases – they're Tuesday," explains Louis Bertolotti, Ida's founding engineer. The company faces constant data chaos:

- Carrefour's data looks nothing like Système U's data - different column names, business logic, and edge cases

- API formats change overnight without notification, breaking existing integrations

- Someone deciding that "KG" now means something different than it did yesterday

- Accidentally receiving last year's sales file

- A new product category appearing with completely different units of measurement

- Data quality varies dramatically - from sophisticated APIs to manual CSV uploads

Previous Architecture Pain Points

Ida's platform team initially built a serverless, event-driven pipeline on GCP and Cloud Run. "It was really cost-efficient for us, but very slow for the data pipeline itself," said Mathieu Grosso, an AI engineer who is also Ida's co-founder and CTO. "Every time we had a new client, we had to do another Lambda. We had to do it over and over again; it was really painful."

The team needed an architecture that could handle:

- Multi-tenant data with different formats from each retailer

- Extremely fast iteration (clients changing API formats overnight)

- Tight 6 AM deployment deadlines when retailers called with urgent issues

- The need to "fix and deploy before 8 AM" when retailers have broken ordering processes

The Solution: Multi-Tenant Magic

The Ida team rebuilt what they describe as "something that probably shouldn't work, according to conventional wisdom, but handles millions of rows across hundreds of stores in under a few minutes every morning." Their unconventional decisions are driven by the unique challenges of ingesting chaotic data from an analog market:

- Multiple dbt projects in a single Dagster instance (multi-tenant by design)

- Running dbt in production (instead of just for analytics)

- ELT data transformation of "chaotic data" from many sources using BigQuery

- Branch deployments instead of a dedicated staging environment for ELT

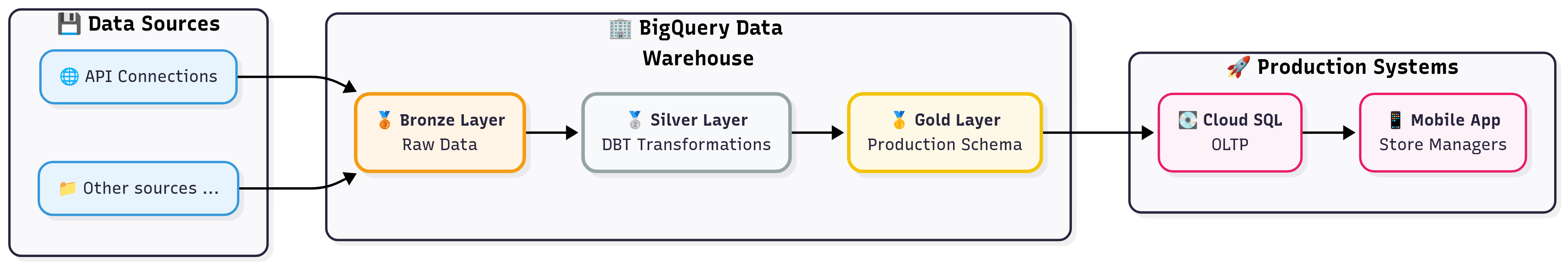

The Architecture: Medallion with Asset-Aware Orchestration

Their data flow follows a medallion architecture (Bronze → Silver → Gold), orchestrated through Dagster's asset-aware model:

🥉 Bronze Layer: Embrace the Chaos

Data comes in through many different channels. Everything lands raw and unprocessed in BigQuery tables. No ETL magic, just ELT reality with BigQuery as their central data warehouse.

🥈 Silver Layer: dbt as Production Middleware

This is where the magic happens. They use dbt not as an analytics tool, but as production middleware (what their team calls "modeling clay for data"), all running directly on BigQuery's compute engine.

- Raw → Staging (STG): Clean, standardize, and deduplicate. Column names get normalized, data types get fixed, obvious errors get flagged.

- Staging → Dimensions & Facts: Transform into their "Ida format":

  - DIM tables: Product catalogs, stores, suppliers (descriptive, time-independent)

  - FACT tables: Sales, orders, stock movements (events and measurements)
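The staging step can be illustrated with a minimal Python sketch. Ida does this work in dbt SQL on BigQuery, so the code below is only an analogy: the column names, the `COLUMN_MAPS` table, and the `to_staging` helper are all hypothetical and do not come from Ida's codebase.

```python
# Hypothetical per-retailer column mappings (assumption: real retailer
# schemas are not given in the article).
COLUMN_MAPS = {
    "cool-retailer": {"StoreID": "store_id", "SKU": "product_id", "Qty (KG)": "quantity_kg"},
    "fancy-retailer": {"magasin": "store_id", "code_article": "product_id", "poids_kg": "quantity_kg"},
}


def to_staging(tenant: str, rows: list[dict]) -> list[dict]:
    """Rename columns to the shared schema, fix types, and drop exact duplicates."""
    mapping = COLUMN_MAPS[tenant]
    seen = set()
    staged = []
    for row in rows:
        # Keep only known columns, renamed to the shared internal names.
        clean = {mapping[k]: v for k, v in row.items() if k in mapping}
        # Normalize the data type: quantities may arrive as strings.
        clean["quantity_kg"] = float(clean["quantity_kg"])
        # Deduplicate on the full cleaned record.
        key = tuple(sorted(clean.items()))
        if key not in seen:
            seen.add(key)
            staged.append(clean)
    return staged


cool = to_staging("cool-retailer", [
    {"StoreID": "s1", "SKU": "apple", "Qty (KG)": "12.5"},
    {"StoreID": "s1", "SKU": "apple", "Qty (KG)": "12.5"},  # duplicate row
])
fancy = to_staging("fancy-retailer", [
    {"magasin": "s1", "code_article": "apple", "poids_kg": 12.5},
])
print(cool == fancy)  # True
```

Two differently shaped inputs end up as the same staged record, which is the property the downstream DIM and FACT models rely on.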

🥇 Gold Layer: Production-Ready Data

Clean, tested data flows into Cloud SQL (OLTP database) and powers the mobile app that produce managers use every morning.

Multi-Tenant Project Structure

harvest_flow/
├── dbt/
│   ├── cool-retailer/
│   │   ├── models/staging/stg_sales.sql
│   │   ├── models/transform/fact_sales.sql
│   │   └── schema.yml
│   └── fancy-retailer/
│       └── ...
└── dagster_etl/
    ├── cool-retailer/assets/load_gold.py
    └── fancy-retailer/assets/...

Dagster automatically converts:

dbt/cool-retailer/models/staging/stg_sales.sql → Dagster asset cool-retailer/dbt/stg_sales

dbt/cool-retailer/models/transform/fact_sales.sql → Dagster asset cool-retailer/dbt/fact_sales
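The naming convention above can be sketched as a small pure-Python function. This is not the Dagster dbt integration's API, which derives assets from the dbt project itself; `asset_key_for_model` is a hypothetical helper that only illustrates the path-to-key mapping shown in the two examples.

```python
from pathlib import PurePosixPath


def asset_key_for_model(model_path: str) -> list[str]:
    """Map 'dbt/<tenant>/models/.../<model>.sql' to [<tenant>, 'dbt', <model>]."""
    path = PurePosixPath(model_path)
    parts = path.parts
    # Expect at least: dbt / <tenant> / models / <file>.sql
    if len(parts) < 4 or parts[0] != "dbt" or parts[2] != "models":
        raise ValueError(f"unexpected dbt model path: {model_path}")
    tenant = parts[1]
    model_name = path.stem  # file name without the .sql suffix
    return [tenant, "dbt", model_name]


print("/".join(asset_key_for_model("dbt/cool-retailer/models/staging/stg_sales.sql")))
# cool-retailer/dbt/stg_sales
```

Prefixing every key with the tenant name is what keeps two retailers' `stg_sales` models from colliding inside a single Dagster instance.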

Automatic Dependency Resolution

-- dbt/cool-retailer/models/transform/fact_sales.sql
SELECT ... FROM {{ ref('stg_sales') }}  -- This ref() creates the dependency!

# In cool-retailer/assets/load_gold.py
from dagster import AssetKey, asset

@asset(deps=[AssetKey(["cool-retailer", "dbt", "fact_sales"])])
def load_sales_cool_retailer():
    # This Python asset depends on the dbt model above!
    ...

Complete Execution Flow

When Cool-Retailer sends new sales data, here's what Dagster automatically executes:

- Raw data ingestion

- cool-retailer/dbt/stg_sales (cleans the raw data)

- cool-retailer/dbt/fact_sales (depends on stg_sales)

- load_sales_cool_retailer (Python asset depending on DBT model)

Why this order? Dagster reads the {{ ref('stg_sales') }} in the DBT files and automatically builds the dependency graph. No manual scheduling—pure dependency-driven execution.
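To make the idea of dependency-driven ordering concrete, here is a minimal sketch using only the Python standard library. It is not how Dagster is implemented: the regex, the abbreviated SQL strings, and the `execution_order` helper are assumptions for illustration. It extracts `ref()` calls from model SQL and topologically sorts the resulting graph.

```python
import re
from graphlib import TopologicalSorter

# Matches {{ ref('model_name') }} with single or double quotes.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")

# Hypothetical, abbreviated model SQL; only the ref() calls matter here.
MODELS = {
    "stg_sales": "SELECT * FROM raw.sales",
    "fact_sales": "SELECT * FROM {{ ref('stg_sales') }}",
}


def execution_order(models: dict[str, str], extra_deps: dict[str, set[str]]) -> list[str]:
    """Build a node -> upstream-dependencies graph and return a valid run order."""
    graph = {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}
    graph.update(extra_deps)  # e.g. Python assets that declare deps explicitly
    return list(TopologicalSorter(graph).static_order())


order = execution_order(MODELS, {"load_sales_cool_retailer": {"fact_sales"}})
print(order)  # ['stg_sales', 'fact_sales', 'load_sales_cool_retailer']
```

Because the order is derived from the references themselves, adding a new model with a `ref()` changes the run order without anyone editing a schedule.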

The Results

Performance Magic: BigQuery + DBT + Dagster

The challenge: Cool-Retailer's daily processing requires handling hundreds of stores, millions of sales rows, and complex aggregations.

The solution: BigQuery's columnar storage + partitioning + DBT's incremental models = linear scalability. Full DBT runs complete in under a few minutes for hundreds of stores processing millions of sales rows daily.

Real-World War Stories

The 30-Minute Feature

Last month, Cool-Retailer told them about a new product flag indicating seasonal inventory rotation. In a traditional setup, this would be a multi-day engineering effort.

With their DBT setup? "I added a few lines to the dedicated dbt model, and let Dagster handle the execution of all the impacted assets. Thirty minutes later, our models were categorizing seasonal products across hundreds of locations. Two days later, they told us the logic missed winter items. I updated it in 5 minutes."

This is the "modeling clay" philosophy in action—DBT lets them reshape data transformations as quickly as they can think of them.

Safety Net: Production DBT Tests Against Data Chaos

To handle the unexpected, DBT tests are their lifeline. With their ELT approach, they catch anomalies through comprehensive DBT testing that runs every single morning before any predictions go live:

From simple uniqueness tests:

# dbt/cool-retailer/models/schema.yml
models:
  - name: fact_sales
    columns:
      - name: id
        tests:
          - unique
          - not_null

To custom SQL tests that label each issue:

-- dbt/cool-retailer/tests/dbt_test.sql
SELECT
    ...
    CASE
        WHEN condition_A THEN 'Error message A'
        WHEN condition_B THEN 'Error message B'
    END AS issue
FROM {{ ref('stg_sales') }}

Every morning, before any predictions run, Dagster executes hundreds of these tests across all retailer projects. If cool-retailer/dbt/stg_sales fails a uniqueness test, the entire downstream pipeline stops. No ML models get bad data, no store managers get broken recommendations.
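The gating behavior can be sketched in plain Python. This mirrors the logic of the `unique` and `not_null` tests, not dbt's or Dagster's implementation; `run_column_tests` and `run_pipeline` are hypothetical names introduced for illustration.

```python
def run_column_tests(rows, column):
    """Return failure messages for not_null / unique checks on one column."""
    values = [row.get(column) for row in rows]
    failures = []
    if any(v is None for v in values):
        failures.append(f"not_null failed on {column}")
    non_null = [v for v in values if v is not None]
    if len(non_null) != len(set(non_null)):
        failures.append(f"unique failed on {column}")
    return failures


def run_pipeline(rows, downstream):
    """Run the downstream step only if every test passes."""
    failures = run_column_tests(rows, "id")
    if failures:
        # Stop here: nothing downstream ever sees the bad data.
        raise RuntimeError("; ".join(failures))
    return downstream(rows)


print(run_pipeline([{"id": 1}, {"id": 2}], len))  # 2
```

Passing rows with a duplicated `id` raises `RuntimeError` before `downstream` is called, which is the "entire downstream pipeline stops" behavior described above.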

Multi-Tenant Magic Implementation

The part of the Dagster implementation the team is proudest of, Grosso said, is the multi-tenant dbt setup, where "every dbt model automatically becomes a Dagster asset with full lineage tracking" and dependencies are resolved automatically from dbt ref() calls. They call it their "multi-tenant magic": a separate dbt project for each retailer, all orchestrated in a single Dagster instance.

This approach handles the reality that different retailers have completely different data formats while ensuring identical outputs for ML models and production applications. "Now we can model the data to always have the same internal schema with a medallion architecture. So we end up with clean tables, which are all really clean and tidy, and it was really thanks to the Dagster integration."

Different inputs, identical outputs. That's the multi-tenant magic.

Branch Deployment Success

“The really interesting part for us about Dagster’s branch deployment is the fact that we can connect it to our agent. Because we're on GCP, we have a Kubernetes agent in place to do all of the processing, which is vastly easier for us.”

Looking ahead

Ida's success with Dagster has positioned them for aggressive expansion across European fresh produce markets. The team is currently adapting their architecture for a major new retailer that will send sales data in real time — transforming their batch-oriented approach into rapid micro-batches while maintaining Dagster's asset-aware dependency model.

"We're implementing comprehensive Dagster asset checks beyond our dbt tests," explained Bertolotti. "When a micro-batch receives anomalous data, Dagster can automatically pause downstream processing and alert our team."

The company is also building metadata-driven anomaly detection using Dagster's rich metadata system to track data volumes, processing times, and business metrics. This system automatically flags when patterns break in the now-digitized world of fresh produce data.
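As a rough illustration of a volume-based check, the sketch below flags a daily row count that deviates sharply from recent history. The article does not describe Ida's detection logic, so the z-score approach, the threshold of 3.0, and the `is_volume_anomaly` name are all assumptions; in practice the history would come from metadata recorded on earlier runs.

```python
from statistics import mean, stdev


def is_volume_anomaly(history, today, threshold=3.0):
    """Flag today's row count if it is more than `threshold` standard
    deviations away from the mean of previous runs."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # perfectly stable history: any change is unusual
    return abs(today - mu) / sigma > threshold


recent_row_counts = [1000, 1020, 980, 1010, 990]  # hypothetical daily volumes
print(is_volume_anomaly(recent_row_counts, 1005))  # False
print(is_volume_anomaly(recent_row_counts, 200))   # True
```

A check like this would catch the "accidentally receiving last year's sales file" class of problem only when the volume differs; content-level checks would still be needed for files of a plausible size.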

They're also redesigning their asset interface around Dagster's partitioning system, using store-level partitions to process hundreds of locations in parallel.

Key takeaways

- Zero downtime tolerance achieved: Store managers now receive ML-powered demand predictions every morning instead of relying on manual clipboard counting, enabling data-driven ordering decisions.

- Comprehensive data anomaly testing: Use Pandera and table integrations in Dagster to validate every incoming dataset, then use dbt tests as asset checks to catch data anomalies before predictions go live.

- Immediate failure tracing: Asset lineage shows exactly which assets failed and which downstream systems were affected. Rollback is quick because Dagster tracks asset versions.

- Linear scalability: The combination of BigQuery's columnar storage, dbt's incremental models, and Dagster's orchestration allows full dbt runs to complete in minutes for hundreds of stores processing millions of sales rows daily.

- Development velocity: dbt becomes "modeling clay for data"; rapid reshaping of transformations allows 30-minute feature deployments from idea to production.

- Saved from the garbage: Ida helps prevent thousands of tons of food from being wasted every day with AI demand predictions for fresh products that expire quickly.