How the next step in the evolution of the Data Engineering role requires a platform approach.

Pedram Navid is the Head of Data Engineering and Developer Relations at Dagster Labs. The following article was first published on Pedram's Data Based blog.

Of Data Scientists and Data Engineers…

In the late 2010s, when I was first advancing my career, the rise of the Data Scientist was everywhere. It was once the sexiest job of the 21st century, but like all inflationary things, the bubble popped, and soon it was relegated from A Status Job to Yet Another Crummy Job (YACJ).

Soon, companies realized that a team of 20 Data Scientists couldn’t be effective without access to good data, and the role of the Data Engineer was brought to the forefront. Data Engineers would be responsible for the ingestion and transformation of data and the platform that enables Data Scientists, while the Data Scientists would become consumers of that data.

While this seemed like a great division of labor on paper, engineers famously do not want to write ETL pipelines. As far back as 2016, Jeff Magnusson at Stitch Fix suggested that engineers build platforms, services, and frameworks, not ETL pipelines.

This largely did not happen.

A New Generation of Tools

Back in 2016, Hadoop clusters were still the status quo. Spark and the JVM were the best we had. Scala was cool. What soon changed wasn’t that Data Scientists and Data Engineers ended up listening to Jeff, but that a new breed of software was born.

Cloud Data Warehouses were just becoming a natural replacement for the existing data systems. Instead of requiring a team of dedicated Infrastructure Engineers to scale your data requirements, you just needed a dedicated credit card.

Instead of assigning Data Scientists the task of writing ETL pipelines, we gave that task to Fivetran, Stitch, and other SaaS providers. The birth of the Modern Data Stack was just around the corner with Snowflake’s IPO in 2020.

Meanwhile, Data Scientists sat unhappy that they were using their PhDs to create dashboards. Consultants were picking up the slack until a little company called Fishtown Analytics open-sourced a tool they were using for transforming data in the warehouse. dbt was born and exploded in popularity, giving rise to the Analytics Engineer role. This role supplanted the Data Scientist, and soon the Data Scientists were freed of the chains of answering Yet Another Stakeholder Question (YASQ) and were able to move on to more important work, like creating flashcards, founding startups, and getting into fights on Twitter.

Data Engineering: It ain't much, but it's honest work

Data Engineers, however, were stuck writing ETL pipelines. Sure, you could pay Fivetran to sync your Salesforce data, and maybe Stripe had a native Snowflake connector, but there was no escaping the long tail of data needs. Cost constraints meant that more and more companies were looking to bring some of the offloaded work back in-house. It was harder and harder to justify spending your pennies on every row that changed in a database.

As the dust settled, and interest rates rose, and VCs got bored of data and moved on to AI, we finally moved toward some sense of normalcy in data. Instead of hot takes, the data people continued to do the work it took to help make a business operate. We came to terms with the fact that Data Work is often just Blue Collar Work.

Instead of hiring 20 data scientists and asking them to ‘find insights’, we had smaller, more focused teams that worked toward delivering actual value to different lines of business. From building data models that made it easier to self-serve using modern BI tools, to creating recommendation models or predicting churn, the bread-and-butter stuff continued.

As teams matured and the frenzy of SaaS died down, we’ve started to return to the dilemma posed by Magnusson back in 2016: What should Data Engineers be working on?

The Second Coming of the Data Platform Engineer

I believe we’ve passed the trough of disillusionment and are entering the plateau of productivity. We’ve made a lot of progress in the last ten to fifteen years in data. The tooling is better than it has ever been, and it’s possible to do so much more with much less. DuckDB on a laptop is replacing MS Access on a corporate desktop. This is a good thing.

With that rise in productivity among data professionals of all kinds, from ML Engineers to Analytics Engineers, to Data Scientists and beyond, pressure is starting to build on Data Engineers.

There are two ways to react to that pressure. The easiest is to hire more Data Engineers to support your business, but we are fortunate that we live in a (relatively) high-interest-rate era.

High interest rates cure all ailments.

From Data Engineer to Data Platform Engineer

Instead, Data Engineers are coming back to the original sin of Data Engineering, building bespoke custom pipelines for your downstream consumers, and they’re solving it the same way we were trying to solve it 10 years ago: building platforms, frameworks, and services.

Part of the problem, I think, is the title Data Engineer simply beckons you to build pipelines. The next evolution of the role is more akin to a Data Platform Engineer.

This is someone who is tasked not with building ETL pipelines, but with making it possible for their various consumers to build any pipeline they need without having to resort to a complex higher language.

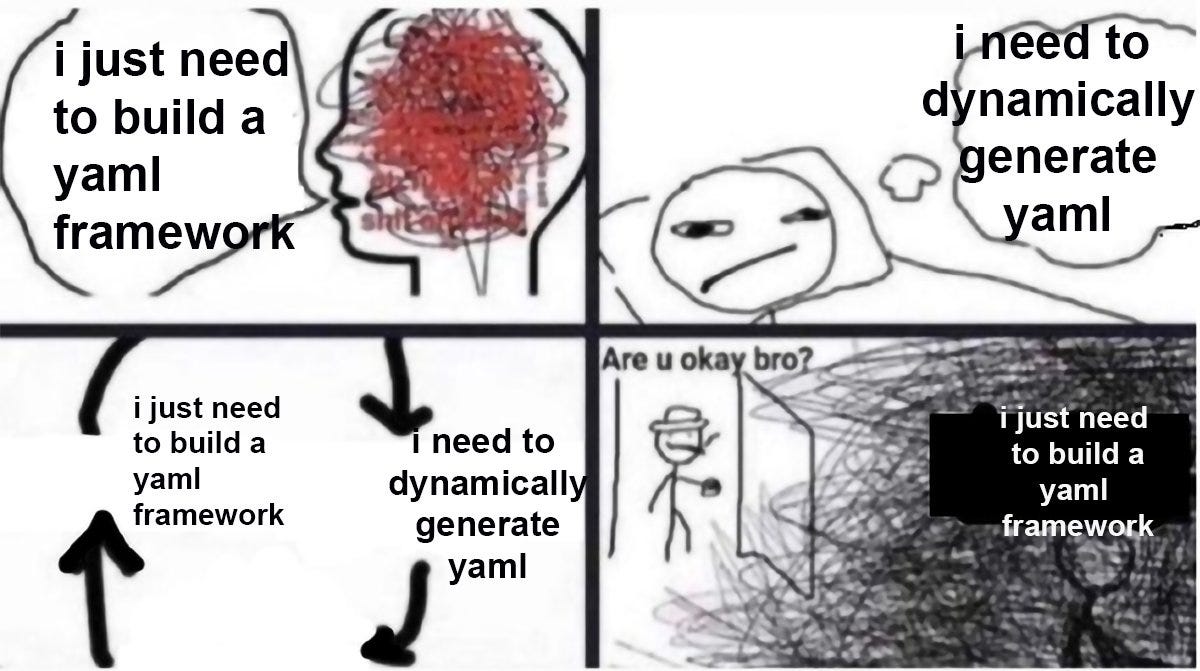

How to do that well is still not a solved problem: whether the answer is bespoke YAML-to-pipeline factories or something more purpose-built remains to be seen. But what I am seeing is more and more companies starting to move toward a framework approach to data platforms. It’s the only way to scale the demands of a data platform without scaling up the number of Data Engineers supporting your analysts.
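To make the "config-to-pipeline factory" idea concrete, here is a minimal sketch in Python. It is not how any particular company or tool does it. The step types (`filter`, `rename`), the `build_pipeline` function, and the shape of the spec are all hypothetical, and the spec is written as a plain dict standing in for what a parsed YAML file might look like.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]
Step = Callable[[Iterable[Row]], Iterable[Row]]


# Hypothetical step builders that a platform team would own and maintain.
def make_filter(column: str, equals: object) -> Step:
    def step(rows: Iterable[Row]) -> Iterable[Row]:
        return (r for r in rows if r.get(column) == equals)
    return step


def make_rename(old: str, new: str) -> Step:
    def step(rows: Iterable[Row]) -> Iterable[Row]:
        return ({(new if k == old else k): v for k, v in r.items()} for r in rows)
    return step


# Registry mapping a declarative step type to the code that implements it.
STEP_BUILDERS: Dict[str, Callable[..., Step]] = {
    "filter": make_filter,
    "rename": make_rename,
}


def build_pipeline(spec: dict) -> Callable[[Iterable[Row]], List[Row]]:
    """Turn a declarative spec into a callable pipeline."""
    steps: List[Step] = []
    for step_spec in spec["steps"]:
        params = dict(step_spec)  # copy so the caller's spec is not mutated
        kind = params.pop("type")
        if kind not in STEP_BUILDERS:
            raise ValueError(f"unknown step type: {kind!r}")
        steps.append(STEP_BUILDERS[kind](**params))

    def run(rows: Iterable[Row]) -> List[Row]:
        for step in steps:
            rows = step(rows)
        return list(rows)

    return run


# What an analyst would write: configuration only, no pipeline code.
spec = {
    "name": "active_customers",
    "steps": [
        {"type": "filter", "column": "status", "equals": "active"},
        {"type": "rename", "old": "id", "new": "customer_id"},
    ],
}

pipeline = build_pipeline(spec)
result = pipeline([
    {"id": 1, "status": "active"},
    {"id": 2, "status": "churned"},
])
print(result)  # [{'customer_id': 1, 'status': 'active'}]
```

The point of the sketch is the division of labor: the platform team owns the step builders and the factory, while consumers only describe what they want.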

What I like the most about this is that it finally gives Data Engineers something to look forward to. Career progression for Data Engineers often felt like it was simply bigger data and more complex pipelines, but most Data Engineers I know prefer software engineering to data analysis, and pipeline building is by its very nature closer to data analysis than building software.

While building pipelines will never go away, being able to see some light at the end of the tunnel is sometimes all we need.

What is a Data Platform Engineer?

Data Platform Engineering takes data engineering one step further. Instead of engineering the pipelines themselves, a data platform engineer builds a platform that enables users to spin up their own pipelines on demand. Rather than building out an opaque backend, data platform engineering provides transparency, so the entire org can be engaged with data pipelines.

FAQs about data platform engineering

How does a Data Platform Engineer differ from a traditional Data Engineer?

A traditional data engineer primarily focuses on building and maintaining individual ETL pipelines to move and transform data for specific analytics. In contrast, a data platform engineer shifts to building the underlying platforms, frameworks, and services that enable other data professionals to create their own pipelines and access data in a self-service manner. The role of Data Platform Engineer emphasizes architectural design, scalability, and providing reusable tools.

Why is the Data Platform Engineer role gaining prominence now?

The increasing volume and complexity of data is creating major scaling challenges for companies. They need to expand data operations efficiently without a linear increase in data engineering headcount. By hiring a Data Platform Engineer, companies can move beyond bespoke pipeline development.

Data Platform Engineers foster productivity among data scientists and analysts by providing them with robust, self-service data infrastructure. This strategic shift enables faster insights and more effective data utilization across the enterprise.

What are the primary responsibilities of a Data Platform Engineer?

Data Platform Engineers are responsible for designing, building, and maintaining the core infrastructure that supports all data-intensive operations within an organization. This includes selecting appropriate technologies, establishing data governance, implementing security measures, and optimizing data storage and retrieval systems. Their main goal is to create a reliable and scalable data ecosystem that empowers various data consumers.

How do Data Platform Engineers support self-service data initiatives?

Data Platform Engineers support self-service by developing internal developer platforms, reusable APIs, and standardized tools that abstract away complexity. They create an environment where data professionals can independently ingest, transform, and analyze data without constant reliance on a centralized engineering team. This approach reduces bottlenecks, accelerates development cycles, and fosters greater autonomy for data consumers.
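As a rough illustration of what a "reusable API" for self-service might look like, the sketch below shows a tiny registry that lets consumers register their own pipelines and lets anyone list what exists and who owns it. Everything here is hypothetical, including the `DataPlatform` class, its `pipeline` decorator, and the `churn_features` example. A real platform would add scheduling, permissions, and storage on top.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]
PipelineFn = Callable[[List[Row]], List[Row]]


class DataPlatform:
    """Hypothetical self-service registry owned by the platform team."""

    def __init__(self) -> None:
        self._pipelines: Dict[str, Tuple[str, PipelineFn]] = {}

    def pipeline(self, name: str, owner: str) -> Callable[[PipelineFn], PipelineFn]:
        """Decorator that consumers use to register a pipeline themselves."""
        def register(fn: PipelineFn) -> PipelineFn:
            if name in self._pipelines:
                raise ValueError(f"pipeline {name!r} is already registered")
            self._pipelines[name] = (owner, fn)
            return fn
        return register

    def catalog(self) -> List[Tuple[str, str]]:
        """List every pipeline and its owner, so nothing is hidden."""
        return sorted((name, owner) for name, (owner, _) in self._pipelines.items())

    def run(self, name: str, rows: List[Row]) -> List[Row]:
        _, fn = self._pipelines[name]
        return fn(rows)


platform = DataPlatform()


# Written by an analyst, not by the platform team.
@platform.pipeline(name="churn_features", owner="analytics")
def churn_features(rows: List[Row]) -> List[Row]:
    return [{**r, "is_inactive": r["days_since_login"] > 30} for r in rows]


catalog = platform.catalog()
output = platform.run("churn_features", [{"user": "a", "days_since_login": 45}])
print(catalog)  # [('churn_features', 'analytics')]
print(output)   # [{'user': 'a', 'days_since_login': 45, 'is_inactive': True}]
```

The registration step is the only contact point between the consumer and the platform, which is what keeps the central team out of the critical path.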