Learn the fundamentals of a healthy data engineering lifecycle to optimize pipeline and asset production.

The way you work matters.

If your job title is data engineer, machine learning engineer, analytics engineer, data scientist, or data platform engineer, there’s a good chance that a hefty part of it is building production data assets. A production data asset is a table, file, folder, ML model, or any other persistent object that captures some understanding of the world and that other people or software depend on.

The way you go about building these data assets (the practices, processes, and tools that you use) has a huge impact on:

- Your Development Velocity: How quickly you can ship and evolve them.

- Their Reliability: How much you can trust your data assets to be available when needed and to contain quality and timely data.

So how should you work? This post aims to lay out the fundamentals of a healthy Data Engineering Lifecycle, the process that the best data teams use to author, evolve, and maintain data pipelines and the data assets that those pipelines produce. Teams that adopt more of this process reap the rewards of faster development velocity and more reliable data assets. Teams that adopt less get mired in slow progress and a whack-a-mole of broken data pipelines.

Principles Backing the Data Engineering Lifecycle

The practices of the Data Engineering Lifecycle rest upon a few principles.

Data Comes From Code

Data describes facts about the real world, but every byte in every data file and record in every table was written there by software. Because of this, effective data management rests on tracking which code is responsible for generating your data and managing that code with software engineering practices. These practices don’t need to be fancy, but a few basics go a long way:

- The code that produces data assets is version-controlled, using software like git.

- Production data is built using the code that’s on the main branch of the version control system.

- When looking at code, you have a way – e.g. with documentation, software, or naming convention – of finding out what data assets it’s meant to produce.

- For any production data asset, you have a way of finding out what code produced it and is responsible for keeping it up-to-date.
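One lightweight way to make the code-to-asset mapping discoverable in both directions is a registry that records which function builds each asset. The sketch below is a minimal illustration, not any particular tool’s API: the `produces` decorator, `ASSET_REGISTRY`, `code_location`, and the asset name are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical in-process registry: asset name -> function that builds it.
ASSET_REGISTRY: Dict[str, Callable[..., object]] = {}


def produces(asset_name: str) -> Callable[[Callable[..., object]], Callable[..., object]]:
    """Decorator declaring which asset a function is responsible for."""
    def register(fn: Callable[..., object]) -> Callable[..., object]:
        # Enforce a single owner per asset so "who builds this?" has one answer.
        if asset_name in ASSET_REGISTRY:
            owner = ASSET_REGISTRY[asset_name].__qualname__
            raise ValueError(f"{asset_name!r} is already produced by {owner}")
        ASSET_REGISTRY[asset_name] = fn
        return fn
    return register


def code_location(asset_name: str) -> str:
    """Reverse lookup: which module and function produce this asset?"""
    fn = ASSET_REGISTRY[asset_name]
    return f"{fn.__module__}.{fn.__qualname__}"


@produces("analytics.daily_orders")
def build_daily_orders() -> list:
    # Placeholder body; a real pipeline would read inputs and write the table here.
    return [{"day": "2024-01-01", "orders": 3}]


print(code_location("analytics.daily_orders"))
```

Reading the decorator tells you what a function produces; `code_location` answers the reverse question for any registered asset.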

Vet Changes Before Deploying Them

A huge portion of the failures, outages, and data quality issues in data pipelines occur immediately after a change is deployed – e.g. a change to a SQL query includes a typo or makes invalid assumptions about the data it depends on.

To prevent these kinds of issues from affecting the people and software that depend on your data assets, you need to be able to catch them before they make it to production. Catching issues usually requires a mix of manual and automatic verification. Manual verification means running your pipeline and inspecting the data it produces to make sure it looks like you expect. Automatic verification means writing unit tests and data quality tests, and having a system automatically execute them before deploying a change.

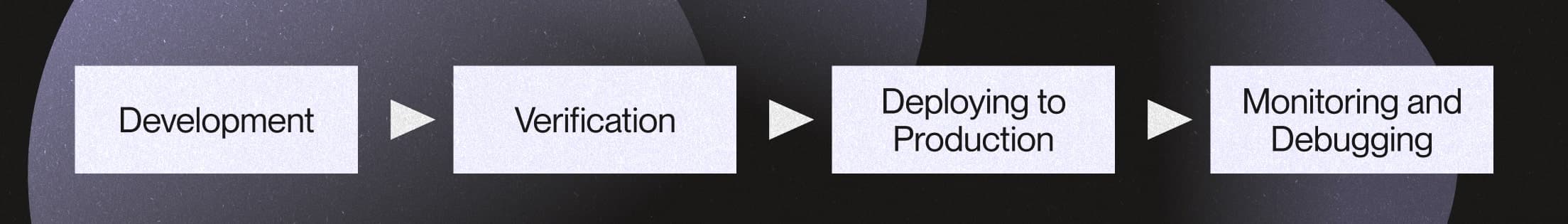

The phases of the Data Engineering Lifecycle

The lifecycle covers the phases of a single “act” of data engineering: adding to or changing your data pipelines. For example:

- Adding a new table

- Adding a feature and retraining an ML model

- Fixing a bug in how a table is derived

Data practitioners move through this lifecycle many times, sometimes multiple times per day, as they build and evolve their data pipelines.

Development

Data engineering starts with development: you write code that improves or expands your data pipeline.

During this phase, you edit code, execute it, observe its impact on the data assets that it produces, and repeat this process until it produces what you expect it to.

Nothing that happens during this phase should impact production data.

During this phase, you also lay the groundwork for automated testing: you write unit tests to encode your expectations about how your code functions, and you write data quality tests to encode your expectations about what the data produced looks like.
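To make the unit-test half of this concrete, here is a small sketch using Python’s built-in `unittest`. The transformation `dedupe_latest` (keep the most recent record per ID) is a hypothetical example; the point is that each test feeds it a tiny, hand-built input whose correct output you know exactly.

```python
import unittest
from typing import Dict, List


def dedupe_latest(rows: List[Dict]) -> List[Dict]:
    """Keep only the most recent row for each id (hypothetical transformation)."""
    latest: Dict[int, Dict] = {}
    for row in rows:
        current = latest.get(row["id"])
        # ISO-8601 date strings compare correctly as plain strings.
        if current is None or row["updated_at"] > current["updated_at"]:
            latest[row["id"]] = row
    # Sort by id so the output order is deterministic.
    return sorted(latest.values(), key=lambda r: r["id"])


class DedupeLatestTest(unittest.TestCase):
    def test_keeps_most_recent_row_per_id(self):
        rows = [
            {"id": 1, "updated_at": "2024-01-01", "status": "pending"},
            {"id": 1, "updated_at": "2024-01-03", "status": "shipped"},
            {"id": 2, "updated_at": "2024-01-02", "status": "pending"},
        ]
        result = dedupe_latest(rows)
        self.assertEqual([r["status"] for r in result], ["shipped", "pending"])

    def test_empty_input(self):
        self.assertEqual(dedupe_latest([]), [])
```

Saved to a file, these tests run with `python -m unittest <file>`, which is fast enough to sit inside the edit-execute-observe loop.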

From a tooling perspective, setting yourself up for success during this phase requires focusing on iteration speed. The faster you can move through the edit-execute-observe loop, the faster you can move on to the next phase.

Verification

The goal of this phase is to catch problems – like errors or data quality issues – before they make it to production and impact stakeholders.

Testing is also useful during development, but during verification you take a wider, more comprehensive approach to testing the implications of your change. For example:

- Running it on full production data instead of sample data

- Refreshing all downstream data assets to see how your change affects them

- Running a full suite of unit tests and data quality tests

The more realistic your test environment is, the more likely you are to catch problems before they make it to production. But it’s critical that the test environment is different from production, or your stakeholders become the guinea pigs for your changes.

As discussed in the principles section above, catching issues usually requires a mix of manual and automatic verification:

- Automated unit tests verify that data transformation code behaves as expected on small, controlled inputs.

- Automated data quality tests verify that data transformation code produces the expected results when given full, uncontrolled inputs.

- Manual testing is the most flexible and lets you explore the outputs of your revised data pipeline in an unstructured way.
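Data quality tests, unlike unit tests, make assertions about whatever the pipeline actually produced. Below is a minimal, library-free sketch; the function name `check_orders`, the column names, and the specific rules (minimum row count, non-null key, unique key, non-negative amount) are illustrative assumptions. Tools such as dbt, with its built-in `unique` and `not_null` tests, offer richer versions of the same idea.

```python
from typing import Dict, List


def check_orders(rows: List[Dict], min_rows: int = 1) -> List[str]:
    """Return human-readable failures; an empty list means every check passed."""
    failures: List[str] = []

    if len(rows) < min_rows:
        failures.append(f"expected at least {min_rows} rows, got {len(rows)}")

    null_ids = sum(1 for r in rows if r.get("order_id") is None)
    if null_ids:
        failures.append(f"{null_ids} rows have a null order_id")

    ids = [r["order_id"] for r in rows if r.get("order_id") is not None]
    duplicates = len(ids) - len(set(ids))
    if duplicates:
        failures.append(f"{duplicates} duplicate order_id values")

    negative = sum(1 for r in rows if (r.get("amount") or 0) < 0)
    if negative:
        failures.append(f"{negative} rows have a negative amount")

    return failures


output = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 1, "amount": 5.0},
    {"order_id": None, "amount": -3.0},
]
for failure in check_orders(output):
    print("FAILED:", failure)
```

Because the checks only look at the output rows, the same function can run in CI against a sandboxed build and, later, against production data.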

Last, but not least, another way to catch problems before they make it into production is by having others review your changes. This isn’t always possible, e.g. if you’re a solo practitioner, but it can have a huge impact.

From a tooling perspective, setting yourself up for success during this phase requires a couple of things:

- A way to isolate your test environment from your production environment. Ideally, during this phase, the data pipeline runs on production inputs but writes its outputs to a sandbox where they won’t impact production.

- Continuous integration (CI) infrastructure that automatically runs tests against your modified data pipeline.
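A common way to get that isolation is to make the output location depend on the environment while leaving input locations alone. This sketch assumes hypothetical `PIPELINE_ENV` and `GIT_BRANCH` environment variables, a production schema named `analytics`, and a convention where each branch writes to its own `sandbox_<branch>` schema; adapt it to however your warehouse organizes schemas.

```python
import os
import re
from typing import Optional

PROD_SCHEMA = "analytics"  # hypothetical production schema name


def input_table(table: str) -> str:
    """Inputs always come from production, so tests see realistic data."""
    return f"{PROD_SCHEMA}.{table}"


def output_table(table: str, env: Optional[str] = None, branch: Optional[str] = None) -> str:
    """Write to production only in the prod environment; otherwise to a per-branch sandbox."""
    env = env or os.environ.get("PIPELINE_ENV", "dev")
    if env == "prod":
        return f"{PROD_SCHEMA}.{table}"
    branch = branch or os.environ.get("GIT_BRANCH", "local")
    # Schema names usually can't contain '/' or '-', so normalize the branch name.
    safe_branch = re.sub(r"[^a-z0-9_]", "_", branch.lower())
    return f"sandbox_{safe_branch}.{table}"


print(input_table("orders"), "->", output_table("daily_orders", env="ci", branch="feature/new-metric"))
# prints: analytics.orders -> sandbox_feature_new_metric.daily_orders
```

Defaulting to a non-production environment means a forgotten setting writes to a sandbox rather than to production.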

Deploying to Production

In this phase, you get your new code running in production and get your data assets in production to reflect that code. When you’re done, decision-makers and applications can start depending on the improved data assets.

Getting code running in production usually involves at least these steps:

- Merging your changes into the main branch

- Bundling up the code that’s in the main branch into an artifact, such as a Docker container

- Copying that artifact to a location where it can be accessed by the software you use to run your production data pipelines

- Telling the software you use to run your production data pipelines about the new artifact
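As a rough sketch of what a CD job might script, the function below turns a commit SHA into the checkout, build, push, and register steps listed above. `git checkout`, `docker build -t`, and `docker push` are standard CLI commands; the registry URL is a placeholder, and the final `echo` stands in for the hypothetical step of notifying your orchestrator, which depends entirely on the tool you run.

```python
import shlex
from typing import List

REGISTRY = "registry.example.com/data-pipelines"  # hypothetical image registry


def deploy_commands(commit_sha: str) -> List[List[str]]:
    """Commands a CD job could run after a change is merged to main."""
    # Tag images by commit so every deployed artifact traces back to code.
    image = f"{REGISTRY}:{commit_sha[:12]}"
    return [
        ["git", "checkout", commit_sha],
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],
        # Placeholder: replace with your orchestrator's real CLI or API call.
        ["echo", f"register {image} with the pipeline runner"],
    ]


for cmd in deploy_commands("3f9c2a1b7d4e5f60718293a4b5c6d7e8f9012345"):
    print(shlex.join(cmd))
```

In a real job you would execute each command with `subprocess.run(cmd, check=True)`, so a failed build or push stops the deploy before the orchestrator is pointed at a missing artifact.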

In addition, you often need to take steps to get your data in production to reflect your changes:

- If you’re working with data that’s normally updated incrementally, you might need to run a backfill to update historical data.

- You might need to issue DDL statements to your database to add, remove, or rename columns in your tables.
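Working out which partitions need a backfill is mostly bookkeeping. Assuming you record, for each daily partition, the code version (for example, a commit SHA) that last wrote it (a hypothetical convention here), the stale partitions are those that are missing or were written by an older version:

```python
from datetime import date, timedelta
from typing import Dict, List


def partitions_to_backfill(
    start: date,
    end: date,
    written_by: Dict[date, str],
    current_version: str,
) -> List[date]:
    """Return daily partitions in [start, end] that are missing or were built by older code."""
    stale: List[date] = []
    day = start
    while day <= end:
        if written_by.get(day) != current_version:
            stale.append(day)
        day += timedelta(days=1)
    return stale


history = {
    date(2024, 1, 1): "abc123",
    date(2024, 1, 2): "def456",
    date(2024, 1, 3): "def456",
}
print(partitions_to_backfill(date(2024, 1, 1), date(2024, 1, 4), history, "def456"))
# Jan 1 was built by older code; Jan 4 was never built.
```

This is deliberately conservative: not every code change alters historical output, so you may choose to backfill only when the logic that changed affects past partitions.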

From a tooling perspective, setting yourself up for success during this phase is all about automating deployment and data reconciliation:

- Automating deployment - Ideally, after merging a change to your version control system’s main branch, your continuous deployment (CD) system can do the rest of the “Getting code running in production” steps automatically.

- Data reconciliation - Tooling can help you understand what historical partitions of your data need to be backfilled after merging a change, as well as what tables have columns that are out of sync with the columns expected by the latest code.
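The schema half of reconciliation can be sketched as a diff between the columns a table has and the columns the latest code expects. The hypothetical `schema_diff_ddl` below emits generic `ALTER TABLE ... ADD COLUMN` and `DROP COLUMN` statements, assumes column types are plain strings, and flags type changes for review instead of guessing engine-specific syntax. It also cannot detect renames (a rename looks like a drop plus an add, which would lose data), so treat its output as a plan to review rather than something to run blindly.

```python
from typing import Dict, List


def schema_diff_ddl(table: str, actual: Dict[str, str], expected: Dict[str, str]) -> List[str]:
    """Compare existing columns to the ones the code expects and propose DDL."""
    statements: List[str] = []
    for name, col_type in expected.items():
        if name not in actual:
            statements.append(f"ALTER TABLE {table} ADD COLUMN {name} {col_type};")
        elif actual[name] != col_type:
            # Type changes are engine-specific and may need a data migration: flag, don't guess.
            statements.append(f"-- REVIEW: {table}.{name} is {actual[name]}, code expects {col_type}")
    for name in actual:
        if name not in expected:
            statements.append(f"ALTER TABLE {table} DROP COLUMN {name};")
    return statements


existing = {"order_id": "BIGINT", "amount": "NUMERIC", "legacy_flag": "BOOLEAN"}
wanted = {"order_id": "BIGINT", "amount": "DOUBLE PRECISION", "currency": "VARCHAR(3)"}
for stmt in schema_diff_ddl("analytics.orders", existing, wanted):
    print(stmt)
```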

Monitoring and Debugging

In the final phase of the lifecycle, your pipeline is running in production, but that doesn’t mean everything is hunky-dory:

- Your testing environment might not accurately represent your production environment, so you might run into errors that you didn’t catch during testing.

- The source data that feeds your pipeline can change.

- External services that your pipeline depends on can become unavailable.

- Changes that other people make to upstream parts of the pipeline can cause the parts of the pipeline that you maintain to fail.

The consequences for stakeholders: unavailable data, out-of-date data, inaccurate data, or data that just doesn’t match their expectations.

During this phase, you need to discover the existence of issues and determine their root causes. Ideally, you’re able to discover them before your stakeholders encounter them. Data quality checks are a very powerful tool here. The same data quality checks that helped catch problems during the verification phase are also very useful on production data.
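Alongside row-level checks, one production check that catches several of the failure modes listed above (an unavailable upstream service, an upstream change that breaks your part of the pipeline) is freshness: has each asset been updated recently enough? A minimal sketch, assuming you can look up each asset’s last successful update time; the asset names and thresholds in `MAX_AGE` are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Hypothetical freshness expectations per asset.
MAX_AGE: Dict[str, timedelta] = {
    "analytics.daily_orders": timedelta(hours=26),
    "ml.churn_model": timedelta(days=8),
}


def stale_assets(last_updated: Dict[str, datetime], now: Optional[datetime] = None) -> List[str]:
    """Return assets that are older than allowed or have never been updated."""
    now = now or datetime.now(timezone.utc)
    stale: List[str] = []
    for asset, max_age in MAX_AGE.items():
        updated = last_updated.get(asset)
        if updated is None or now - updated > max_age:
            stale.append(asset)
    return stale


now = datetime(2024, 1, 10, 12, tzinfo=timezone.utc)
observed = {
    "analytics.daily_orders": datetime(2024, 1, 9, 6, tzinfo=timezone.utc),  # 30 hours old
    "ml.churn_model": datetime(2024, 1, 5, tzinfo=timezone.utc),  # 5.5 days old
}
print(stale_assets(observed, now=now))  # prints: ['analytics.daily_orders']
```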

After you’ve discovered issues, you typically address them by fixing the upstream data source, re-executing pipelines, or fixing code, which takes you back to the first phase of the lifecycle.

From a tooling perspective, setting yourself up for success during this phase is all about observability. You want your tools to automatically track what’s happening and centralize that information, so that you can use it to discover problems and dig into their causes. And you want alerts that separate the signal from the noise, so that you don’t become desensitized by false alarms.
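One simple way to keep alerts meaningful is to fire only when a check fails several runs in a row, so a single transient blip doesn’t page anyone. The `DebouncedAlerter` class and its default threshold of three consecutive failures are hypothetical choices for illustration, not the behavior of any particular alerting tool.

```python
from collections import defaultdict
from typing import DefaultDict


class DebouncedAlerter:
    """Fire an alert only after `threshold` consecutive failures of the same check."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.consecutive_failures: DefaultDict[str, int] = defaultdict(int)

    def record(self, check: str, passed: bool) -> bool:
        """Record one check result; return True exactly when an alert should fire."""
        if passed:
            self.consecutive_failures[check] = 0
            return False
        self.consecutive_failures[check] += 1
        # Fire once when the streak reaches the threshold, not on every failure after it.
        return self.consecutive_failures[check] == self.threshold


alerter = DebouncedAlerter(threshold=3)
results = [False, True, False, False, False, False]
print([alerter.record("orders_freshness", ok) for ok in results])
# prints: [False, False, False, False, True, False]
```

The tradeoff is detection delay: with a threshold of three on an hourly check, a real outage surfaces only after about three hours, so more critical assets may warrant a lower threshold.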

That’s It

Just four measly stages. An unhealthy data engineering lifecycle erodes trust in the data and the people responsible for maintaining it. But a healthy data engineering lifecycle lets you ship and improve data pipelines quickly and often, without compromising the quality or timeliness of production data.

If you’re not doing everything laid out in this post, you’re in the same category as almost everyone else. Start small! Add an automated step to your deployment process. Write a data quality test for a particularly troublesome table. Find a way to run a part of your pipeline on your laptop. You don’t need to adopt the lifecycle all at once. Incremental improvements make a big difference on their own, and they compound over time.