What is a Primary Key in Data Engineering?

A Primary Key is a unique identifier for a record in a database table. It is a specific field (or a combination of fields) that ensures each record is distinct, preventing duplicate entries. The Primary Key is crucial for maintaining data integrity, enabling efficient data retrieval, and establishing relationships between tables in relational databases.

In this Glossary entry:

- What is a Primary Key in Data Engineering?
- SQL Example: Using a Primary Key to Define a Table
- Key Functions and Benefits of Using Primary Keys
- Best Practices in Working with Primary Keys
- How Dagster helps manage primary keys
- Dagster Code Example

SQL Example: Using a Primary Key to Define a Table:

    CREATE TABLE Users (
        UserID INT PRIMARY KEY NOT NULL, -- Primary Key with NOT NULL constraint
        Username VARCHAR(50) NOT NULL UNIQUE,
        Email VARCHAR(100) NOT NULL UNIQUE,
        CreatedAt TIMESTAMP DEFAULT CURRENT_TIMESTAMP
    );

This Users table would look like this:

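| UserID | Username | Email | CreatedAt |
|--------|----------|-------|-----------|
| 1 | dagster | dagster@glossary.com | 2024-08-19 10:15:00 |
| 2 | airbyte | airbyte@glossary.com | 2024-08-19 10:30:00 |
| 3 | cube | cube@glossary.com | 2024-08-19 11:00:00 |
| 4 | GX | gx@glossary.com | 2024-08-19 11:45:00 |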

The UserID column serves as the Primary Key: it holds a unique identifier for each user (1, 2, 3, 4), so every row in the table is uniquely identifiable.
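To see the uniqueness guarantee in action, here is a minimal sketch using Python's built-in sqlite3 module with an in-memory database as a stand-in for a production DBMS. The helper name `try_duplicate_insert` is hypothetical, and the table is a trimmed-down version of the Users table above.

```python
import sqlite3


def try_duplicate_insert():
    """Insert two rows with the same primary key and report what happens."""
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    try:
        conn.execute(
            "CREATE TABLE Users (UserID INTEGER PRIMARY KEY, Username TEXT NOT NULL)"
        )
        conn.execute("INSERT INTO Users (UserID, Username) VALUES (1, 'dagster')")
        try:
            # Same UserID as the row above, so the database should refuse it.
            conn.execute("INSERT INTO Users (UserID, Username) VALUES (1, 'airbyte')")
        except sqlite3.IntegrityError as exc:
            return f"rejected: {exc}"
        return "accepted"
    finally:
        conn.close()


print(try_duplicate_insert())
```

The second insert fails with an integrity error rather than silently creating a duplicate row; the exact error text depends on the database engine.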

Key Functions and Benefits of Using Primary Keys:

Primary Keys are required to deliver several critical features of modern databases:

Uniqueness and Data Integrity: The primary function of a Primary Key is to enforce the uniqueness of records in a table, preventing duplicate records and, in turn, data inconsistencies, errors in data processing, and challenges in data management.

Efficient Data Retrieval: Primary Keys significantly enhance the efficiency of data retrieval operations in large datasets. Database systems use the index built on the Primary Key to locate a row directly, without scanning the entire table.

Relationships Between Tables: In relational databases, tables are linked through relationships, such as one-to-one, one-to-many, or many-to-many relationships. The Primary Key in one table can be referenced as a Foreign Key in another table, establishing a link between the two tables. This relationship allows for complex queries that can combine data from multiple tables, enabling a more comprehensive analysis and reporting.

Referential Integrity: By using Primary Keys and Foreign Keys, databases enforce referential integrity, ensuring that relationships between tables are consistent. For example, a Foreign Key in one table must match a Primary Key in another table, preventing orphaned records or broken links between related data. This integrity is crucial for maintaining accurate and reliable data.
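As a rough illustration of referential integrity, the sketch below uses Python's sqlite3 module. The Orders table and the function name `orphan_insert_is_rejected` are hypothetical additions for this example. Note that SQLite only enforces foreign keys once `PRAGMA foreign_keys = ON` has been issued on the connection; other databases typically enforce them by default.

```python
import sqlite3


def orphan_insert_is_rejected():
    """Return True if an order pointing at a non-existent user is refused."""
    conn = sqlite3.connect(":memory:")
    try:
        # SQLite leaves foreign key enforcement off unless asked.
        conn.execute("PRAGMA foreign_keys = ON")
        conn.execute(
            "CREATE TABLE Users (UserID INTEGER PRIMARY KEY, Username TEXT NOT NULL)"
        )
        conn.execute(
            """
            CREATE TABLE Orders (
                OrderID INTEGER PRIMARY KEY,
                UserID INTEGER NOT NULL REFERENCES Users(UserID)
            )
            """
        )
        conn.execute("INSERT INTO Users (UserID, Username) VALUES (1, 'dagster')")
        # Valid: UserID 1 exists in Users.
        conn.execute("INSERT INTO Orders (OrderID, UserID) VALUES (100, 1)")
        try:
            # Invalid: there is no user 999, so this row would be orphaned.
            conn.execute("INSERT INTO Orders (OrderID, UserID) VALUES (101, 999)")
        except sqlite3.IntegrityError:
            return True
        return False
    finally:
        conn.close()


print(orphan_insert_is_rejected())
```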

Indexing and Performance Optimization: Most database management systems automatically create an index on the Primary Key column(s). Indexing improves the speed of query operations, especially for search, sort, and join operations that involve the Primary Key. As a result, databases can handle large volumes of data more effectively, providing faster response times and better performance.
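One way to observe this is to ask the database for its query plan. The sketch below assumes SQLite (via Python's sqlite3 module) and a hypothetical `City` column with no index; the function name `query_plans` is also an assumption. The exact wording of the plan text varies between SQLite versions, so treat the printed output as indicative only.

```python
import sqlite3


def query_plans():
    """Return the plan text for a primary-key lookup and a non-indexed lookup."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE Users (UserID INTEGER PRIMARY KEY, Username TEXT, City TEXT)"
        )

        def plan(sql):
            rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
            # The human-readable description is the last column of each row.
            return " | ".join(row[-1] for row in rows)

        by_key = plan("SELECT * FROM Users WHERE UserID = 42")
        by_other = plan("SELECT * FROM Users WHERE City = 'Oslo'")
        return by_key, by_other
    finally:
        conn.close()


by_key, by_other = query_plans()
print("lookup by primary key:", by_key)
print("lookup by other column:", by_other)
```

The primary-key lookup is reported as a search using the primary key, while the filter on the non-indexed column is reported as a full table scan.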

Consistency in Data Models: In the broader context of data engineering, the use of Primary Keys contributes to the consistency and standardization of data models. By defining clear, unique identifiers for each table, data engineers can create more structured and predictable data architectures, facilitating data integration, migration, and scalability across systems.

Best Practices in Working with Primary Keys:

When selecting a Primary Key, it is important to choose a column (or combination of columns) that will always have a unique value for each record. Common choices include unique IDs, serial numbers, or other natural identifiers like email addresses. In cases where a natural unique identifier does not exist, a surrogate key, often an auto-incrementing number, is used.
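The following sketch illustrates one common pattern: an auto-incrementing surrogate key as the Primary Key, with the natural identifier (here an email address) kept unique through a separate constraint. It assumes SQLite via Python's sqlite3 module, and the function name `demo_surrogate_key` and the replacement address are made up for the example. A practical benefit is that the natural value can change without the key changing.

```python
import sqlite3


def demo_surrogate_key():
    """Create two users, then change one email while its key stays the same."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            """
            CREATE TABLE Users (
                UserID INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key
                Email TEXT NOT NULL UNIQUE                 -- natural identifier
            )
            """
        )
        # The database assigns the key; lastrowid reports the value it chose.
        first_id = conn.execute(
            "INSERT INTO Users (Email) VALUES (?)", ("dagster@glossary.com",)
        ).lastrowid
        second_id = conn.execute(
            "INSERT INTO Users (Email) VALUES (?)", ("cube@glossary.com",)
        ).lastrowid
        # Updating the email does not touch the primary key.
        conn.execute(
            "UPDATE Users SET Email = ? WHERE UserID = ?",
            ("hello@glossary.com", first_id),
        )
        email_after = conn.execute(
            "SELECT Email FROM Users WHERE UserID = ?", (first_id,)
        ).fetchone()[0]
        return first_id, second_id, email_after
    finally:
        conn.close()


print(demo_surrogate_key())
```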

In summary, Primary Keys are essential for maintaining the structure, integrity, and efficiency of relational databases in data engineering. They ensure that each record is unique, enable quick and reliable data retrieval, and support the complex relationships and operations that are central to data-driven applications.

Databases without Primary Keys

So, is it conceivable to have a database that does not use Primary Keys? Surprisingly, the answer is yes.

It is worth noting that modern DBMSs like MySQL, PostgreSQL, SQL Server, Oracle, etc. do allow you to create tables without primary keys, but it’s generally not advised for the many reasons mentioned above.

NoSQL databases handle this differently. In MongoDB you do not have to define a primary key yourself, but every document carries a unique identifier in its _id field, which MongoDB generates automatically if you omit it.

In Key-Value Stores (e.g., Redis), the key itself acts as a unique identifier for the value, somewhat analogous to a primary key, but the concept differs from traditional relational databases.

Wide-column stores (e.g., Cassandra) allow for more flexible schema designs. A Cassandra table does declare a primary key, but it is built from a partition key (plus optional clustering columns) that mainly controls how data is distributed and sorted, so it doesn’t function the same way as a traditional primary key.

As for flat-file databases (opening the age-old debate of "Is Excel a database?"), we should note that CSV files, Excel sheets, etc. don’t inherently have primary keys. However, users often designate a column manually to act as a unique identifier.

How Dagster Helps Manage Primary Keys

Dagster provides a robust framework for data orchestration. Database interactions in a data pipeline benefit from proper management of primary keys. Here's how Dagster helps in this context:

Data Quality and Validation: Dagster allows data engineers to implement data quality checks within their pipelines. This includes validating primary keys to ensure they are unique and not null, which is crucial for maintaining data integrity.

Asset-Oriented Approach: Dagster's asset-based model allows data engineers to define and manage data assets explicitly. When dealing with primary keys, this means you can create assets that represent tables or datasets with primary keys, ensuring that the relationships and constraints are well-defined and maintained.

Traceability and Lineage: Dagster provides features for tracking the lineage of data assets. This helps data engineers trace how primary keys are used and transformed across different stages of the data pipeline. Understanding the lineage of primary keys can be critical for debugging and ensuring data consistency.

Version Control: By storing SQL queries and transformations as code, Dagster enables version control for database operations. This includes operations involving primary keys, allowing data engineers to track changes and revert to previous versions if necessary.

Integration with Databases: Dagster integrates seamlessly with various databases and data warehouses. Data engineers can use Dagster to orchestrate tasks that involve creating, updating, or validating primary keys in these databases.

Scheduling and Automation: Dagster's scheduling capabilities allow data engineers to automate tasks that involve primary keys. For example, you can schedule regular checks to ensure primary keys are unique and consistent across your datasets.

Error Handling and Retries: Dagster's execution engine handles errors and retries, which is useful when dealing with primary keys. If a task fails due to a primary key constraint violation, Dagster can retry the task or alert the data engineer to address the issue.

By leveraging these features, data engineers can ensure that Primary Keys are correctly managed and maintained throughout their data pipelines, leading to more reliable and consistent data operations.
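The data quality point above boils down to two checks: no missing key values and no repeated ones. Here is a framework-agnostic sketch in plain Python; the function name `check_primary_key` and its return shape are assumptions made for this example, not part of Dagster. A function like this could be called from whatever validation step your pipeline uses.

```python
from collections import Counter


def check_primary_key(rows, key="id"):
    """Return (null_count, duplicated_values) for the key column of rows.

    rows is assumed to be a list of dictionaries, one per record.
    """
    values = [row.get(key) for row in rows]
    # A missing key and an explicit None both count as null.
    null_count = sum(1 for value in values if value is None)
    counts = Counter(value for value in values if value is not None)
    duplicated_values = [value for value, n in counts.items() if n > 1]
    return null_count, duplicated_values


rows = [
    {"id": 1, "name": "Alice"},
    {"id": 2, "name": "Bob"},
    {"id": 2, "name": "Bobby"},   # duplicate key
    {"id": None, "name": "Eve"},  # null key
]
null_count, duplicated_values = check_primary_key(rows)
print(null_count, duplicated_values)
```

A pipeline step would typically fail or raise an alert when either value is non-zero or non-empty.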

Dagster Code Example:

Let's build a simple step-by-step workflow to illustrate all this:

    import sqlite3

    from dagster import asset, IOManager, io_manager, OutputContext, InputContext, Definitions


    class MyDatabaseIOManager(IOManager):
        def __init__(self, db_path):
            self.db_path = db_path

        def _connect(self):
            return sqlite3.connect(self.db_path)

        def handle_output(self, context: OutputContext, obj):
            # Assuming obj is a list of dictionaries representing rows,
            # each with an 'id' key; nothing to write if the list is empty
            if not obj:
                return

            # Use the last component of the asset key as the table name
            table_name = context.asset_key.path[-1]
            columns = list(obj[0].keys())
            placeholders = ', '.join('?' * len(columns))
            column_names = ', '.join(columns)

            conn = self._connect()
            try:
                cursor = conn.cursor()

                # Create table if it doesn't exist, with id as the primary key
                cursor.execute(f"""
                    CREATE TABLE IF NOT EXISTS {table_name} (
                        id INTEGER PRIMARY KEY,
                        {', '.join([f'{col} TEXT' for col in columns if col != 'id'])}
                    )
                """)

                # Insert or update rows (upsert keyed on the primary key)
                for row in obj:
                    values = tuple(row[col] for col in columns)
                    cursor.execute(f"""
                        INSERT INTO {table_name} ({column_names})
                        VALUES ({placeholders})
                        ON CONFLICT(id) DO UPDATE SET
                        {', '.join([f'{col}=excluded.{col}' for col in columns if col != 'id'])}
                    """, values)

                conn.commit()
            finally:
                # Close the connection even if a statement fails
                conn.close()

        def load_input(self, context: InputContext):
            # Read back every row of the upstream asset's table
            table_name = context.asset_key.path[-1]
            conn = self._connect()
            try:
                cursor = conn.cursor()
                cursor.execute(f"SELECT * FROM {table_name}")
                return cursor.fetchall()
            finally:
                conn.close()


    @io_manager
    def my_database_io_manager():
        return MyDatabaseIOManager(db_path='my_database.db')

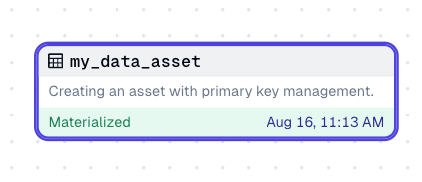

    @asset(io_manager_key="my_database_io_manager", description="Creating an asset with primary key management.")
    def my_data_asset():
        # Example data with primary key 'id'
        data = [
            {'id': 1, 'name': 'Alice', 'email': 'alice@example.com'},
            {'id': 2, 'name': 'Bob', 'email': 'bob@example.com'},
            {'id': 3, 'name': 'Charlie', 'email': 'charlie@example.com'}
        ]
        return data


    defs = Definitions(
        assets=[my_data_asset],
        resources={
            "my_database_io_manager": my_database_io_manager
        }
    )

This Dagster code provides a basic illustration of how to manage Primary Keys and use IO Managers to handle data storage and retrieval within a data pipeline.

Here is what the code does, in a nutshell:

- Asset Execution:
  - When `my_data_asset` is executed, it generates the example data.
  - The data is passed to the `handle_output` method of `MyDatabaseIOManager`.
- Data Storage:
  - The `handle_output` method connects to the SQLite database.
  - It creates the table if it doesn't exist, with `id` as the primary key.
  - It inserts or updates rows in the table based on the `id` column.
- Data Retrieval:
  - When data needs to be read from the database, the `load_input` method is called.
  - It connects to the SQLite database, retrieves all rows from the table, and returns them.

Let's break down the key components:

Dagster Components and Their Roles

- Custom IO Manager (`MyDatabaseIOManager`):
  - This class is responsible for managing the connection to a database or external resource (in this case SQLite) and handling the input and output operations for assets.
  - Initialization: The `__init__` method initializes the IO Manager with the path to the SQLite database.
  - Connection Handling: The `_connect` method establishes a connection to the database.
  - Output Handling (`handle_output`):
    - This method takes the output data (assumed to be a list of dictionaries) and writes it to the database.
    - It creates a table if it doesn't exist, with an `id` column as the primary key.
    - It uses an `INSERT INTO ... ON CONFLICT(id) DO UPDATE` SQL statement to handle upserts, ensuring that if a row with the same primary key (`id`) already exists, it will be updated instead of inserted.
  - Input Handling (`load_input`):
    - This method reads data from the database table and returns it.
- IO Manager Definition (`my_database_io_manager`):
  - This function returns an instance of `MyDatabaseIOManager` with the specified database path.
- Asset Definition (`my_data_asset`):
  - This asset generates example data with a primary key (`id`) and uses the custom IO Manager for storage.
  - The data is a list of dictionaries, each representing a row with an `id`, `name`, and `email`.
- Definitions (`defs`):
  - This object ties together the asset and the IO Manager, making them available for execution within the Dagster framework.

What our Example Illustrates Regarding Primary Keys:

- Primary Key Management:
  - The `handle_output` method in `MyDatabaseIOManager` ensures that the `id` column is treated as the primary key.
  - The SQL statement `INSERT INTO ... ON CONFLICT(id) DO UPDATE` ensures that if a row with the same `id` already exists, it will be updated rather than inserted.
- IO Manager Usage:
  - The custom IO Manager (`MyDatabaseIOManager`) abstracts away the details of how data is stored and retrieved from the SQLite database.
  - The `handle_output` method handles the logic for writing data to the database, including creating the table if it doesn't exist and performing upserts.
  - The `load_input` method handles the logic for reading data from the database, making it available for downstream assets or operations.
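To check the upsert behavior described above without running Dagster, the same SQL pattern can be exercised directly against an in-memory SQLite database. This is a simplified sketch: the `users` table, the two-column schema, and the function names `upsert_rows` and `demo_upsert` are assumptions for illustration, and the `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or newer.

```python
import sqlite3


def upsert_rows(conn, rows):
    """Insert rows, updating the name when a row with the same id already exists."""
    conn.executemany(
        """
        INSERT INTO users (id, name) VALUES (:id, :name)
        ON CONFLICT(id) DO UPDATE SET name = excluded.name
        """,
        rows,
    )


def demo_upsert():
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
        # First run: both ids are new, so both rows are inserted.
        upsert_rows(conn, [{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}])
        # Second run: id 2 already exists and is updated; id 3 is inserted.
        upsert_rows(conn, [{"id": 2, "name": "Robert"}, {"id": 3, "name": "Charlie"}])
        return conn.execute("SELECT id, name FROM users ORDER BY id").fetchall()
    finally:
        conn.close()


print(demo_upsert())  # [(1, 'Alice'), (2, 'Robert'), (3, 'Charlie')]
```

Because the write is keyed on the primary key, re-running the same load does not create duplicate rows, which is what makes re-materializing an asset safe in this design.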