What Are ETL Tools?

ETL (Extract, Transform, Load) tools are software solutions that help organizations manage and process data from multiple sources. They follow a three-step process: extracting data from different systems, transforming it into a structured format, and loading it into a central data repository, such as a database or data warehouse.

In the extraction phase, ETL tools gather raw data from diverse sources such as databases, APIs, or flat files. The transformation phase involves cleaning, filtering, and reformatting this data to meet business or analytics requirements. The load phase transfers the transformed data into its destination, whether that be a data warehouse, cloud storage, or analytics platform.
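
The three phases can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not how any particular ETL tool works internally: the `RAW_CSV` data, the `orders` table, and the `extract`, `transform`, and `load` function names are all assumptions made up for the example, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, API, or database.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
"""


def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount, normalize names, cast types."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # filter out incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().lower(), float(row["amount"]))
        )
    return cleaned


def load(rows, conn):
    """Load: write the transformed rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each phase in its own function makes it possible to test the transformation logic without touching the source or the destination.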

ETL tools are useful for integrating and preparing data for analysis, enabling companies to make informed decisions based on consolidated, high-quality data. They are especially important in environments where data is scattered across multiple systems and needs to be combined for unified reporting or analysis.

Types of ETL Tools

ETL tools can be categorized based on their licensing and access models.

Open-Source ETL Tools

Open-source ETL tools offer comprehensive capabilities without the licensing fees of proprietary solutions. Users benefit from a collaborative development environment, where community-contributed plugins and features continuously enhance the tool's functionality. These tools also allow users to modify and extend the source code.

However, relying on community support may require technical expertise to troubleshoot and customize setups. Organizations must weigh the savings on licensing fees against the potential need for internal expertise or external consultants to support customization and troubleshooting.

Cloud-Based ETL Tools

Cloud-based ETL tools offer significant benefits in terms of scalability, reliability, and reduced IT overhead. They enable organizations to manage large volumes of data efficiently without investing in expensive physical infrastructure.

These tools typically integrate with other cloud services, providing a simplified approach to data processing across distributed environments. Advanced solutions support real-time data streaming, a critical requirement for modern analytics.

Enterprise ETL Tools

Enterprise ETL tools are useful for organizations handling complex, large-scale data environments. They are typically deployed on-premises, and offer features such as advanced data transformation capabilities, comprehensive data integration, and extensive support.

Enterprise tools also provide a scalable architecture that can handle vast volumes of data while maintaining high performance. They emphasize security and compliance, helping protect sensitive data across the ETL pipeline, and the investment often comes with dedicated vendor support.

Custom ETL Solutions

Custom ETL solutions are tailored to meet organizational needs, offering precision and flexibility that standard tools may not provide. These solutions can be developed in-house or by specialized third-party vendors to address unique data processes, integration requirements, and business objectives.

Custom tools are particularly beneficial for industries with complex regulatory requirements or highly specialized data handling needs. However, developing and maintaining custom ETL solutions can be resource-intensive.

Key Features to Consider When Choosing an ETL Tool

When evaluating ETL tools, organizations should consider the following aspects.

Scalability and Performance

A scalable solution can adjust to increasing data requirements without compromising speed or efficiency, which is crucial for businesses looking to grow. High-performance ETL tools complete data processes within the desired timeframes, supporting timely analytics and decision-making.
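
One common way pipelines stay fast as volumes grow is to process records in fixed-size batches so memory use stays bounded regardless of input size. The sketch below illustrates that idea under simple assumptions: `batched`, `run_in_batches`, and the `load_batch` callback are hypothetical names for this example, and `range(10)` stands in for a much larger source.

```python
from itertools import islice


def batched(iterable, size):
    """Yield lists of at most `size` items without materializing the whole input."""
    it = iter(iterable)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def run_in_batches(source, size, load_batch):
    """Feed the source to `load_batch` one batch at a time; return the row count."""
    total = 0
    for batch in batched(source, size):
        load_batch(batch)
        total += len(batch)
    return total


loaded_batches = []
count = run_in_batches(range(10), 4, loaded_batches.append)
print(count, loaded_batches)
```

The batch size becomes a tuning knob: larger batches reduce per-call overhead, while smaller ones lower memory pressure and shorten retries after a failure.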

Ease of Use and Learning Curve

A tool with an intuitive interface reduces the learning curve, allowing users to become proficient quickly. Modern ETL tools offer visual interfaces, extensive documentation, and user-friendly design, enabling smoother onboarding and reducing training costs. For companies without specialized IT departments, these features are particularly beneficial.

Integration Capabilities

An ETL tool should integrate with various data sources, including databases, applications, and legacy systems, to centralize data efficiently. Organizations should seek ETL solutions that offer extensive connectivity options: prebuilt data connectors, API integrations, and support for proprietary and legacy systems.

Data Transformation Functions

Data transformation functions convert raw data into a form that can yield actionable insights. Effective transformation includes data cleaning, formatting, aggregation, and enrichment, ensuring data meets business intelligence requirements. Tools with strong transformation capabilities can execute complex logic to prepare data efficiently for analysis. Some ETL tools provide scripting languages or transformation libraries for advanced scenarios.
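
The four kinds of transformation named above (cleaning, formatting, enrichment, aggregation) can be shown as small composable functions. This is only a sketch: the `raw_events` records, the `REGION_BY_COUNTRY` lookup, and every function name are invented for illustration.

```python
from collections import defaultdict

# Hypothetical raw events and a lookup table used for enrichment.
raw_events = [
    {"user": " Ann ", "country": "de", "spend": "10.50"},
    {"user": "bob", "country": "US", "spend": "4"},
    {"user": "ann", "country": "DE", "spend": "2.25"},
    {"user": "", "country": "US", "spend": "9.99"},  # missing user
]
REGION_BY_COUNTRY = {"DE": "EMEA", "US": "AMER"}


def clean(rows):
    """Cleaning: drop records that have no user."""
    return [r for r in rows if r["user"].strip()]


def fmt(rows):
    """Formatting: normalize casing and whitespace, cast spend to a number."""
    return [
        {
            "user": r["user"].strip().lower(),
            "country": r["country"].upper(),
            "spend": float(r["spend"]),
        }
        for r in rows
    ]


def enrich(rows):
    """Enrichment: attach a region looked up from the country code."""
    return [{**r, "region": REGION_BY_COUNTRY.get(r["country"], "UNKNOWN")} for r in rows]


def aggregate(rows):
    """Aggregation: total spend per (user, region)."""
    totals = defaultdict(float)
    for r in rows:
        totals[(r["user"], r["region"])] += r["spend"]
    return dict(totals)


spend_by_user = aggregate(enrich(fmt(clean(raw_events))))
print(spend_by_user)
```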

Security and Compliance

Tools must ensure data protection through strong encryption, access control, and audit trails across data processing stages. For industries like healthcare and finance, compliance with regulations such as GDPR, HIPAA, or industry-specific standards is vital. Tools should support adherence to these requirements, reducing the burden on organizations to remain compliant.

Notable ETL Tools

1. Dagster

Dagster is an open-source data orchestration platform for developing, running, and observing data assets across their lifecycle. It offers a declarative programming model, integrated lineage and observability, data validation checks, and strong testability.

License: Apache-2.0 | Repo: https://github.com/dagster-io/dagster/ | GitHub stars: 11K+ | Contributors: 400+

Key features:

- Data asset-centric: Focuses on representing data pipelines in terms of the data assets (e.g., tables, machine learning models, etc.) that they generate, yielding an intuitive, declarative mechanism for building ETL pipelines.

- Observability and monitoring: Features a robust logging system, with built-in data catalog, asset lineage, and quality checks.

- Cloud-native and cloud-ready: Provides a managed offering with robust, managed infrastructure, elegant CI/CD capability, and multiple deployment options for custom infrastructure.

- Integrations: Extensive library of integrations with the most popular data tools, including the leading cloud providers (AWS, GCP, Azure) and ETL tools (Fivetran, Airbyte, dlt, Sling, etc.).

- Flexible: As a Python-based data orchestrator, Dagster affords you the full power of Python. It also lets you execute any arbitrary code in other programming languages and on external (remote) environments, while retaining Dagster’s best-in-class observability, lineage, cataloging, and debugging capabilities.

- Declarative Automation: This feature lets you go beyond cron-based scheduling and intelligently orchestrate your pipelines using event-driven conditions that consider the overall state of your pipeline and upstream data assets. This reduces redundant computations and ensures your data is always up-to-date based on business requirements and SLAs, instead of arbitrary time triggers.
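
The asset-centric idea can be illustrated without Dagster itself: each asset declares which upstream assets it depends on, and a runner derives the execution order from those declarations. The sketch below uses only Python's standard library and is not Dagster's API; the `ASSETS` registry, the asset names, and `materialize_all` are hypothetical.

```python
from graphlib import TopologicalSorter


def raw_orders():
    return [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 5.0}]


def clean_orders(raw_orders):
    return [o for o in raw_orders if o["amount"] >= 10]


def revenue(clean_orders):
    return sum(o["amount"] for o in clean_orders)


# Hypothetical registry: each asset names its upstream assets and its compute function.
ASSETS = {
    "raw_orders": {"deps": [], "fn": raw_orders},
    "clean_orders": {"deps": ["raw_orders"], "fn": clean_orders},
    "revenue": {"deps": ["clean_orders"], "fn": revenue},
}


def materialize_all(assets):
    """Compute every asset after its upstream assets, passing results downstream."""
    graph = {name: set(spec["deps"]) for name, spec in assets.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        spec = assets[name]
        results[name] = spec["fn"](*(results[dep] for dep in spec["deps"]))
    return results


results = materialize_all(ASSETS)
print(results["revenue"])
```

Because the dependencies are declared rather than hard-coded into a script, the same registry also yields lineage: the upstream chain of any asset can be read directly from `deps`.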

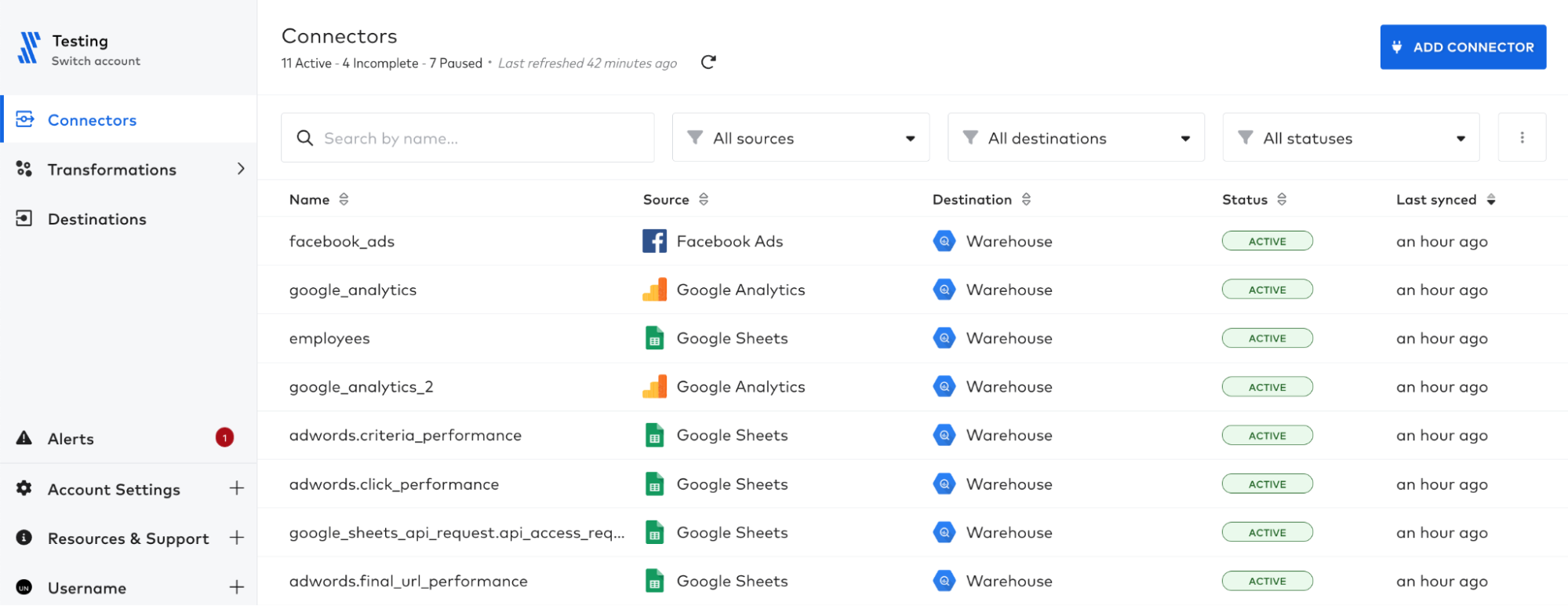

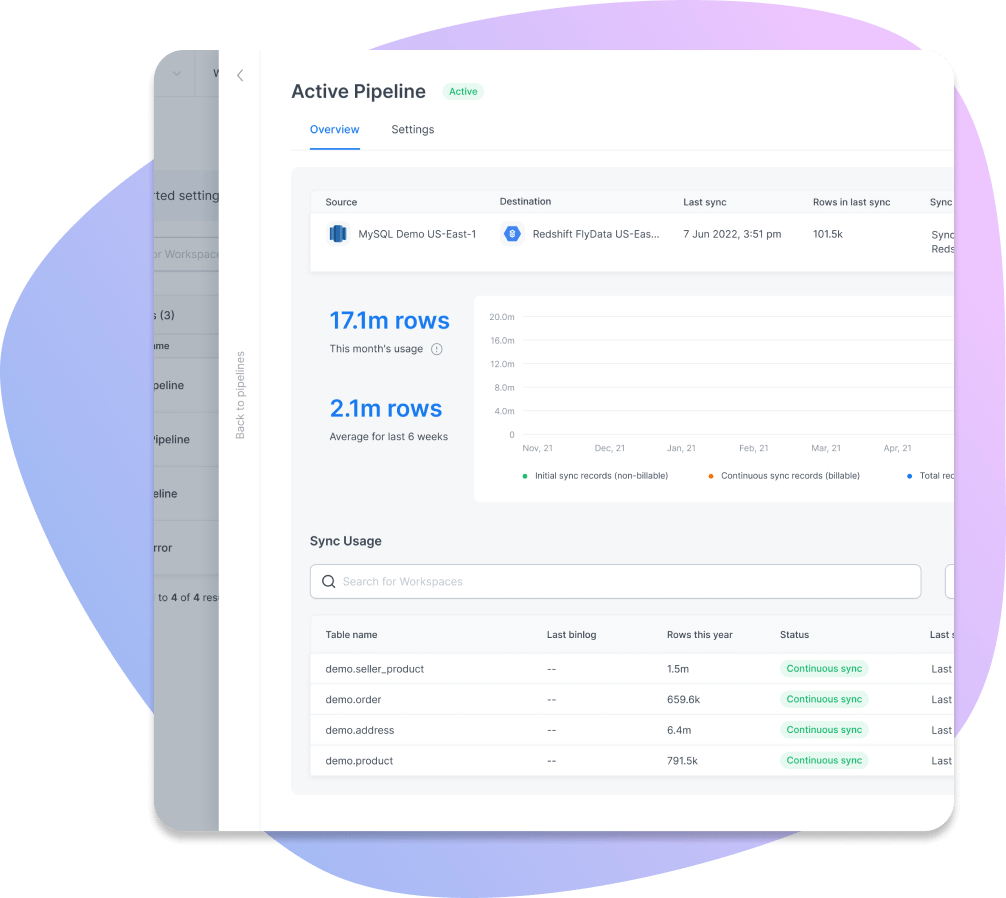

2. Fivetran

Fivetran is a fully managed ETL and ELT platform that simplifies data integration by automating the extraction and loading of data from diverse sources into a central location. With its focus on ease of use, Fivetran eliminates the need for manual intervention in managing data pipelines.

License: Commercial

Key features:

- Prebuilt connectors: Provides access to over 400 prebuilt connectors for integration with various data environments. Users can also build custom connectors or use Lite connectors for specialized needs.

- Automated schema management: Fivetran automatically adjusts to schema changes (schema drift), keeping your data clean and organized without manual effort.

- Fully managed pipelines: Handles issues like source changes or connectivity problems automatically, reducing the need for internal IT involvement.

- High-performance data movement: Ensures fast and reliable data transfer, even during high-volume processing, minimizing latency and system impact.

- Security and transparency: Enhances security with encryption and provides visibility into data flow, ensuring data integrity throughout the process.
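
To make schema drift concrete, here is a minimal sketch of one way a loader could adapt when a source starts sending a new field: it compares incoming keys with the destination's columns and adds whatever is missing. This is not Fivetran's implementation; `sync_rows`, the `users` table, and the sample rows are assumptions for the example, with SQLite standing in for the destination.

```python
import sqlite3


def sync_rows(conn, table, rows):
    """Insert rows, adding any columns the destination has not seen before.

    Table and column names are interpolated into SQL, so this sketch assumes
    they come from a trusted source.
    """
    existing = {col[1] for col in conn.execute(f"PRAGMA table_info({table})")}
    for row in rows:
        for column in row:
            if column not in existing:
                # Schema drift: the source added a field, so add a matching column.
                conn.execute(f'ALTER TABLE {table} ADD COLUMN "{column}"')
                existing.add(column)
        cols = ", ".join(f'"{c}"' for c in row)
        placeholders = ", ".join("?" for _ in row)
        conn.execute(
            f"INSERT INTO {table} ({cols}) VALUES ({placeholders})", list(row.values())
        )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
sync_rows(conn, "users", [{"id": 1, "name": "ann"}])
sync_rows(conn, "users", [{"id": 2, "name": "bob", "plan": "pro"}])  # new "plan" field

columns = [col[1] for col in conn.execute("PRAGMA table_info(users)")]
rows = conn.execute("SELECT id, name, plan FROM users ORDER BY id").fetchall()
print(columns, rows)
```

Rows loaded before the change keep a NULL in the new column, which is the usual trade-off of additive schema evolution.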

3. Matillion

Matillion is a cloud-native data integration platform designed to help data teams build and manage data pipelines efficiently in modern cloud environments. It supports both code-first and visual development, enabling data from any source to be loaded into Snowflake, Redshift, or Databricks.

License: Commercial

Key features:

- Cloud-native ELT: Executes transformations in-database for scalability, performance, and cost control.

- Flexible development options: Offers both a visual workflow designer and support for high-code ETL with Python, SQL, and dbt Core.

- Broad data connectivity: Includes pre-built, custom, and community connectors to ingest data from virtually any source.

- Reverse ETL: Provides built-in data activation, pushing transformed data back into business tools and operational systems.

- Virtual Data Engineering: Augments the features above with Maia, which Matillion describes as a purpose-built AI data workforce.
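
The in-database (ELT) pattern mentioned above means loading raw data first and then running the transformation as SQL inside the target engine, rather than in a separate processing tier. The sketch below shows the pattern only and is not Matillion's implementation: SQLite stands in for a cloud warehouse, and the `raw_sales` and `sales_by_region` tables are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse

# Load: land the raw records untouched, including text-typed amounts and gaps.
conn.execute("CREATE TABLE raw_sales (region TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_sales VALUES (?, ?)",
    [("north", "100.5"), ("south", "40"), ("north", "9.5"), ("south", None)],
)

# Transform: the casting, filtering, and aggregation run inside the database engine.
conn.execute(
    """
    CREATE TABLE sales_by_region AS
    SELECT region, SUM(CAST(amount AS REAL)) AS total
    FROM raw_sales
    WHERE amount IS NOT NULL
    GROUP BY region
    """
)

summary = conn.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
print(summary)
```

Keeping the raw table around means the transformation can be rewritten and rerun later without extracting from the source again.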

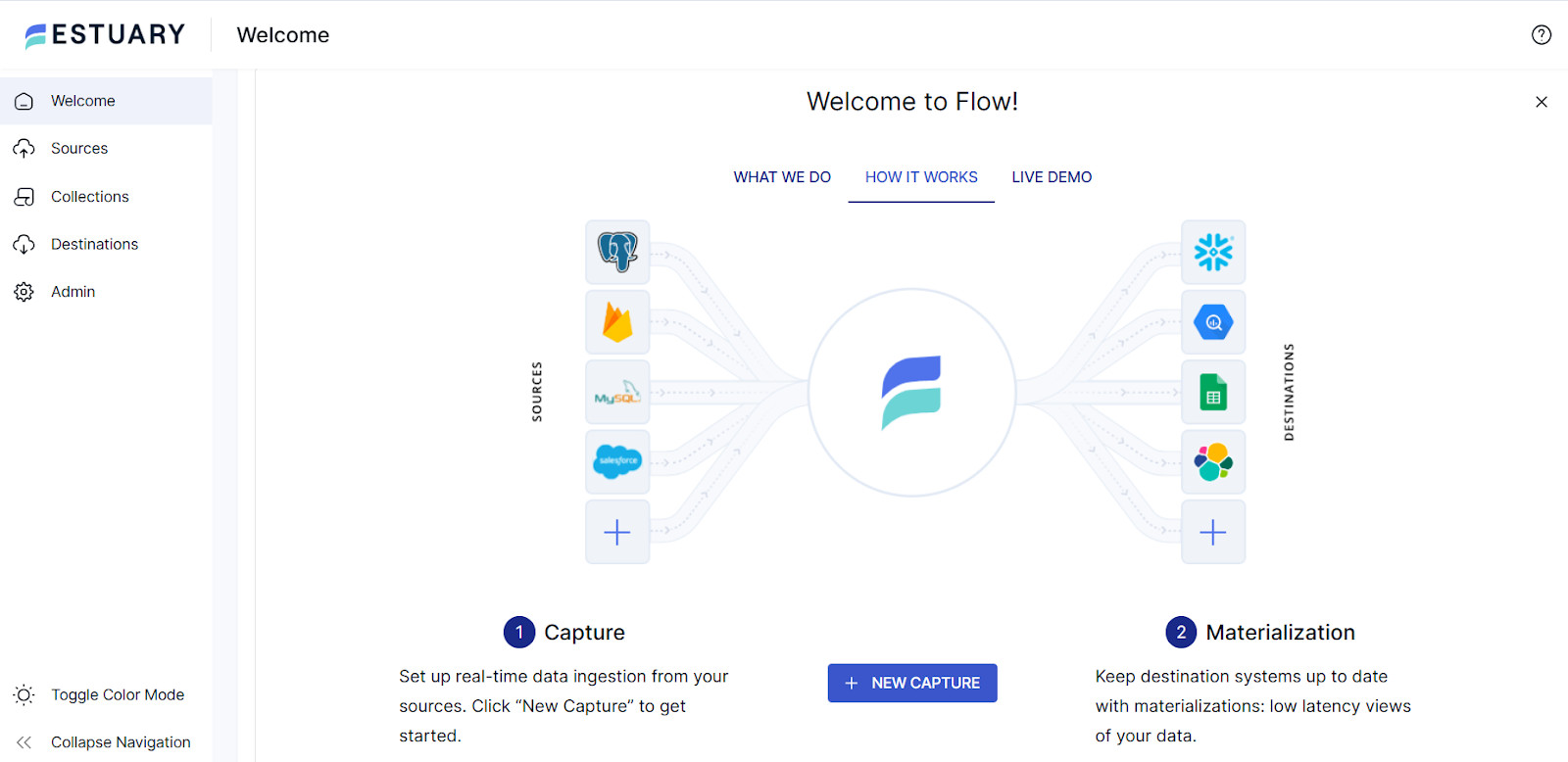

4. Estuary

Estuary Flow is a real-time data integration platform designed to unify batch and streaming ETL into a single, scalable system. Built for performance and flexibility, it enables data engineers to capture, transform, and move data with millisecond latency using a combination of SQL, TypeScript, and low-code configurations.

License: Business Source License (BSL) + Apache 2.0 (partial) | Repo: https://github.com/estuary/flow | GitHub stars: 700+

Key features:

- Millisecond-latency pipelines: Estuary enables real-time ingestion and delivery of data with millisecond-level latency, making it ideal for powering operational analytics, automation, and AI use cases. Users can also configure custom batch intervals for predictable scheduling.

- Unified streaming + batch ETL: Unlike traditional ETL tools, Estuary Flow treats streaming and batch data as first-class citizens in the same pipeline, helping teams consolidate tooling and reduce complexity.

- Optimized in-house connectors: Estuary offers a growing library of production-grade, in-house connectors for major databases, APIs, and cloud platforms. These are deeply optimized for each system, reducing ingestion costs and maximizing reliability when syncing with destinations like Snowflake, BigQuery, and Databricks.

- Schema evolution + resilience: Pipelines are automatically resilient to schema drift, thanks to built-in schema inference and evolution. This allows seamless handling of changes in source data without downtime or manual intervention.

- Robust transformation layer: Transform and validate data in real time using standard SQL or statically typed TypeScript. Developers can also integrate custom logic and control pipelines programmatically using the flowctl CLI or the intuitive web UI.

- Flexible deployments: Estuary Flow supports fully managed SaaS, Private Cloud, and Bring Your Own Cloud (BYOC) deployments, offering data residency, compliance, and security options for enterprise use cases.

- Transparent pricing: Pricing is based on data volume and the number of active connector instances, with a free plan available for small-scale pipelines and evaluations.

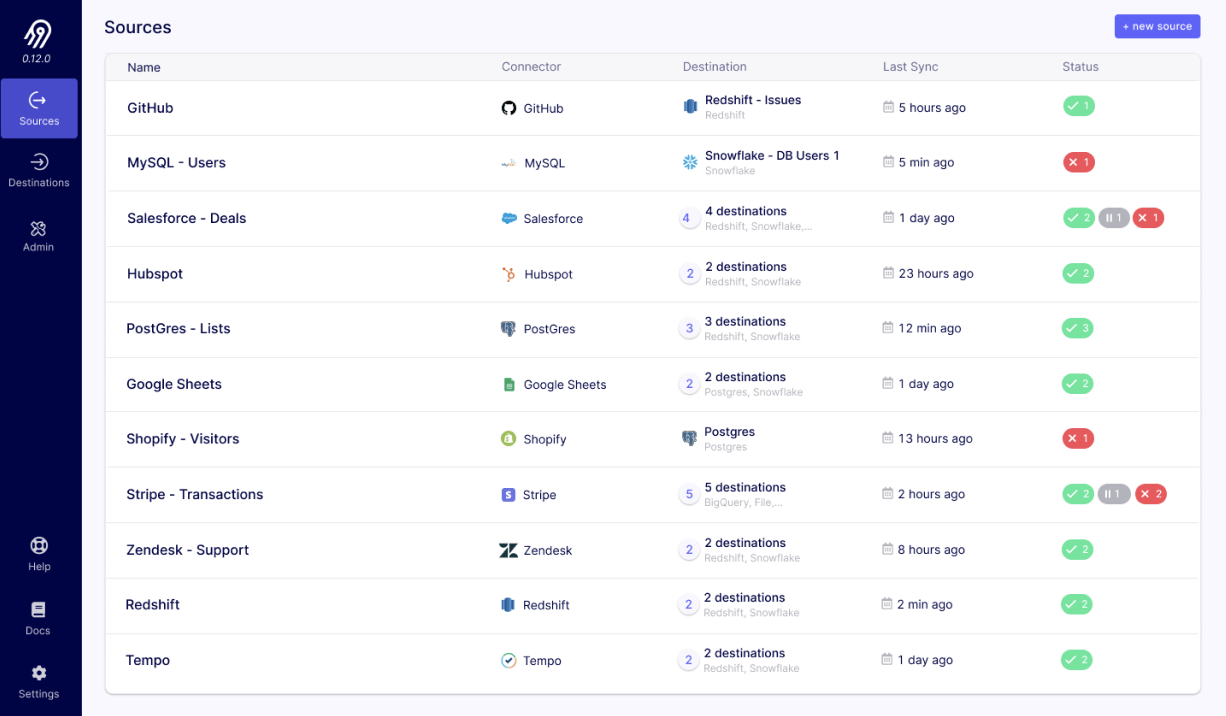

5. Airbyte

Airbyte is an open-source ELT platform designed to handle a range of data integration needs. It simplifies the process of extracting, loading, and transforming data by offering over 400 connectors that support various sources and destinations.

License: MIT, ELv2 | Repo: https://github.com/airbytehq/airbyte | GitHub stars: 16K+ | Contributors: 900+

Key features:

- Self-serve data extraction: Airbyte makes it possible to extract data from sources without writing any code and without complex integration efforts.

- Predictable data loading: Users can load data into multiple destinations like warehouses, lakes, and databases. Airbyte handles data typing and deduplication, and integrates with tools like dbt, Airflow, and Dagster for streamlined data processing.

- No-code and low-code connector builder: Users can build custom connectors in minutes using Airbyte’s no-code or low-code Connector Development Kit (CDK), allowing non-engineers to create integrations without needing a local development environment.

- Scalable ELT platform: Offers flexible synchronization frequencies (from 5 minutes to 24 hours) and manages source schema changes.

- Built-in integrations: Extracts data from over 400 sources, including APIs, databases, and files, with high-speed data replication. Supports incremental updates and captures changes from databases, making it suitable for continuous data synchronization.
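
Incremental updates are typically driven by a cursor: the sync remembers the highest value of some monotonically increasing field and, on the next run, reads only rows beyond it. The following sketch shows that general technique and is not Airbyte's implementation; `incremental_sync`, the `updated_at` cursor field, and the in-memory `source` and `destination` are assumptions for illustration.

```python
def incremental_sync(source_rows, destination, state, cursor_field="updated_at"):
    """Copy only rows newer than the saved cursor, then advance the cursor."""
    last_seen = state.get("cursor")
    new_rows = [
        r for r in source_rows if last_seen is None or r[cursor_field] > last_seen
    ]
    for row in sorted(new_rows, key=lambda r: r[cursor_field]):
        destination[row["id"]] = row  # upsert keyed on the primary key
        state["cursor"] = row[cursor_field]
    return len(new_rows)


# ISO-8601 date strings compare correctly as plain strings.
source = [
    {"id": 1, "name": "ann", "updated_at": "2024-01-01"},
    {"id": 2, "name": "bob", "updated_at": "2024-01-02"},
]
destination, state = {}, {}

first = incremental_sync(source, destination, state)  # initial run copies everything
source.append({"id": 1, "name": "ann b.", "updated_at": "2024-01-03"})
second = incremental_sync(source, destination, state)  # only the changed row
print(first, second, state)
```

Upserting by primary key is what gives deduplication here: the updated record for `id` 1 replaces the earlier version instead of appearing twice.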

6. Meltano

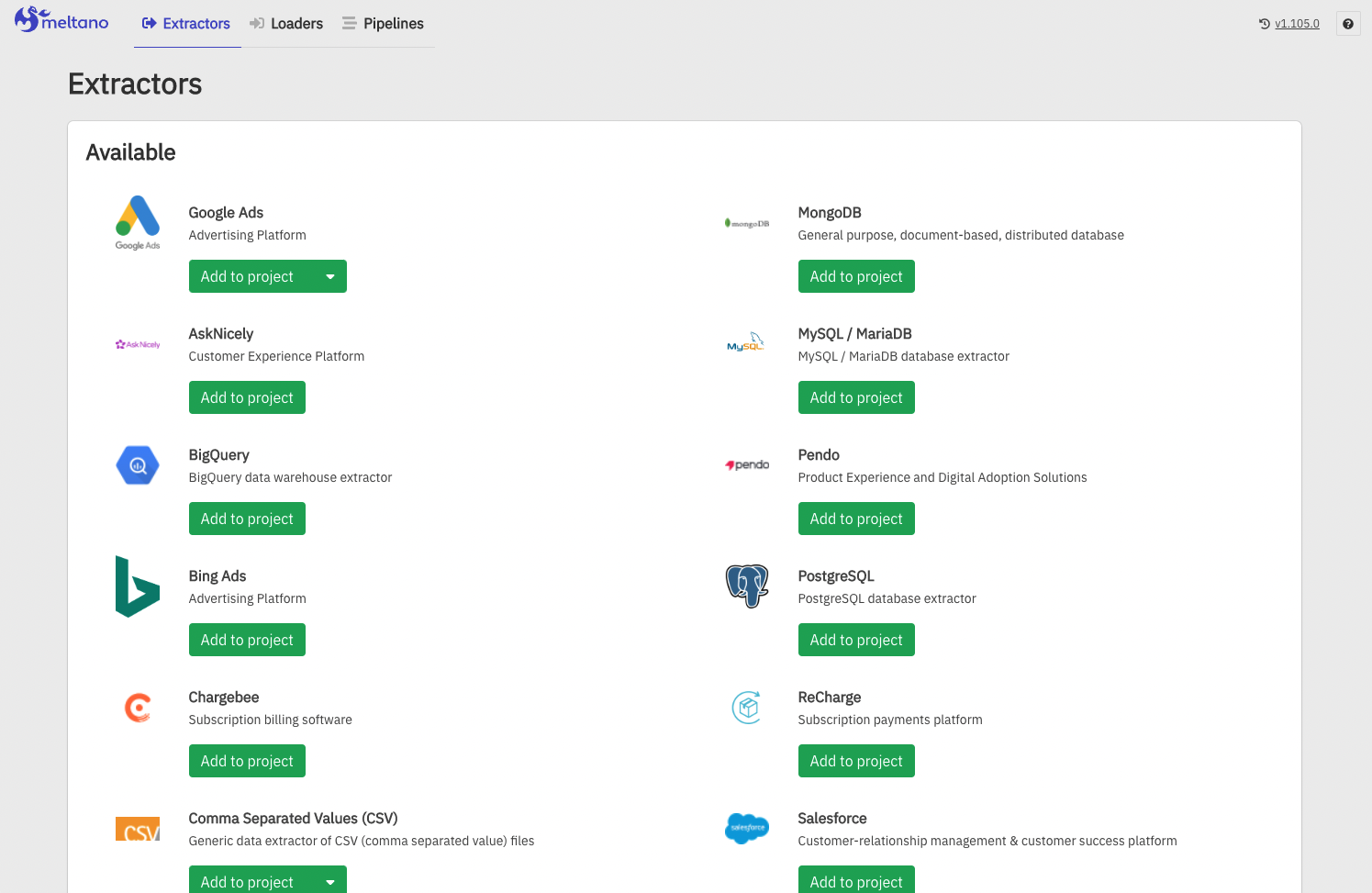

Meltano is an open-source ELT platform that allows data engineers to build, manage, and customize data pipelines with full visibility and control. Its CLI-driven approach integrates with version control, offering a transparent and testable environment for managing data workflows.

License: MIT | Repo: https://github.com/meltano/meltano | GitHub stars: 1K+ | Contributors: 100+

Key features:

- Source and destination support: Offers over 600 connectors for databases, SaaS APIs, files, and custom sources, ensuring data can be extracted and loaded into any destination, including data lakes, warehouses, and vector databases.

- Customizable connectors: Users can build and modify connectors using the Meltano SDK, allowing for rapid creation of connectors tailored to niche or internal systems without waiting on external support.

- Centralized data pipeline management: Manages all data pipelines in a single place, integrating databases, SaaS apps, Python scripts, and data tools like dbt.

- CLI-driven workflow: Helps create, test, and manage pipelines as code through a command-line interface that integrates with version control for easy collaboration, rollback, and debugging.

- Transparent pipeline monitoring: Provides detailed logs and visibility into pipeline operations, allowing for quick debugging.

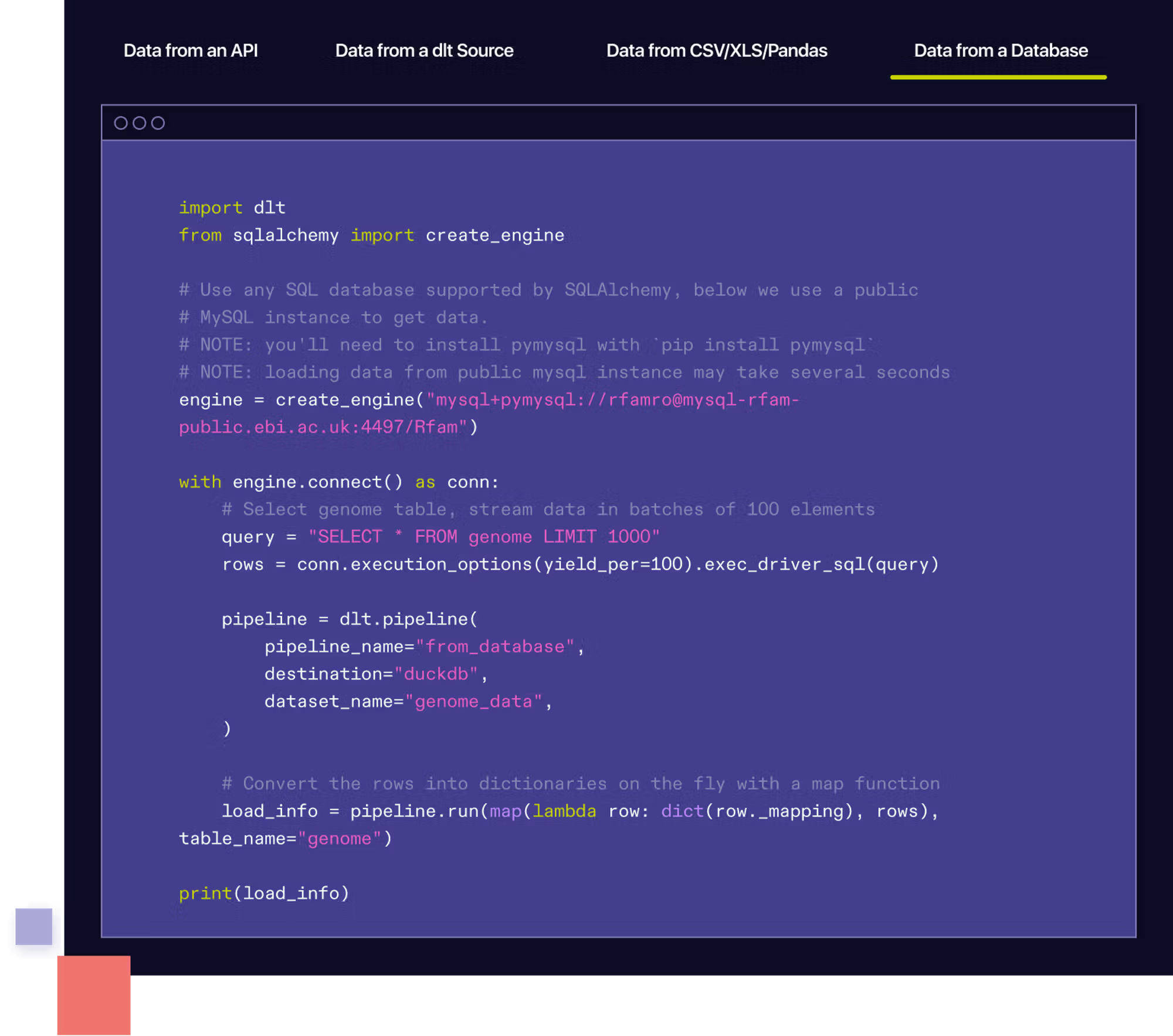

7. dlt

dlt (data load tool) is an open-source Python library that simplifies the ELT process, offering scalability and ease of use for data engineers and analysts. It helps users manage data pipelines efficiently, from extraction through loading into various destinations and subsequent transformation.

License: Apache-2.0 | Repo: https://github.com/dlt-hub/dlt | GitHub stars: 2K+ | Contributors: 70+

Key features:

- Ease of use: Can be deployed anywhere Python runs, without the need to configure Docker containers or set up complex infrastructure.

- Prebuilt connectors: Offers a range of prebuilt connectors for common data sources and destinations, minimizing setup time and effort.

- Code-driven workflows: Enables users to define workflows programmatically, ensuring flexibility and control over data processing pipelines.

- Flexible: Native support for schema evolution and data contracts ensures your pipelines can support rapidly evolving sources with changing schemas.

- Error handling: Includes detailed error tracking and recovery mechanisms, reducing pipeline downtime and ensuring data integrity.

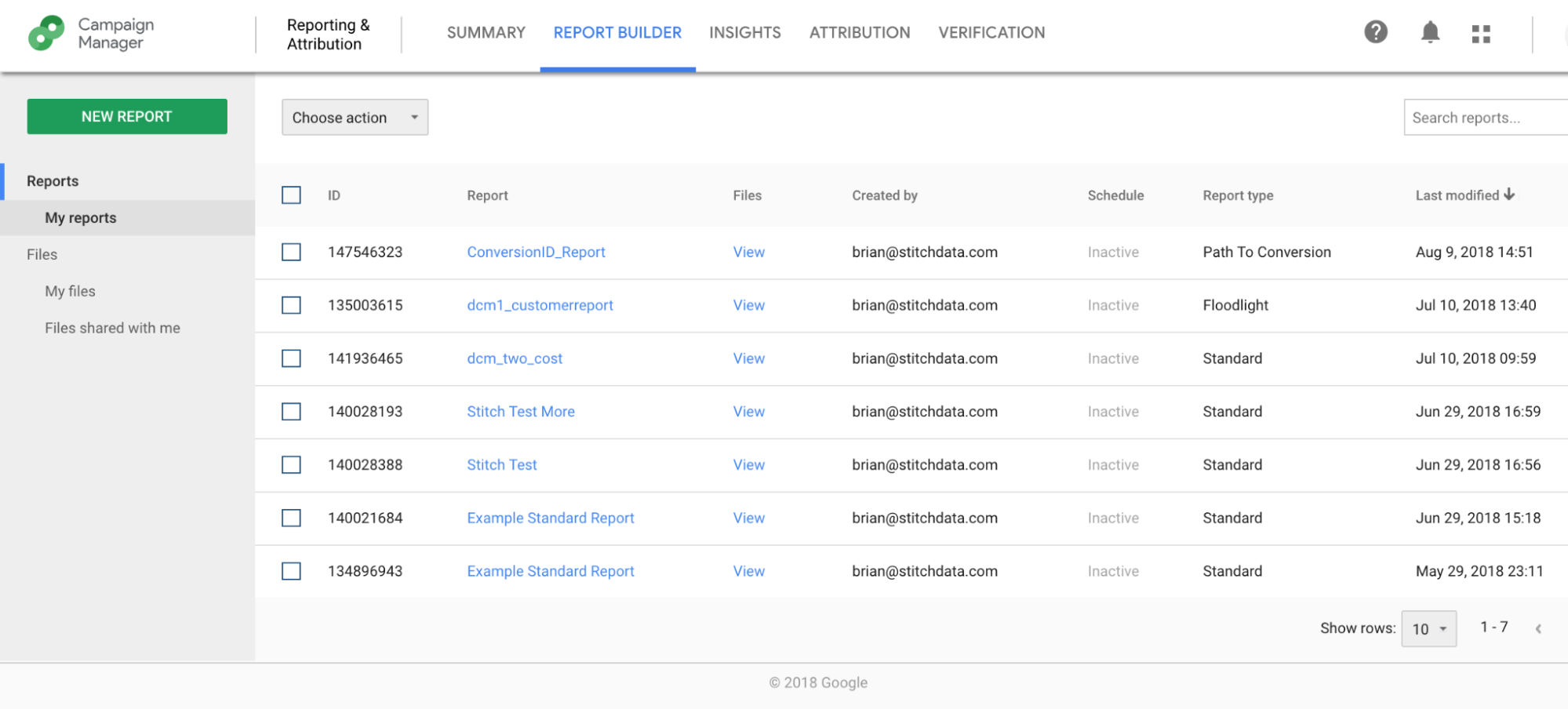

8. Stitch

Stitch is a cloud-based ETL platform that automates the extraction and loading of data, enabling organizations to centralize their data from various sources into a single data repository. With an emphasis on ease of use, Stitch allows data teams to set up and manage data pipelines without requiring IT expertise.

License: Commercial

Key features:

- Prebuilt data connectors: Connects to over 140 popular data sources, including Google Analytics, Salesforce, HubSpot, and Shopify, with no coding required.

- Automated data pipelines: Automatically updates and maintains data pipelines, eliminating the need for ongoing maintenance and freeing teams to focus on analysis.

- Centralized data for insights: Consolidates data from multiple systems into a single destination, creating a reliable and analysis-ready single source of truth.

- Fast setup: Deploys data pipelines in minutes, helping quickly move data from source to destination.

- Enterprise-grade security: SOC 2 Type II certified and compliant with HIPAA, GDPR, CCPA, and ISO/IEC 27001, ensuring data security and compliance.

9. Integrate.io

Integrate.io is a low-code ETL platform designed to automate data processes, helping businesses efficiently integrate, prepare, and transform data. It allows users to automate manual tasks like file preparation, Salesforce data integration, and database replication.

License: Commercial

Key features:

- Low-code data pipelines: Automates complex data preparation and transformation processes through a drag-and-drop interface, eliminating the need for extensive coding or manual effort.

- Bi-directional Salesforce integration: Integrates and syncs Salesforce data with other systems through a bi-directional connector.

- Automated file data preparation: Automates the ingestion, cleaning, and normalization of file-based data for smooth integration into business workflows.

- REST API ingestion: Offers API ingestion capabilities, allowing users to pull data from virtually any API without technical limitations.

- 60-second CDC database replication: Supports data products with continuous, real-time Change Data Capture (CDC) replication, ensuring up-to-the-minute data accuracy.

10. IBM DataStage

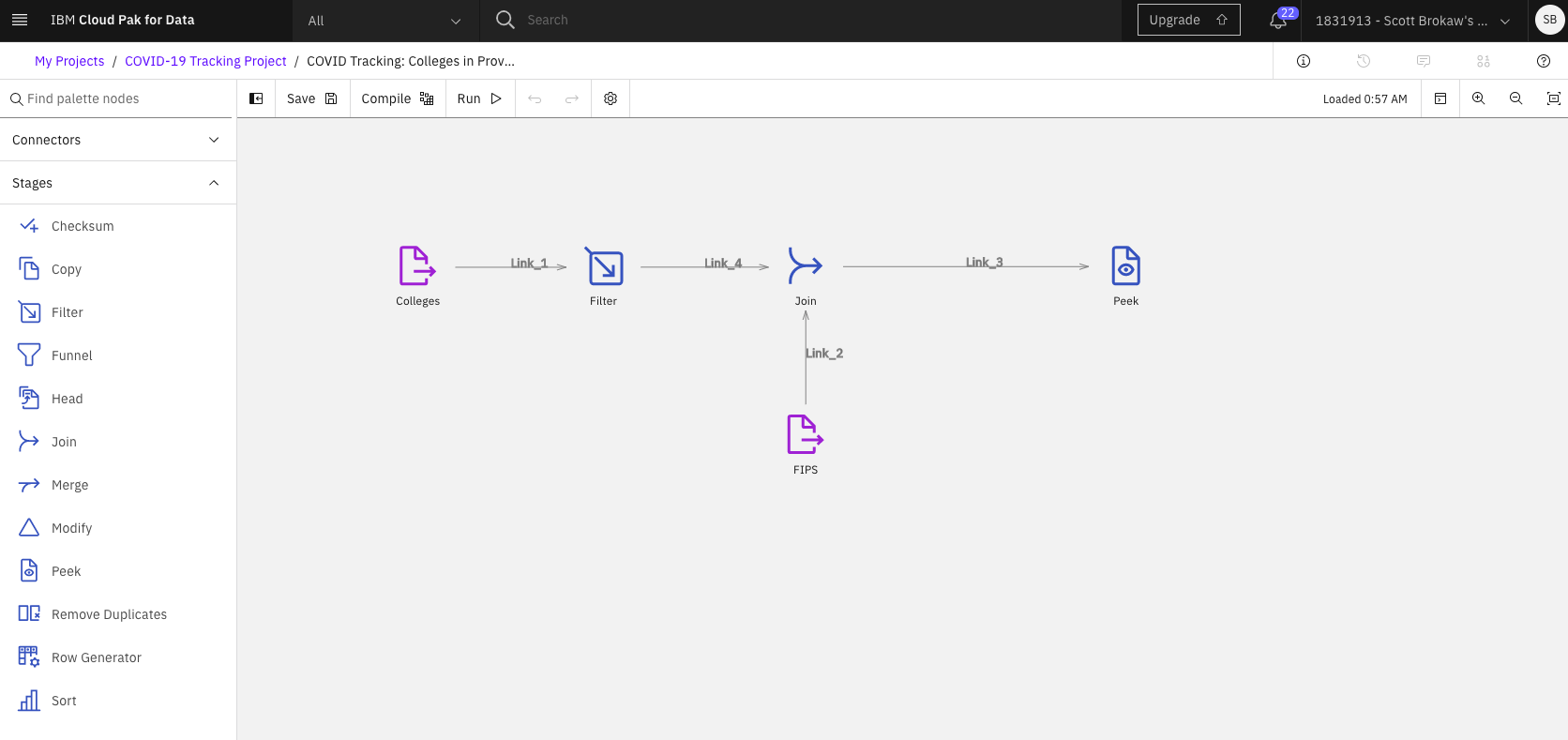

IBM DataStage is an AI-powered data integration platform that enables businesses to design, develop, and execute ETL and ELT pipelines efficiently. Built for multi-cloud and on-premises environments, DataStage integrates seamlessly with IBM Cloud Pak for Data, allowing for high-performance data transformations, scalability, and data governance.

License: Commercial

Key features:

- Multi-cloud and on-premises deployment: Runs ETL/ELT jobs on any cloud or on-premises with a hybrid architecture.

- AI-powered automation: Leverages machine learning-assisted design to automate data pipeline development and deployment, improving productivity and reducing development costs.

- Parallel processing engine: Processes large volumes of data at scale with IBM’s parallel engine, optimizing workload execution and balancing resources for high throughput.

- In-flight data quality: Ensures data integrity by automatically resolving quality issues using IBM InfoSphere QualityStage as data moves through the pipeline.

- Extensive connectivity: Offers prebuilt connectors to integrate data from cloud sources and data warehouses, including IBM Db2 and Netezza, reducing the need for custom connectors.

Bonus - Oracle Data Integrator

Oracle Data Integrator (ODI) is a data integration platform that supports both ETL and ELT processes. Designed for complex data environments, ODI offers high-performance data movement, transformation, and governance while leveraging existing database resources.

License: Commercial

Key features:

- Declarative design: Uses declarative rules like mappings, joins, filters, and constraints to simplify development and enhance productivity.

- ELT architecture: Minimizes data movement by pushing transformations to the target database, eliminating the need for a middle-tier engine and reducing network traffic.

- Knowledge modules: Offers prebuilt and customizable templates to handle various data integration tasks such as extraction, loading, integration, and data quality management.

- Connectivity: Integrates data from a range of sources including databases, files, message queues, and APIs through a unified interface.

- Change data capture (CDC): Automatically captures and integrates data changes in real time using triggers or log-based mechanisms, ensuring timely updates for mission-critical data.
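
Trigger-based change data capture can be demonstrated with any database that supports triggers: each insert, update, or delete on the source table writes a row to a change table that a downstream process can read. The sketch below uses SQLite to show the mechanism and is not how ODI implements it; the `customers` and `customers_changes` tables and the trigger names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

    -- Change table: one row per captured operation, in commit order.
    CREATE TABLE customers_changes (
        change_id INTEGER PRIMARY KEY AUTOINCREMENT,
        op TEXT,
        id INTEGER,
        email TEXT
    );

    CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
        INSERT INTO customers_changes (op, id, email) VALUES ('I', NEW.id, NEW.email);
    END;

    CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
        INSERT INTO customers_changes (op, id, email) VALUES ('U', NEW.id, NEW.email);
    END;

    CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
        INSERT INTO customers_changes (op, id, email) VALUES ('D', OLD.id, OLD.email);
    END;
    """
)

# Ordinary writes to the source table; the triggers record each one.
conn.execute("INSERT INTO customers VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'a2@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

changes = conn.execute(
    "SELECT op, id, email FROM customers_changes ORDER BY change_id"
).fetchall()
print(changes)
```

Triggers add write overhead to the source table, which is one reason log-based capture is often preferred for high-volume systems.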

Conclusion

ETL tools play a critical role in helping organizations manage the complexity of data integration from various sources, ensuring the data is clean, consistent, and ready for analysis. By automating the extract, transform, and load processes, these tools enable businesses to make data-driven decisions more efficiently and reduce the burden of manual data handling.

As a data-centric data orchestrator, Dagster allows data teams to augment the capabilities of their ETL tools by providing a rich set of observability, cataloging, lineage, and orchestration features, connecting your ETL tools and the downstream processes that depend on them.

It also features integrations with leading providers like Fivetran and Airbyte, and a built-in toolkit for data ingestion using the most popular open-source data integration libraries.

To learn more about building ETL pipelines with Dagster, see this rundown of ELT Options in Dagster.