The future of data platforms are composable, unified, and leveraged

Every company has a data platform. All of the production data pipelines that produce all of the data assets that drive your business are managed by this platform. Heterogeneity is the norm: many tools, many sources of data, many storage engines, many personas, many use cases. - Nick Schrock, Founder and CTO of Dagster Labs

Data platform Week Keynote from Nick Schrock , Founder and CTO of Dagster Labs

We recently hosted Data Platform Week, a series of online events where our friends and partners joined us in sharing our vision for data platforms and how we are working to make that vision a reality.

Successful data platforms require both technological and organizational changes, moving away from fragmented or overly restrictive approaches toward a balanced model that enables both practitioner autonomy and a unified control plane.

We included several features in our Dagster 1.9 release that make our vision of the Unified Control Plane a reality.

- Core Orchestration with General Availability of Declarative Automation

- The Integrations Ecosystem and The General Availability of Pipes

- Orchestration Unification Federation and the experimental release of Airlift

- Data platform system of record and the release of Business Intelligence tool integrations.

Orchestration is the backbone of any scalable data platform. It's not just about running jobs - it's about coordinating data assets, managing state, and maintaining control as your platform grows. Without orchestration, data teams get trapped in endless cycles of firefighting and manual intervention, while infrastructure costs spiral upward as systems become increasingly inefficient.

Core Orchestration

Watch the full talk here.

Data pipeline complexity results in tribal knowledge and interwoven dependencies that are difficult to maintain. Without proper orchestration, teams find themselves bogged down by impedance mismatches and manual intervention, making it increasingly difficult to scale their data operations effectively.

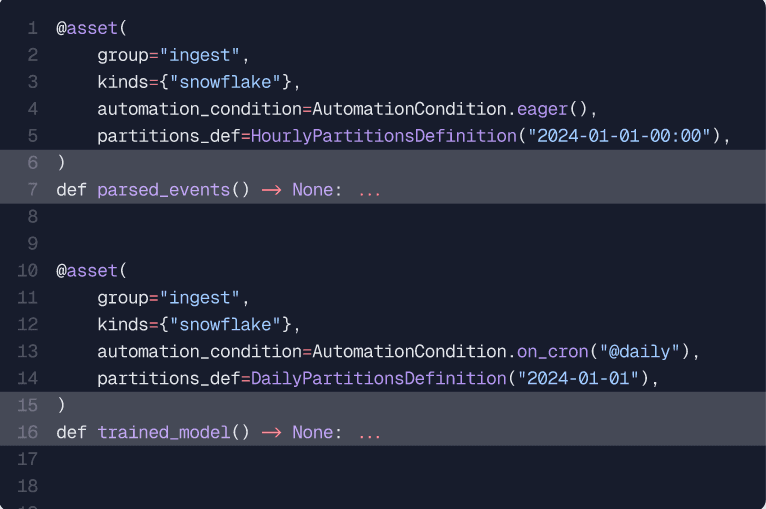

Declarative automation provides composable building blocks for common pipeline scenarios while maintaining complete visibility and control. By intelligently managing state and dependencies, Dagster can automatically trigger runs when parent assets update while offering customizable conditions for more granular control. This approach simplifies pipeline management and enables automated quality checks with the same flexibility as asset processing, ultimately minimizing development overhead and allowing teams to focus on building value rather than managing complexity.

Integrations Ecosystem

Watch the full session here.

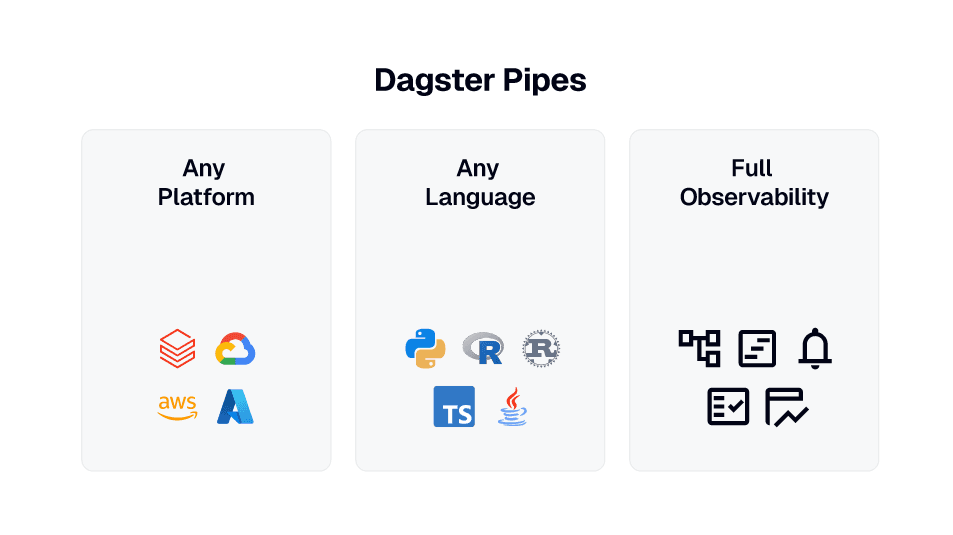

Data platforms are heterogeneous. The right tool for the job requires purpose-built solutions and interacting with external systems that run in specific runtimes that often don't play well with legacy orchestration tools.

Pipes is now Generally Available and unlocks a whole host of capabilities for data platform teams. Its standardized interface to launch code in external environments with minimal additional dependencies while still maintaining full visibility through parameter passing, streaming logs, and structured metadata makes it particularly powerful for incremental adoption. This flexibility is further enhanced by Dagster's strict separation of orchestration and execution, along with its first-class multi-language support, allowing teams to maintain their existing tech stacks while gradually incorporating Dagster's orchestration benefits.

Additionally, we are continuously investing in purpose-built open-source tooling to make it easier than ever for data teams to use the right tool for the job. With our embedded ELT package, we incorporated ingestion tools Sling and dlt so data teams can get data into their platforms cost-effectively. When we switched to using embedded ELT internally, we saved $40K annually on managed solution spending.

Transformation is the lynch-pin process in data platforms. SQL frameworks have become the practitioner's favorite way of accomplishing this function. In addition to our dbt integration we are excited to announce an integration with SDF. At Dagster, we are also committed to being open-source driven and supported. Open-source observer’s sqlmesh integration is another great example of the community coming together and shipping in ways we can all benefit from.

Orchestration Unification Federation & Airlift

Watch the full session here.

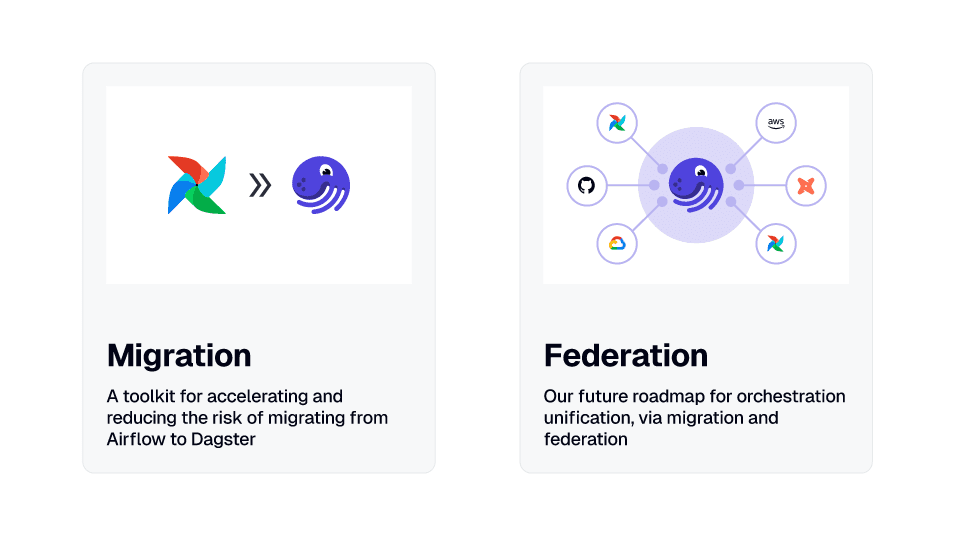

The evolution of systems mirrors construction projects, requiring specialized tools and scaffolding to ensure smooth transitions. It often isn’t feasible for organizations to lose functionality during a migration. Software migrations must be broken into manageable pieces that maintain system sustainability while building momentum.

Data Platforms are a going concern, and migration from legacy tools needs to be done in parallel so downstream processes are not impacted. We are excited to announce our Airflow observability and migration Tool Airlift.

The Airlift migration tool exemplifies this approach by allowing teams to peer into existing Airflow systems, observe and model data lineage, and gradually transition workflows to Dagster through API integration.

At Dagster, we recognize that data environments are complex and that is a full migration isn't always possible or necessary. With Airlift, we offer federation federation capabilities that enable organizations to unify and schedule orchestration across multiple orchestrators. So teams to maintain existing systems while selectively adopting new ones where most beneficial.

System of Record - BI assets

Watch the full session here.

Business Intelligence tools are the primary data consumption tool for most organizations. When there are data quality problems or inconsistencies in the underlying data, they typically become apparent when users attempt to create or review reports. Bringing these tools into your asset graph is crucial for establishing a data platform system of record.

The scale of modern data platforms demands a system of record. With the inclusion of Business intelligence tools, you now have full end-to-end lineage and observability of your entire data platform. This is a huge development for Data teams to have this observability without being siloed in a single tech stack. When you build with Dagster you get unified command and control while being able to use the best tool for the job.

Implementation Showcases

In addition to hearing from Dagster experts, we had showcases from several Dagster power users to showcase how they use Dagster to power their organizations:

Declarative Automation

Orchestrating SMB data

Tian Xie - Enimga

Enigma Collects data from 100s of disparate sources to provide their SMB clients with a single rich dataset. They chose Dagster to improve the velocity of data platform improvements with branch deployments and declarative automation to handle the complexity involved in reconciling the data sources together.

Declarative Automation at scale

Stig-Martin Liavåg - Kahoot!

Kahoot! Is a global learning engagement platform that processes large volumes of data for product analytics. They utilize declarative automation with custom automation conditions to keep the assets on different schedules up to date while minimizing costs.

Pipes

Cost effective alternative to Databricks

Georg Hieler - Magenta & ASCII

Georg utilized Pipes with Dagster to manage optimized spark runtimes on Databricks and AWS EMR for different jobs while maintaining a single pane of glass view over the process and reducing costs by 40%.

Airlift

Changing the car while driving it

Alex Launi - Red ventures

Red Ventures utilized Airlift to assist in a dual migration from Scala to dbt and Airflow to Dagster without the headaches usually associated with system migrations.

Data Engineering Panel Discussion

Data Platform Week wrapped up with a fun and wide-ranging conversation about Data Engineering with several leaders in the space.

Panel Members:

- Dagster Founder and Chief Technology Officer - Nick Schrock

- Head of Data at Sigma - Jake Hannan

- Cofounder and Product at SDF - Elias DeFaria

- Product & Engineering at Atlan - Eric Veleker

Topics of discussion

- The challenges Data engineers face teams today

- Dealing with legacy technologies

- Data Quality

- Artificial Intelligence

Closing Thoughts

Modern organizations run on data platforms, with unique patterns for generating and consuming data. Data practitioners come with strong preferences for specific tools and technologies, creating a complex landscape for platform owners to navigate.

Traditionally, this has forced difficult tradeoffs: either give developers the flexibility to use their preferred tools at the cost of comprehensive observability, or standardize on a limited toolset that sacrifices capabilities and productivity. Neither option fully serves the needs of modern data teams.