We are pleased to announce Elementl's $33M Series B and share our vision for what's next for Dagster and the practice of data engineering.

Elementl is pleased to announce our Series B financing, raising a total of 33 million dollars led by Georgian and joined by new investors 8VC, Human Capital, and Hanover, along with existing investors Amplify Partners, Index Ventures, Slow Ventures, and Sequoia Capital. You can read the official announcement on our blog.

Georgian believes in the power of data and criticality of data orchestration, demonstrated by how they have built an extensive data platform and practice to work with their portfolio. Beyond that, they have deep conviction on Dagster, as they are enthusiastic users of the product. Given their deep domain expertise, we are thrilled to add them as partners.

Big Complexity is the challenge, not Big Data

In November 2021, we published a blog post which laid out our belief that we were in the “Decade of Data” and that “Big Complexity” is the challenge problem of this decade. Those words have stood the test of time. Data is transforming the way organizations operate. Data assets drive human decision and automated decision-making at organizations of all sizes through analytics, machine learning, and AI. As a result, building and maintaining trusted data assets is an imperative at every organization of any size.

The last five to ten years have seen an explosion of tools and point solutions—often called the Modern Data Stack—emerge to support this imperative, and there has been enormous progress. But most organizations are still drowning in the complexity of managing their production data assets. Many targeted tools and point solutions lead to early wins and better localized productivity, but contribute to more organization-wide chaos, as practitioners across the organization create huge numbers of assets with disparate processes and tools.

Taming this chaos, and empowering organizations to develop and operate their data assets confidently and efficiently is one of the most critical infrastructure challenges of the decade. We call it Big Complexity. In software, the art of managing complexity is software engineering. In data, it is data engineering. The decade of data can only be mastered by becoming the decade of data engineering.

Data Engineering is a discipline, not a job title

Data engineering is the practice of designing and building software for collecting, storing, and managing data.

The lynchpin discipline for the Big Complexity problem is data engineering. Many think of data engineering as something that only data engineers do. We instead consider data engineering as a cross-cutting discipline. If data engineering is defined as keeping a production set of trusted data assets up-to-date and of high quality, then nearly all data practitioners do data engineering as a critical part of their job.

ML Engineering is largely data engineering, as they directly build or support others that build production ML models, which are just another data asset. Data science—when it includes end-to-end responsibility for production ML models—also has a substantial data engineering component. Analytics engineering is a subspecialty of data engineering focused on SQL-based analytics use cases. Data platform engineers—an emerging role heavily represented in the Dagster community—scale data engineering across all of these roles.

...nearly all data practitioners do data engineering as a critical part of their job.

This isn't a theory. We see this emergent behavior in our fast-growing Cloud customer base, as over half of our customers use Dagster Cloud for ML use cases alongside analytics. Another fifty percent utilize Dagster to create assets for use in production applications. dbt—the definitive tool of analytics engineering—is our most used integration, and Dagster Cloud is the most powerful, best-in-class dbt-native orchestration solution. The use of Dagster for multiple use cases that span roles is the norm rather than the exception.

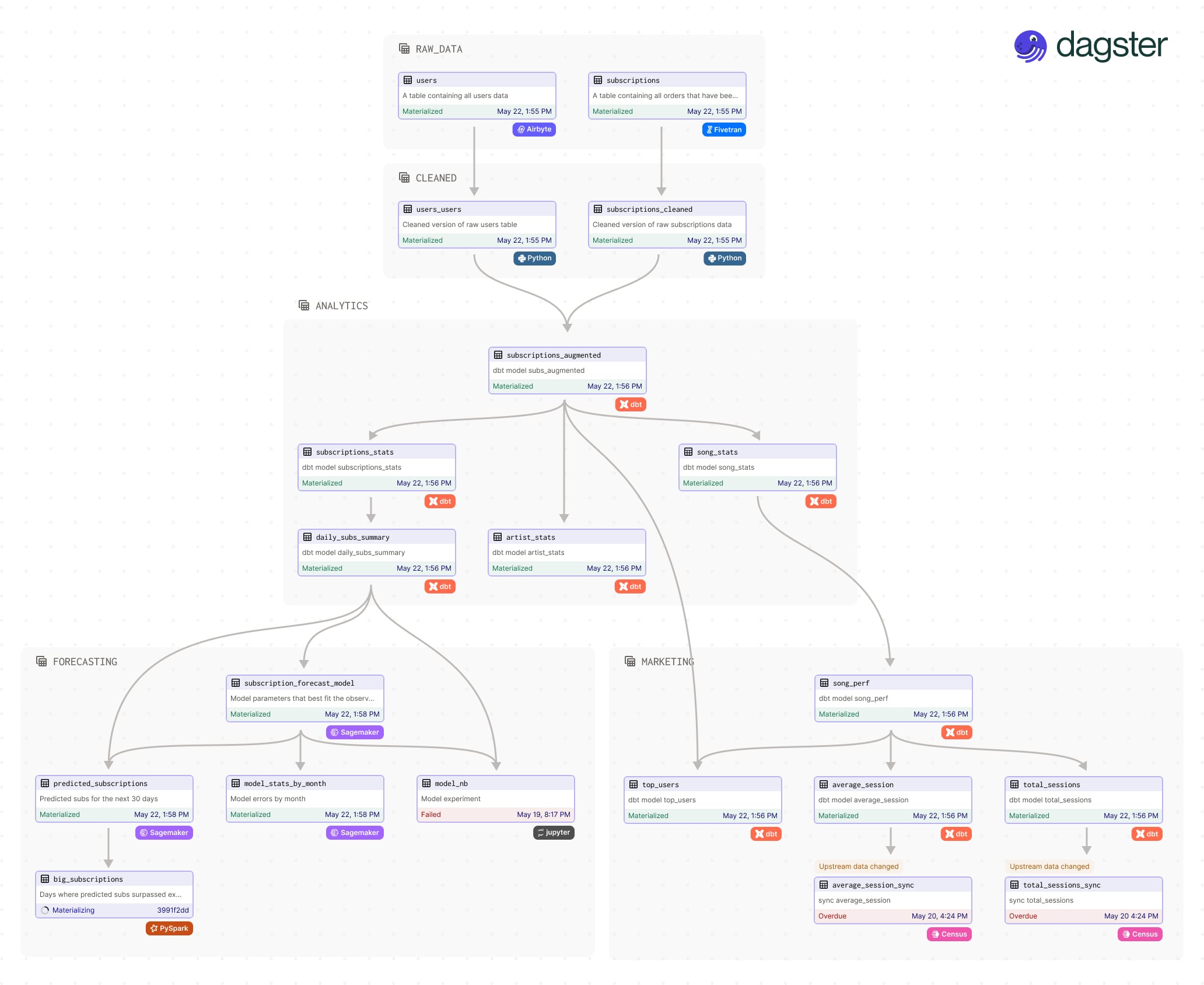

Data engineering is the discipline that unites these roles. And this is reflected in their work product, not just their process. ML engineers depend on the assets created by analytics engineers. Analytics engineers depend on the assets created by data engineers. Through a common discipline, they create an interconnected, interdependent graph of data assets.

Dagster manifests this graph directly within the product. The asset graph is the center of the programming model, the subject of the development lifecycle, the seat of metadata, and the operational single pane of glass.

A world of layers, not silos

When you start to view data engineering as a discipline rather than a job title, you start to see it across the organization, and it becomes clear that there should be a software layer to unify that activity across titles.

The data world today is siloed across a number of domains.

- There is organizational siloing across teams, as ML, analytics, and product teams too often use completely independent stacks to perform that same essential activity.

- Teams adopt myriad point solutions and create operational silos, as practitioners are forced to navigate several disconnected tools to accomplish basic operational and development tasks.

- The counterweight to these trends is a new tendency toward large vendors—typically hyperscaler cloud and data platforms—providing a vertically integrated stack of tools. These are vendor-specific, vertical silos resulting in lock-in and inflexibility.

We believe the data ecosystem must think in layers, not silos. Layering means the unification of capabilities across an org while still allowing the flexibility to adopt and use heterogeneous tools on top of that layer. For example, there should be a unified scheduling layer rather than isolated schedulers in a number of hosted services. For ML teams, MLOps tools are a layer of specialized tools on top of a layer of pipelining and orchestration tools across the data organization rather than a completely isolated stack of tools.

In this framework, Dagster is the cross-cutting layer for data engineering and a unified control plane for assets and the data pipelines that create them.

The exciting future of orchestration: a data control plane, not a workflow scheduler

Bringing order to chaos through data engineering rigor on a unified, asset-oriented control plane.

The legacy view of orchestration—that it is a system solely for the scheduling and ordering of tasks in production—is holding the ecosystem back as data teams are forced to expensively integrate an entire suite of tools to have basic data management capabilities.

We believe orchestration is a critical component of a new layer: the data control plane. The purpose of this control plane is to house and manage the asset graph. Teams that adopt Dagster report getting three core benefits:

- Productivity: Development of the graph is driven by a software engineering process, with a focus on testing and fast feedback loops. It provides leverage for platform engineering to make all of their stakeholders more efficient and productive.

- Context: By its nature, it has built-in data lineage, observability, and cataloging, and therefore provides unprecedented context to practitioners about the state of their assets and the platform.

- Consolidation: These consolidated capabilities enable data teams to get to scale without bringing in a number of tools for basic data management. Data quality, cost management, and governance capabilities are natural additions to the layer.

This is not a world where there is “one tool to rule them all”. There will still be a rich ecosystem of tools in this vision. The layer is programmable and pluggable, and these capabilities are accessible and consumable via well-defined APIs. This empowers other tools in the ecosystem to build at a higher level that can deliver more value to customers rather than rebuild the asset graph over and over again.

In our new constrained economic climate, efficiency is paramount, and bringing software engineering practices and operational rigor to data platforms is growing in importance. The demands on data teams will increase even more as data is used to find efficiencies and waste in organizations. They will have to do more with less, so it is more critical than ever that practitioners are more productive and leveraged. And all of this must happen while understanding and managing the consumption of resources. Integrating this capability directly into the control plane with a principled approach to change management will empower data teams to control costs as never before.

The path forward

With this new funding, we will scale our organization, grow our community, and bring these new capabilities to data teams across the industry. If you’re excited by this mission, we are hiring across Sales, Marketing, Growth, Design, and Operations—you can check out our open roles here.