We built a light weight evaluation framework to quantiatively measure the effectiveness of the Dagster Skills, and these are our findings.

So you've written a skill. How do you know if the agent is actually using it? Is the correct context being referenced when you ask a question? Is it doing anything at all…?

We recently published our own collection of skills for working with the Dagster framework and the vast library of integrations that we support, and we had a similar question. We wanted to better understand what was going on under-the-hood when we asked Claude, "Put my dbt models on a schedule to run every 15 minutes". So we set out to create a light-weight evaluation framework to ensure users of our skills get the result they need.

The Dagster Skills

Before we dig in to how we evaluated our skills, let's talk about the skills that we provide.

First, we have dagster-expert which is the cornerstone of context for ensuring your project adheres to the patterns and architectural decisions for working with the Dagster framework. This includes information like whether or not an asset should be partitioned, which automation strategy is best to use (declarative automation, schedules, sensors), the patterns for defining a resource, design patterns in data engineering, and how to organize your project(s). Additionally, this skill makes heavy use of the dg command-line utility which is how we provide deterministic actions to the agent. This means you can do things like scaffold a new project, launch assets to be materialized, view logs, and troubleshoot failures in both your local and deployed instances of Dagster.

Next up, we have the dagster-integrations skill, which has the largest surface area by far. These skills are broken down by category: AI & ML, ETL tools, storage options, validation frameworks, etc. As an example, if you are trying to solve a specific data engineering problem, this skill will be able to provide you with suggested tools, how to initialize the integration, and how you would configure it within you Dagster project. It's worth pointing out that an additional complexity of creating a skill for integrations is that many of these tools have their own context, or documentation, that is required to ensure the tool is being used correctly. For that reason, we are trying to encode as many of the “quirks” and “gotchas” that an engineer might run into while working with these tools.

And finally, we have dignified-python. While not specifically designed for data engineering, this skills was created to promote what we consider to be production-ready Python. It is the skill we use internally while building with Python, and it includes many of the important concepts we promote, such as: modern type annotations, exception handling patterns, API design principles, and more.

Evaluating Skills

So now that you have an idea of what we're trying to validate, let's talk about how we've built a light weight evaluation framework for ensuring that our skills are providing the correct context, being invoked when we would expect, and are most importantly, producing correct outputs. Additionally, by having a light weight testing framework, it has enabled us to get quick and repeatable feedback on workflows with context on what does and doesn’t work. This allowed us to iterate quickly and drastically improve the Dagster skills

All of the source code discussed in this post can be found in the dagster-io/skills repository in the dagster-skills-evals directory.

The basic architecture of this framework is a simple utility that allows us to run Claude headlessly with prompts covering common use-cases, and a selection of plugins (skills) to use. From that we are able to create snapshots of tool and skill usage, token utilization, and of course the output that is produced. These snapshots are persisted in version control, which allows us to then monitor how they change over time as we modify the underlying skills.

Here is an example snapshot that is produced from a prompt requested to create an asset in an existing Dagster project. As expected, we can see that the dagster-expert skill was referenced, and we can see a narrative summary of what occurred during this request, and the tools that were used. We can see that thedg scaffold defs command was utilized, and that validation occurred by using the dg list defs command as we would expect!

{

"__class__": "ClaudeExecutionResultSummary",

"execution_time_ms": 59191,

"input_tokens": 56,

"narrative_summary": [

"- User requested to add a new Dagster asset named 'dwh_asset' with an empty body",

"- Invoked the **dagster-skills:dagster-expert** skill to understand project structure and how to add new assets",

"- Discovered the project uses `dg scaffold` command for creating new definitions",

"- Explored the project structure to find the `src/acme_co_dataeng/defs/` directory where assets should be placed",

"- Ran `uv run dg scaffold defs dagster.asset defs/dwh_asset.py` to generate the asset file (created nested path issue)",

"- Fixed the incorrect nested directory structure by moving the file to the correct location",

"- Read the scaffolded file which contained a template with type hints and return annotations",

"- Modified the asset to have a simple empty body with just `pass` statement using the Edit tool",

"- Verified the asset was successfully registered by running `uv run dg list defs`, which showed `dwh_asset` in the Assets section"

],

"output_tokens": 2431,

"skills_used": [

"dagster-skills:dagster-expert"

],

"tools_used": [

"Skill",

"Bash",

"Glob",

"Bash",

"Glob",

"Bash",

"Read",

"Read",

"Bash",

"Bash",

"Read",

"Bash",

"Edit",

"Read",

"Edit",

"Read",

"Bash",

"Bash"

]

}The underlying code for this evaluation looks like the following. We are doing more than just validating that skills are being used, but we are also validating the definitions that are produced by using the dg list defs command, and validating the resulting metadata. This drastically increases our confidence in the accuracy and utility of our skills.

def test_scaffold_asset(baseline_manager: BaselineManager):

project_name = "acme_co_dataeng"

asset_name = "dwh_asset"

prompt = f"Add a new asset with an empty body named '{asset_name}'"

# Run with skills enabled

with unset_virtualenv(), tempfile.TemporaryDirectory() as tmp_dir:

subprocess.run(

["uvx", "create-dagster", "project", project_name, "--uv-sync"],

cwd=tmp_dir,

check=False,

)

project_dir = Path(tmp_dir) / project_name

result = execute_prompt(prompt, project_dir.as_posix())

# make sure the skill was used

assert "dagster-skills:dg" in result.summary.skills_used

# make sure the asset was scaffolded

defs_result = subprocess.run(

["uv", "run", "dg", "list", "defs"],

cwd=project_dir,

check=True,

capture_output=True,

text=True,

)

assert asset_name in defs_result.stdout

baseline_manager.assert_improved(result.summary)

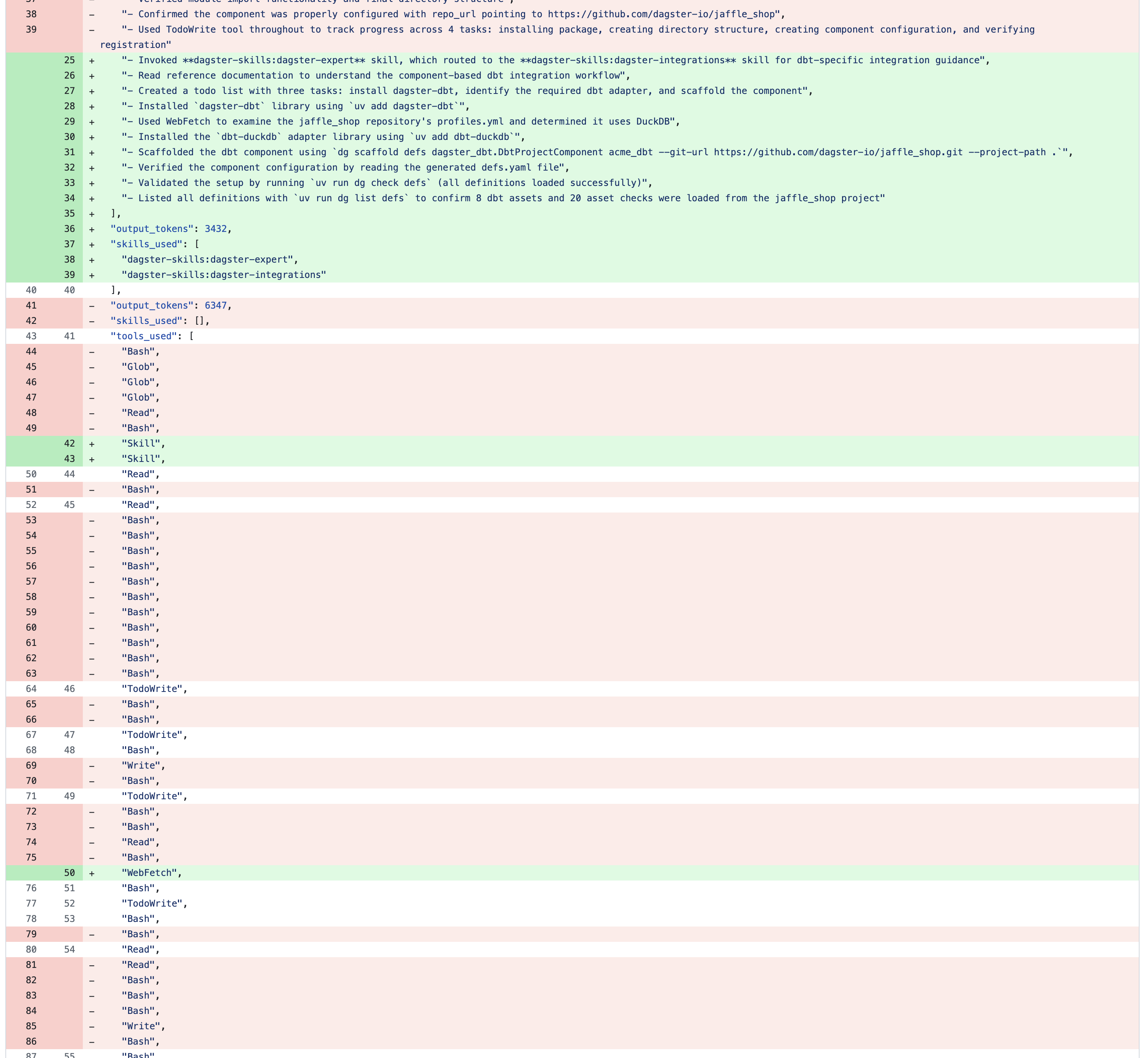

For a more compelling example, let’s look at a diff that was produced while improving the routing logic of our skills. Without the skills being used, we can see that the agent is struggling to make sense of the project, and manually reading and writing files in an attempt to complete the users goal. However, once the skills are adequately referenced, things become much more streamlined.

In addition to allowing us to better understand the actions of the agent, the narrative_summary has another benefit. It enables us to create a self-optimizing feedback loop. We can take the summary produced, provide that to an agent, and have it optimize the context while validating that the correct actions are being taken, token usage is reduced, and operations are simplified.

Lessons learned

While iterating on our skills, and validating the evaluation results, we've had a few insights.

Less is more!

This is the biggest takeaway in my mind. The initial creation of our skills was done by taking our existing documentation, pointing Claude at it, and asking it to extract the important information and re-structure it to be in the format of a skill.

There were a few issues with this automated translation, one is it still hallucinated and the parity of the translated docuements were not perfect. The second is that the resulting skills were extremely verbose, and our evaluations have revealed that terse skills seem to perform much more efficiently.

Decision trees

Another revelation is the importance of decision trees. At the top-level of each of our skills we define a decision tree of which files to reference. This maps key words or phrases to a sub-set of documentation within a references/ directory, and has helped ensure the agent retrieves only the information required for a given task.

Provide foundational command-line utilities

There is no replacement for purpose-built command-line utilities. We've found the composition of tools to work extraordinarily well from within our skills, and when interfacing with Dagster, we've opted to create specific dg commands to support it.

Instead of relying on the agent to determine which file to create, we have scaffold commands to make deterministic. To validate whether or not the Dagster definitions were created correctly, we have a dg check command. To interface with a Dagster instance, we've wrapped our API in a dg api command.

These commands have given our skills a structure to be based upon, and also a more predictable structure to parse.

Feedback loops

And finally, by creating this evaluation framework we have enabled ourselves (and agents) to iterate quickly and improve our skills in a quantitative way. By having a summary of all actions taken place during the agent’s session, we are able to quickly review if the expected behavior is taking place, and if not, modify our skills to ensure that it does.

On evaluation documentation

To close out this piece, I want to re-emphasize the importance of evaluations, a topic that feels surprisingly controversial, with many arguing online that they’re unnecessary. Before adopting this framework, we were primarily operating on faith: hoping that the context embedded in our skills would yield the desired outcomes. With evaluations in place, that guesswork disappeared. We gained clear, measurable confidence in system behavior, established tight feedback loops for iteration, and saw concrete improvements in both token utilization and tool usage.

And finally, it feel like we might be entering a new era for technical writing and education enabled by large-language models. Technical writing might be more important now than it has ever been—and it can be measured! We are now able to validate information architecture, documentation accuracy and topic coverage, and ensure that we are supporting both our human and agent readers in a qualitative way. Working on the Dagster skills and the evaluation framework has made it clear the immense importance of accurate, well thought-out documentation, and information architecture.

As always, contributions and feedback are always welcome, feel free to create discussions, pull requests, or issues on the dagster-io/skills repository.