When lots of event logs must be stored and indexed, Kafka is the obvious choice. Naturally, our queue runs on Postgres.

We shipped Dagster Cloud 1.0 in August. It’s been pretty successful so far, and this is the first in a series of blog posts talking about how we built it.

Today I’m going to talk about why we made the unconventional decision to build our logging system on top of Postgres instead of Kafka, what worked well, what didn’t work well, and how we did it.

🔬 Framing the problem

🔨 Don’t choose the right tool for the job

🚌 Postgres as a message queue

📏 How we measured

📈 Scaling the database: archiving and rate limiting

💥 Dealing with failure

🙈 Things that didn’t go well

🧬 Future work

🔬 Framing the problem

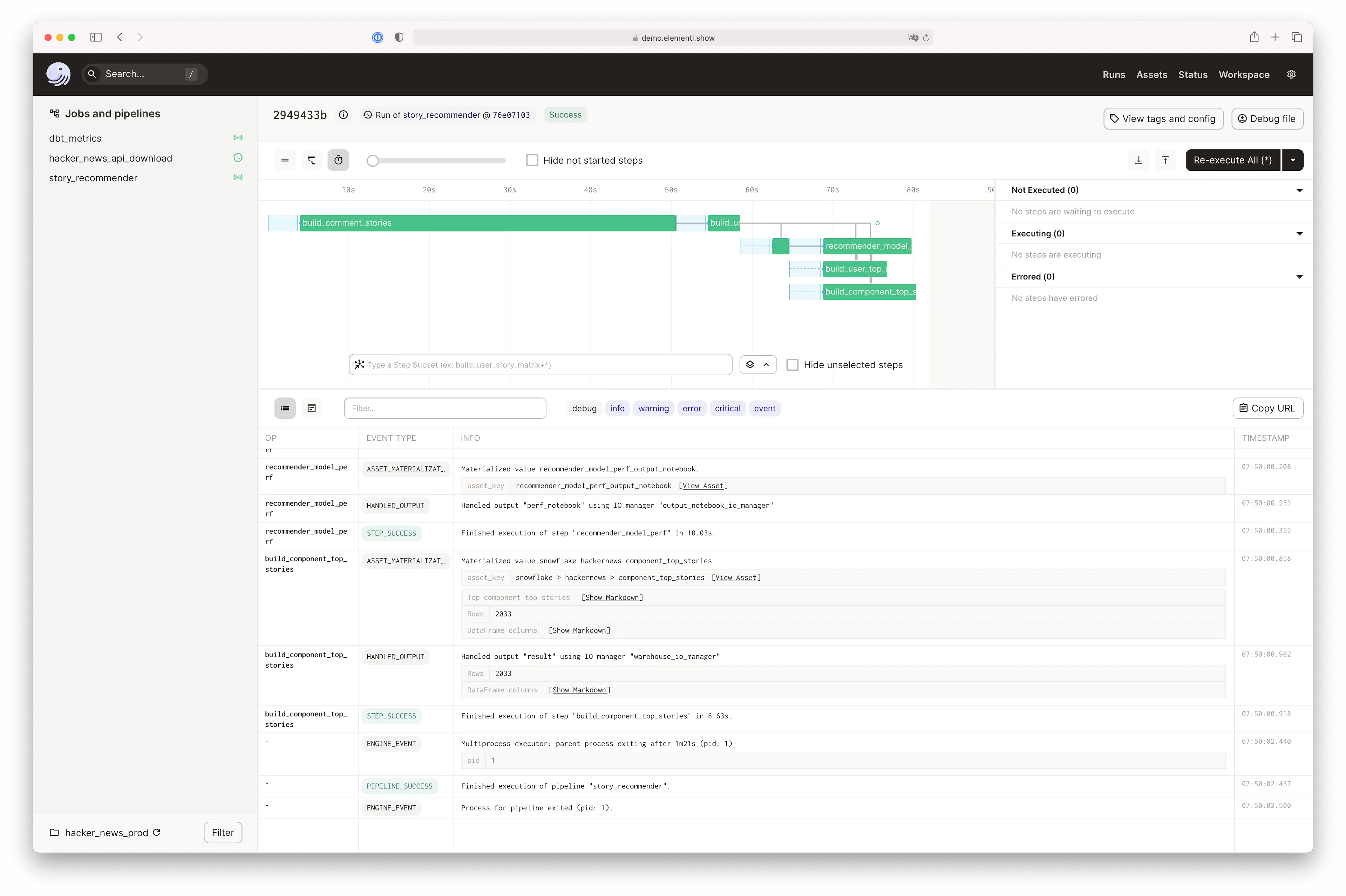

Dagster Cloud is a system for orchestrating data pipelines. At its core, there are two main components:

- A scheduler that kicks off runs of data pipelines

- A logging system that aggregates structured and unstructured information about the pipelines and reports it to the user via a UI.

In this post, we’re focused on that second component.

As the data pipeline executes, it spits out lots of event logs that we must store and index to render our UI.

At first glance, ingesting these logs looks like a classic Kafka-shaped problem. It’s an append-only, ordered queue of messages organized by topic that we want to consume in near-real-time. Furthermore, we have a lot of data coming from our customers, so it’s critical that we process this data in a scalable, cost-effective way. Kafka is the obvious solution.

Or is it?

🔨 Don’t choose the right tool for the job

I am a big fan of Dan McKinley’s essay on Choosing Boring Technology.

The tl;dr is that adding infrastructure incurs significant costs. If you’re going to add new infrastructure, it better be really important and useful infrastructure. Especially when you’re a small startup.

When I say costs, I mean stuff like this:

- Time to evaluate the new technology

- Time to learn best practices

- Monitoring it

- Debugging it in production

- Following the ecosystem

- Evangelizing it internally and driving the right level of adoption

It’s just a lot of work to add a new piece of infra. And it’s often sustained work over a long period of time.

So here’s my philosophy when faced with a new problem:

- Never choose the wrong tool for the job. If existing infrastructure can’t solve the problem, or it will be too slow, expensive, or insecure/noncompliant, don’t use it.

- It’s better to use a tool that you’re already using, even if it’s not the perfect tool for the job

When we built the first features in the open-source project, we reached for Postgres. This was a technology our team already knew how to use, and from prior experience, we knew it could serve our initial use cases well.

🚌 Postgres as a message queue

As we were already running Postgres, if we could prove to ourselves that Postgres wasn’t the wrong tool for the job, it might be worth using instead of a new piece of infra like a dedicated message queue.

However, using a relational database - such as a Postgesql database - as a message queue is a well-known anti-pattern. The haters dial in on three main points:

- RDBMS’s are too slow to act as a message queue

- Sharing direct RDBMS access across a fleet of services is bad practice

- Many haters want you to buy their message queue product

We don’t have any issues with #2, as we are proud of our majestic, well-factored monolith (but that’s another blog post… someday). In environments where this is an issue, it does not need to be a deal breaker; it can be wrapped in a service API just like any other piece of state.

Really, I’d like to focus on the first point: performance.

🚅 Computers Are Fast™ (and so is your RDBMS)

I can’t tell you how many times I’ve heard this exact conversation in my career.

Engineer 1: “We need to adopt new technology X because boring technology Y is too slow.”

Engineer 2: “How slow is it, and how fast does it need to be?”

Engineer 1: “No idea, I never measured it.”

It’s important to actually measure this stuff by running a stress test. Most of the time, you’ll discover that the boring old technology is fast enough because Computers Are Fast™.

Computers are also Big, too. You can rent a 128-core, 3 TB of RAM instance from AWS. That ought to be enough for anybody.

Even if your old, boring technology is having trouble scaling today, you might be able to scale up if put on a New, Big Box™ with very little effort.

Obviously, this is not a silver bullet. Some workloads can’t take advantage of all those resources, or it otherwise won’t be cost-effective. But you should measure it and be sure.

📏 How we measured

We originally chose Postgres simply because it was a technology that the team already knew. And it just kept chugging along as we gradually rolled our product out to a few early access customers.

However, before we launched Dagster Cloud to the public, we did revisit this decision to ensure that we made the right call. This time, we measured. This is actually a pretty straightforward, objective process that comes in three steps.

- Define your service level objectives (SLOs) and measure them via an observability system like Datadog. You usually want, at a minimum, an availability SLO (i.e., 99.9% of requests succeed) and a latency SLO (p99 latency is < 1s).

- Define your load objective. This is just the number of users you want to be able to support at a given time. If you’re launching a new product, ask marketing how much traffic they expect on launch day and double it. If there isn’t going to be a splashy launch, try to project out where you’ll be in, say, one year, and add a 10-20% buffer.

- Run a load test by spinning up a test cluster and writing some scripts to simulate real usage. Keep fixing bottlenecks and re-running load tests until you hit your objective.

What we found was Postgres did not hit our objective out of the box. However, we were able to get there within 1-2 weeks of work. The specific changes we had to make were:

- Our DB instance size was too small. We had to move up to the next AWS instance size. For extra headroom, we picked an even larger instance size.

- We had three problematic queries that we addressed one by one. We were able to completely eliminate one of them (it was unnecessary), and the other two were fixed by reducing a polling interval and by adding additional columns to the index.

Once we had done this work, we were confident that we would be able to survive launch day and go well into 2023 without this system falling over.

📈 Scaling the Postgresql database: archiving and rate limiting

We did a number of tricks to scale the database beyond simply “buying a bigger box.”

The first thing we did was proactively address the issue of unbounded growth. When building a process that writes to a database, it’s critical to design your application in a way that does not cause unbounded growth. Said another way, we need a way to ensure that we’re regularly purging the database of old data, so it doesn’t continue to grow forever.

We solved this by building a log archiving system that consisted of two components:

- The write path. We built a daemon that would select log entries that were older than two weeks, copy them into a file in S3, and delete the rows from the database.

- The read path. Our web application would look at the time period of the logs being queried and dynamically decide to query either the database or S3.

This log archiving system substantially reduced the total size of the database, massively slowed its growth, and had a negligible impact on the user experience.

The second thing we were worried about were spikes in customer log volume. We are a developer tool, and from time to time developers make mistakes and create large volumes of log spew. We needed a way to protect the database from the impact of an incident like this.

We did this by introducing a rate limiter. When a customer would log too many events of a specific type during too short of a time window, our API servers would start processing and returning a 429: Too Many Requests HTTP response code, causing our customers to throttle their requests.

Our rate limiter has a few important characteristics:

- It does not depend on Postgres. The rate limiter is designed first and foremost to protect Postgres, so it’s one of the only stateful pieces of our infrastructure that cannot depend on it. We store its configuration in the Kubernetes ConfigMap, and we store counts in Redis.

- It supports different rate limits based on the - It supports rate limiting by DB cost.** We can give individual customer deployments a certain quota of “DB-seconds” - basically, time that they can spend querying the database. If they exceed this threshold we rate limit the misbehaving deployment.

💥 Dealing with failure

One of the big advantages of using replicated, distributed message queue systems like Kafka is the strong availability guarantees and ability to recover from failure.

What we have found so far is that, because there are fewer moving parts than a large Kafka deployment, we likely have similar uptime with our single, rock-solid Postgres DB. Additionally, modern environments like AWS RDS allow for hot standbys and quick failovers to replicas, which means failures will often result in just a few seconds of downtime.

Regardless of whether you use Postgres or a message queue, it’s very important to actually test a failover. You’ll often find misbehaving clients that do not gracefully fail.

🙈 Things that didn’t go well

Like any big launch, things weren’t perfect.

- ID overflows. A long time ago, we built a prototype that used 32 bit autoincrement integers as the primary key for a few - Determining rate limiting thresholds. After we built our rate limiting infrastructure, it was challenging to determine which thresholds we should set. During development, we accidentally rate limited a few customers that had bursty traffic, leading to slightly degraded service for those customers.

- Upsizing our DB instance. Before launch we decided to upsize our DB instance. This required us to take a brief (20 minutes or so) downtime before the launch. While there are ways to do this sort of migration with no downtime, it would require more engineering time to implement, and add more risk to the migration due to the increased complexity of the migration. After examining our customer SLAs and determining that we had plenty of headroom, we decided to give our customers two weeks notice and take this brief downtime during our scheduled maintenance window.

- Redis-related downtime. We weren’t able to use Postgres for everything. Specifically, we had introduced Redis for some of our less important, transient event streams because the Python queue library we used at the time did not support Postgres. Redis ended up causing a number of incidents, so we are likely going to migrate this transient queue to Postgres.

🧬 Future work

Our current architecture is not perfect, and we will have to make improvements in the future. Here are some of the things that are bad about it:

- We don’t support multiple regions, which is important for some non-US customers.

- While short, failovers still require taking some downtime.

- It is possible for a misbehaving internal service to take down the DB for everyone.

- We are wasting money by having Postgres provisioned for peak load at all times.

Today these are not major problems for us, and frankly, I’m not sure how we’ll tackle these problems. Maybe we’ll grow multi-region support and buy really big Postgres boxes. Maybe we’ll move to sharded Postgres. Maybe we’ll switch to Kafka or some other distributed queue. Maybe we’ll use cool Postgres features. Or maybe Neon, CockroachDB or DynamoDB will save us.

While I don’t know what the future will hold, I do know that we will try to choose the most boring technology possible, and ground our decisions to add new technology in objective measurement.

With that said: we’d love for you to help us solve these problems! Hit me up on Twitter if you’re interested in jamming on this stuff as a full-time engineer at Dagster Labs. And if you enjoyed this blog post, don't forget to star us on github!.

(1) Image courtesy of DALL-E 2

We're always happy to hear your feedback, so please reach out to us! If you have any questions, ask them in the Dagster community Slack (join here!) or start a Github discussion. If you run into any bugs, let us know with a Github issue. And if you're interested in working with us, check out our open roles!

Follow us: