The beliefs that organizations adopt about the way their data platforms should function influence their outcomes. Here are ours.

Different data tools reflect different philosophies of how data pipelines—and the data platforms they live in—should be built.

Our mission at Dagster Labs is to empower every organization to build a productive and trustworthy data platform. The beliefs that organizations adopt about how their data platforms should function make a huge difference in their ability to achieve these outcomes.

We designed Dagster around a set of beliefs about the right way to build and operate data pipelines and data platforms. While these beliefs aren’t shared by all data practitioners, organizations, or tool makers, our experience has taught us that organizations who adopt them end up with significantly higher productivity and more trustworthy data—especially as their data platforms grow and mature.

A summary of these beliefs:

- Data engineering is software engineering – A modern software engineering workflow, where all logic is captured as code or configuration in a version-controlled repository, is the best way to develop and manage data pipelines.

- Data platforms are heterogeneous – Data platform need to accommodate a variety of languages, data storage, and compute.

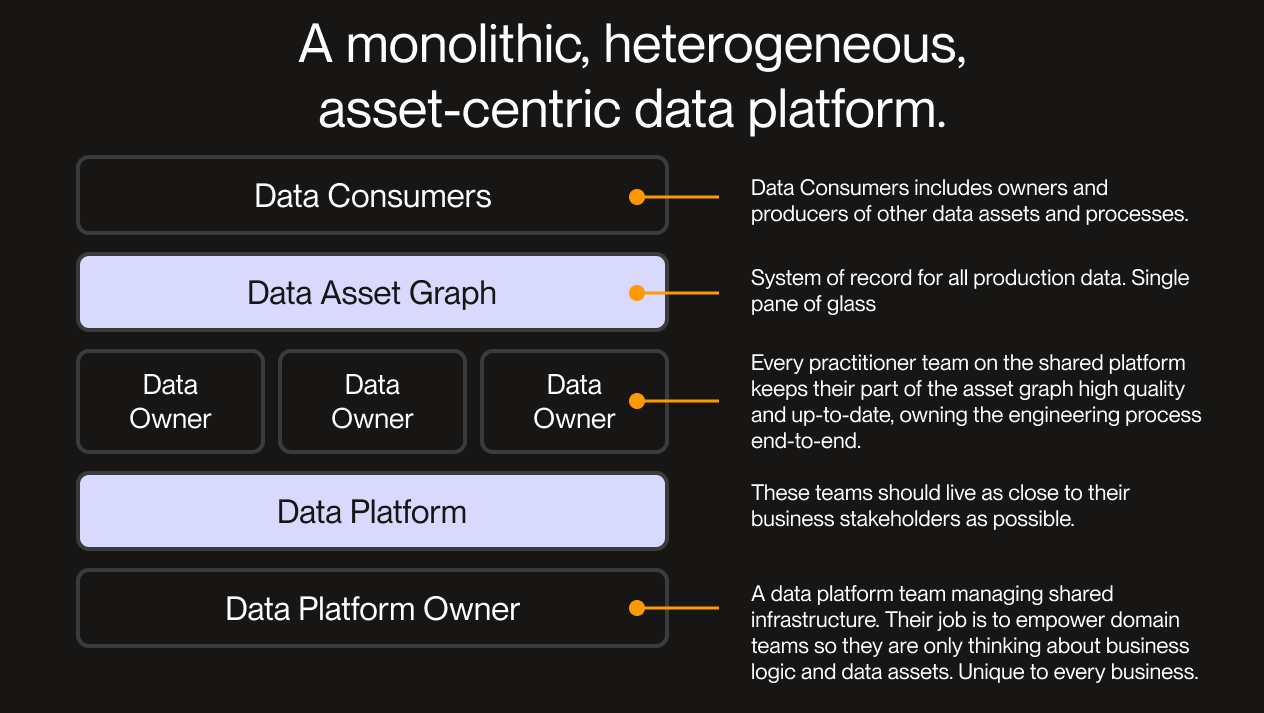

- Data platforms should be unified – There should be one data platform per organization, not one per team. The benefits of wide access to data, a standardized metadata and control plane, and data platform leverage are worth the costs of integrating everyone underneath a single roof.

- Declarative > imperative – Explicitly defining data assets and the state they should be in is the best way to tame the chaos that naturally arises in data platforms.

These beliefs have heavily influenced Dagster’s architecture. Tools necessarily reflect the environments that they’re intended to operate in and the usage patterns that they’re intended to facilitate.

In this post, I’ll explain these beliefs and why we hold them, and then discuss the set of design principles that they’ve led us to adopt for Dagster.

What is a Data Platform?

Before we dive in, what even is a data platform? When we say “data platform”, we mean a set of technologies and practices that support working with data within an organization. A data platform stores and serves data assets, like tables, files, and ML models. It enables data engineering, the development and maintenance of those data assets, by loading data from external systems and transforming data within the platform. And it often includes tools for automatically keeping data up to date, cataloging data, tracking data quality, governing data, and more.

Every organization that has data has a data platform. Google Sheets can be an effective way to store and process data in some circumstances – but some data platforms are more developed than others. Even among developed data platforms, there’s significant variation in tools and practices.

The Dagster Approach to Data Platforms

Data Engineering is Software Engineering

Data pipelines are software, often very complex software. The best way to develop and manage complex software is with a modern software engineering workflow where:

- Data pipelines are expressed as code, and checked into a shared version control system like git.

- Changes are vetted in a staging or test environment before being deployed to production.

Code is infinitely flexible. This allows adapting to the inevitable eccentricities of data pipeline requirements (”update this data as soon as its upstream data is updated, except on Bulgarian bank holidays that don’t occur on leap years”). And it allows for fighting bloat and redundancy by factoring out common patterns into reusable utilities.

The software engineering workflow is by far the best way of making changes auditable, reviewable, and holding bugs at bay.

This doesn’t mean that everyone who develops data pipelines needs to know Python. SQL is a fantastic language for expressing data transformations. Often, even simpler ways of expressing pipelines are useful: for example, if you have many different source datasets that need to be ingested in similar ways, a simple configuration language might be the best way to express these pipeline steps. What’s important is that everyone’s work lives in the same version control system, and that there are credible ways to include more flexible logic when it’s needed.

A consequence of this belief is avoiding:

- No-code / drag & drop approaches to data pipeline development.

- Data pipelines composed of notebooks that aren’t testable and version-controlled.

These approaches can make it quicker to develop data pipelines in the short term, but they’re more costly in the long term because it’s difficult to evolve and maintain these pipelines without introducing bugs.

Last, software engineering is also increasingly becoming data engineering: as more software becomes powered by data and AI, data pipelines become part of software applications. Adopting a common approach to development makes crossing the chasm between data and applications much more tractable.

Data Platforms are Heterogeneous

Data platforms are fundamentally heterogeneous: they need to accommodate a diversity of languages, storage systems and data processing and execution technologies under a single roof.

This heterogeneity stems from two sources: technological heterogeneity and organizational heterogeneity.

Technological heterogeneity: Different tools are good for different jobs. The best tool for transforming relational data will not be the best tool for fetching data from a web API. Data warehouses are excellent for storing relational data, but not excellent for storing images, text, or audio. Different technologies make different tradeoffs: latency vs. throughput vs. expressiveness vs. price. Resultantly, the best technology for the job today might be superseded by better technology in the future. A data platform that supports a variety of technologies can accommodate a variety of data storage and processing patterns.

Organizational heterogeneity: When creating a centralized data platform, you’ll often encounter people already working with data in the organization, often using different tools. For example, a marketing analytics team using Snowflake and an ML team using Spark and PyTorch. Even if you have the luxury of starting fresh, new teams with existing setups will inevitably need to be onboarded. For example, your company might acquire a company and need to integrate their data pipelines, or a finance team that has handled their own data so far might want to onboard to the platform so they can share reporting tables with the sales team. Migrating pipeline code to new frameworks is often prohibitively expensive. A data platform that supports a variety of technologies can accommodate a variety of teams and users.

Data Platforms Should Be Unified

Why not just have a separate data platform for each team or each usage pattern? For example, an analytics platform that’s restricted to Snowflake and dbt Cloud, and a separate ML data platform that centers on Spark and Python. This would enable avoiding the complexity of integrating disparate technologies into a single platform.

But there are big advantages to an organization-wide, unified data platform, where data flows easily between teams and the entire organization standardizes on a set of tools for cataloging, governing, and orchestrating data:

- From a business alignment perspective, it allows everyone to work off of a unified set of facts. If the sales team and finance team use a common set of tables as the source of truth for sales numbers, they’ll be more aligned and more able to make decisions together.

- From a data productivity perspective, a unified data platform means less duplicated work and more data access. When the machine learning team needs quality data to feed their ML models, they can benefit from the prior data cleaning work of the analytics teams.

- Finally, a unified data platform means that cross-cutting functionality has more leverage and more consistency. Each team doesn’t need to roll their own data catalog or their own way of pulling logs out of Kubernetes – the platform team solve these problems once for the entire organization. And users switching teams or debugging across pipelines don’t need to learn new technology at every boundary.

Declarative > Imperative

Data platforms naturally lean towards complexity - they grow to hold vast collections of interdependent files, tables, reports, ML models, and data processing steps. Declarative approaches are the best way to manage this kind of complexity and prevent it from turning into tangled, unobservable chaos.

Under an imperative paradigm, you give commands telling your system what to do (”change X”, “change Y”). With a declarative paradigm, you instead describe the end state that you want your system to be in, and manage change with respect to that end state.

Declarative approaches are appealing because they make systems dramatically more debuggable and comprehensible. They do this by making intentions explicit: for example “This table should exist, and should be derived from this file”. They also offer a principled way of managing change. When you explicitly define what the world should look like, software can then help you to identify when it doesn’t look like that and manage the reconciliation between the current and desired state.

Sometimes, writing data pipelines declaratively requires putting in a little more work at the start to state your intentions—things like what the pipeline produces and how it relates to other pipelines inside the platform. However, this work pays long-term dividends in productivity and reliability by making it possible to evolve and build on top of the pipeline with confidence.

Dagster’s Design Principles

As a data orchestrator, Dagster is a tool that often sits at the center of a data platform. Our beliefs about data platforms, outlined above, have led us to a set of design principles for Dagster. These principles influence the design of every feature inside Dagster and also help us prioritize which features to focus on.

Center the Assets

In the realm of data, being declarative means thinking in assets. A data asset is the umbrella term for a dataset, table, ML model, file, report, etc. – any object in persistent storage that captures some understanding of the world. Data pipelines produce and consume data assets. Understanding the purpose of a data pipeline requires understanding the data assets that it produces and consumes.

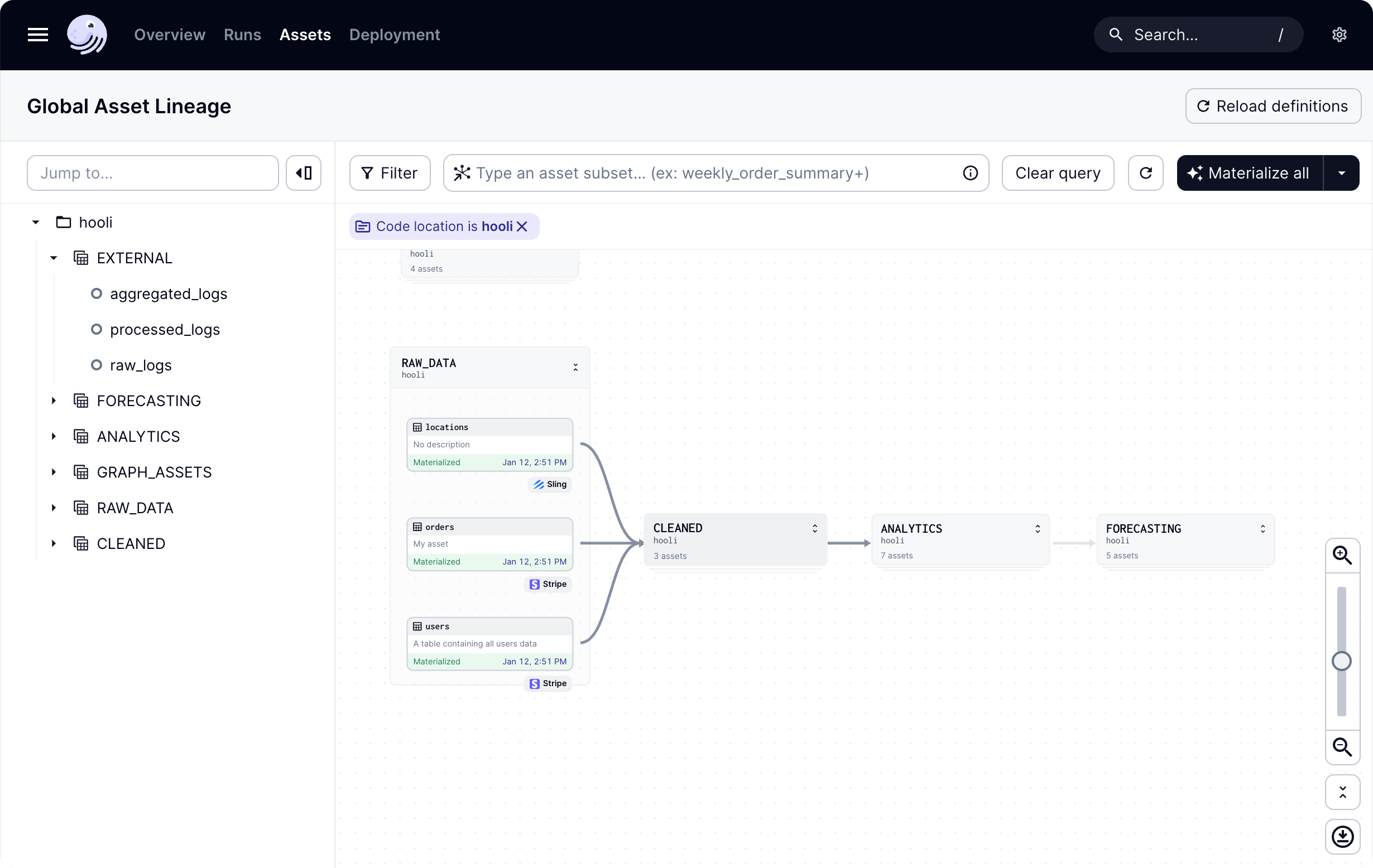

Dagster is designed to encourage users to think about data assets at every stage of the data engineering lifecycle. When defining data pipelines using Dagster’s APIs, users start by declaring the assets that their pipelines operate on and the dependencies between them. Dagster’s UI revolves around the data asset graph, which makes it straightforward to track problems by starting from the impacted data and debugging backwards to the computations responsible for producing that data.

Dagster’s feature set is largely designed around the core Software-defined Asset abstraction, where each asset can be partitioned, assets can be executed automatically in response to new data upstream, dbt models can be represented as Dagster assets, and data quality checks can be target assets and report on their health.

This allows anyone in an organization to understand the canonical set of data assets, how they’re derived, and how they’re used.

Code is King

Dagster is not a CRUD app. You build pipelines in code, not in the UI. That is, you create, update, and delete assets and jobs in Dagster by editing and deploying code, not by directly editing them in Dagster’s web app.

The same goes for the logic that specifies:

- When to automatically execute assets and jobs

- When to retry on failure

- How data is partitioned

- Data quality checks

All of these are specified and version-controlled alongside the assets and jobs that they apply to.

Dagster is designed to smooth the frictions that can arise with code-centric development through:

- Its streamlined decorator-based APIs enable defining pipelines without extraneous boilerplate.

- Rich logging and observability make it easier to understand what happens when Dagster executes user code.

- Dagster Cloud’s built-in Github Actions aid with packaging and deploy code.

- For those who don’t want to host any orchestration infrastructure, Dagster Serverless fully automates packaging and deploying code.

Embrace Non-Production Environments

Data engineering is software engineering, and much of software engineering involves non-production environments: local development, staging, and tests that run in CI.

If you can run your data pipeline before merging it to production, you can catch problems before they break production. If you can run it on your laptop, you can iterate on it quickly. If you can run it in a unit test, you can write a suite that expresses your expectations about how it works and continually defends against future breakages.

Dagster is designed for all of these scenarios. We believe that Dagster should encourage writing data pipelines without coupling them to any particular environment. This freedom makes it possible to run them in a variety of contexts. We’ve prioritized this across all of Dagster’s features:

- You can work with all Dagster objects inside unit tests and notebooks. The direct invocation and in-process execution APIs enable execution without spinning up any long-running processes.

- Dagster’s resource and I/O manager abstractions make it easier to test pipelines without overwriting production data.

- The

dagster devcommand enables an iterative local pipeline development workflow without launching any infrastructure. - Dagster Cloud branch deployments enable every pull request to be accompanied by a staging environment that contains the pipelines as defined in that branch.

Multi-Tenancy From Day One

Good fences make good neighbors. A heterogeneous data platform means many teams and use cases in close contact. It’s critical for these to be able to coexist peacefully, not trample over each other.

Trampling in data platforms takes many forms:

- Starvation: a pipeline uses up resources, which prevents another pipeline from making progress. For example, a low-priority experimental pipeline might compromise a business-critical report by occupying all available slots on the cluster.

- Monolithic Dependencies: requiring all code in the platform to be packaged with the same set of library versions. For example, adding a library dependency to a pipeline might restrict the Python libraries versions that other pipelines can depend on.

- Coupled Failures: a problem with one pipeline taking down other pipelines. For example, an invalid Cron schedule in one pipeline might cause distant pipelines to be unable to load.

- Clutter: Irrelevant assets or pipelines make it hard for people to find what they're looking for. For example, a massive new dbt project is onboarded onto the platform, and whenever the data engineering team searches in the catalog for their assets, the results they're looking for are buried under scores of "int_" tables.

Dagster’s architecture takes a federated approach, enabling teams to isolate their pipelines in ways that avoid this kind of trampling:

- Dagster code locations enable teams within a Dagster deployment to have fully separate library dependencies, including different versions of Dagster itself.

- Code locations also defend against starvation and coupled failures: even if code can’t be loaded, or if one team's schedules take too long to evaluate, other teams won’t be affected.

- Dagster has rich functionality for separating work into queues and applying per-queue rate limits.

- Dagster’s Software-Defined Asset APIs encourage a federated approach to defining pipelines. Instead of encouraging monolithic DAG definitions, each data asset can function as its own independent unit, with its own data dependencies and its own criteria for when it should be automatically updated.

It's

From Beliefs to Practice

In many cases, putting these beliefs about data platforms into practice means choosing to do the “right” thing over the easiest thing. For example:

- Asking teams to come together and standardize on cross-cutting tools and practices, rather than each team using what’s easiest for them in the moment.

- Writing pipelines in code and checking them into version-control systems, rather than using drag-and-drop tools.

- Writing pipelines in a way that declares their purpose, rather than writing the shortest piece of code that gets the job done.

These choices are sometimes less convenient in the short run but pay massive dividends in the long run through higher productivity, less entropy, and more trustworthy data.

A major theme in the way we designed Dagster was to make these choices easier. Dagster’s architecture and features aim to smooth out the friction-y parts of doing the right thing. In a data platform built on Dagster, it should feel natural to develop pipelines in code, declaratively, and link them into a unified whole.

Data platforms FAQs

Why is a software engineering approach crucial for data engineering?

Treating data engineering as software engineering ensures robust, maintainable data pipelines. This approach emphasizes version control for all code, testing in staging environments, and auditable changes, which minimizes bugs and facilitates long-term evolution. It also allows for greater flexibility and reusability of logic, adapting to complex requirements.

How do modern data platforms accommodate diverse technologies and teams?

Modern data platforms are designed to be heterogeneous, supporting a variety of languages, storage systems, and processing technologies. This design accommodates both technological diversity, like different tools for varying jobs, and organizational diversity, allowing disparate teams with existing setups to integrate seamlessly into a centralized platform without costly migrations.

What are the key benefits of a unified data platform for an organization?

A unified data platform provides a single source of truth, fostering business alignment by ensuring all teams work from consistent data. It boosts data productivity by reducing duplicated effort and increasing data accessibility across the organization. This approach also leverages cross-cutting functionalities, such as cataloging and governance, consistently for all users.

How do declarative principles enhance data platform reliability and management?

Declarative approaches define the desired end state of data assets, making intentions explicit and systems more comprehensible and debuggable. By describing what the world should look like, software can help identify deviations and manage reconciliation, which is crucial for taming complexity and improving reliability in dynamic data environments.

Why are non-production environments vital for developing data pipelines?

Non-production environments, including local development, staging, and continuous integration tests, are critical for building reliable data pipelines. They allow engineers to test changes thoroughly before deployment, catching problems early and iterating quickly on pipelines. This practice ensures that only validated and robust code reaches production, significantly enhancing overall data quality and system stability.