A recap of our live event on the benefits and techniques for orchestrating analytics pipelines.

As data analytics teams scale their efforts, they rapidly run into challenges. They are overrun by an increasingly complex collection of systems, code, files, requirements and stakeholders. They become over-reliant on institutional memory, and see degrading morale as more time is sent fixing things than on building anything new.

Bringing more people onto the team is one option, but it's expensive and does not address the root of the problem: Dealing with growing organizational complexity. In modern data engineering, it is not 'Big Data' that presents the big problems, it's 'Big Complexity' that is found inside most data-driven organizations.

In and of itself, tooling is not the answer. But a lack of framework and tooling for managing the critical data assets the team is in charge of producing will make it very hard to navigate organizational complexity, respond to many requests from stakeholders, enable self-service on data assets, and collaborate with other data practitioners across the enterprise.

From this perspective, an orchestrator is the essential platform for scaling your data analytics efforts. It provides a proper development process, observability and testability for your data pipelines, and performance optimization tools such as scheduling and partitioning.

In this article we dive into the benefits of bringing orchestration to your data analytics efforts, and provide a demonstration of the value orchestration will bring.

Managing complexity in data analytics

According to Brian Kernighan, Professor of Computer Science at Princeton University "Managing complexity is the essence of computer programming."

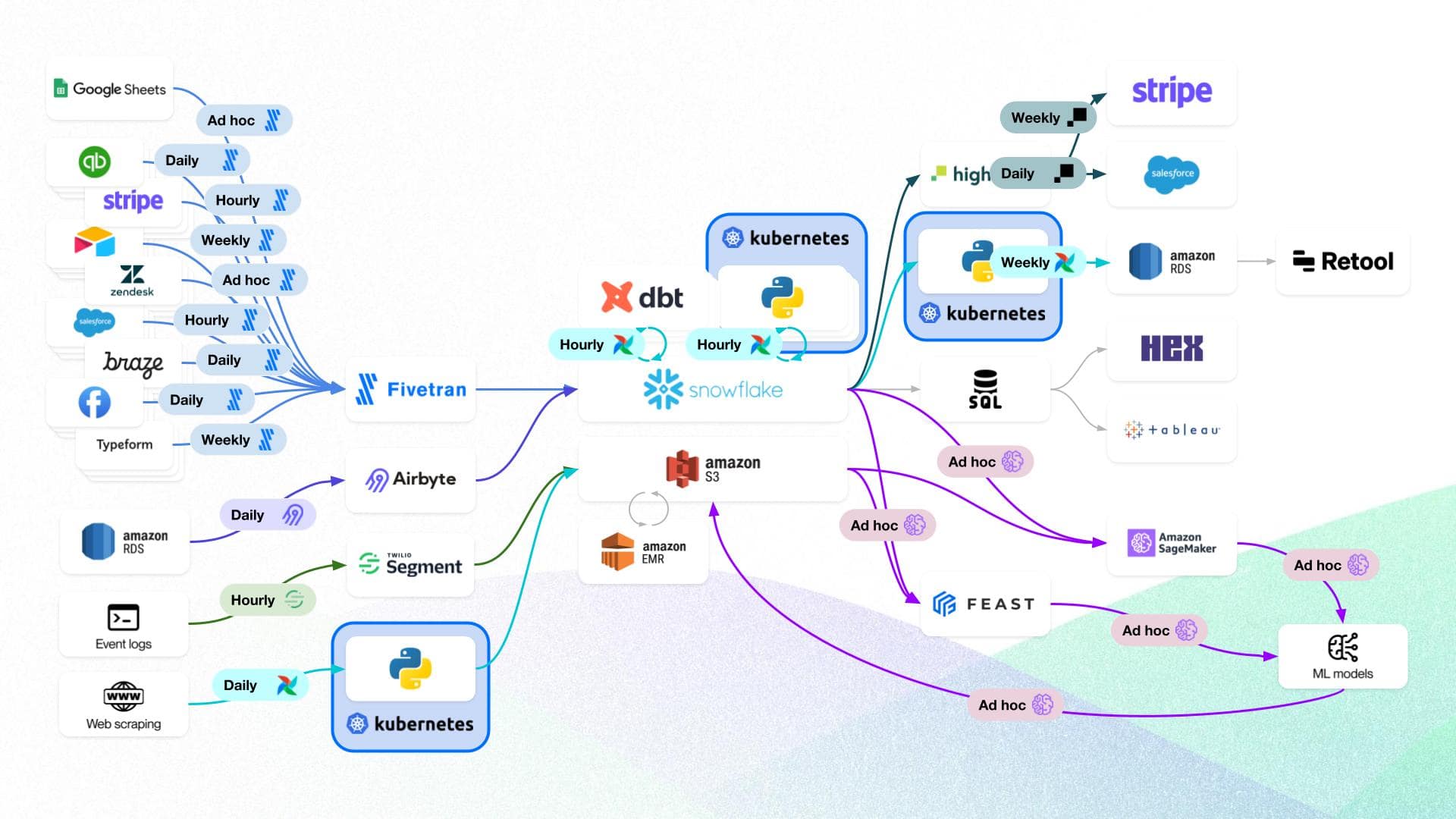

Managing complexity is important in traditional software engineering; it is equally important in the realm of data management and data engineering. In many ways, it is even more of a challenge when managing large platforms built around heterogeneous technologies and systems, including on-prem, cloud, SaaS, and/or open-source solutions.

Data platforms can span across multiple compute environments, be coded in different languages, and be operating on many isolated scheduling tools. Upstream data sources are unpredictable and beyond the control of the downstream consumers.

Understanding how these systems interact, who owns them, what their current status is can be challenging. When something breaks, debugging, fixing and recovering from the error can be time-consuming. Errors are often discovered in production by business owners downstream.

the data assets these fragile, untrusted platforms produce are critical for the efficient performance of the organization.

Data engineers are only too familiar with the stream of frustrated emails or Slack messages asking when the issues will be addressed or the dashboards updated. They also know how much time is wasted doing data wrangling across many services. Analytics can be chaos, and engineers can get burnt out, spelunking through thousands of lines of poorly documented and duplicated code, trying to figure out how it all holds together.

And yet, the data assets these fragile, untrusted platforms produce are critical for the efficient performance of the organization.

The role of orchestration in mastering complexity

Data analytics teams are discovering the value an orchestration tool can provide in their work. Simply put:

- Orchestration brings order to the codebase: it provides a "factory floor view" of the assets your code produces and manages the metadata for you.

- Build locally, then ship with confidence: no more pushing to prod, burdensome delays or jumping through hoops.

- Code becomes testable: You can test your code rapidly and in a totally safe environment.

- Unified control plane, which provides observability across your platform (including sources and definitions). It allows you to monitor status and troubleshoot issues rapidly.

Most orchestration platforms would claim to deliver some of the above benefits. But Dagster brings a unique declarative asset-centric approach to orchestration. This greatly simplifies the mental model in data analytics as the outputs of each defined asset can be directly stored and refreshed as a table. You can observe the state of each asset, its metadata, and trace the lineage and dependencies all in one interface.

Dagster fully supports dbt models with a native integration, making it easy to import all of your models, then manage and execute them in the context of the larger platform.

Moving your analytics pipelines to Dagster

If you currently run an analytics data pipeline in Python, with dbt models or other common analytics tools, porting it over to Dagster is typically straightforward. It is often simply a case of setting up the configuration of the resources that the data pipeline uses and moving the Python functions over, adding the Dagster @asset decorator. Yuhan Luo shows us how in this section of the event.

In most cases, you can tap into Dagster's free 30-day trial and run your initial pipeline on Dagster+.

Our data analytics showcase

In early May 2023, we invited Pedram Navid, founder of West Marin Data, to hand us (the Elementl team) a typical analytics pipeline he would build for his clients, using Dagster as the orchestrator.

From this example, we ran a Dagster showcase, highlighting the features and techniques demonstrated by Pedram. Here, you can watch the recording, access the code for this example in our public repo, and read a summary of the key takeaways from our event.

Pedram points to the key high-level benefits of orchestration in his work:

- Build locally and ship confidently to speed up his development cycles with cheap local resources, rapid testing, and iteration.

- Separate I/O and compute to free himself up from having to plan where the pipeline will ultimately be executed so it can be 'plugged in' to production systems once ready.

- Think in Assets which is a much more natural mental model in data analytics than focusing on ops.

- Use selective "materializations" - i.e., create and refresh parts of the data in a very surgical fashion, such as only updating data for the last week, thus speeding up his work and reducing costs.

In his example, Pedram taps into many of Dagster's features, including schedules, integrations, metadata analysis, partitions, and backfills. Thanks to these, he can build with confidence, deploy with ease and put his data pipelines on autopilot.

For local development, his example uses CSV files, Steampipe, the GitHub API, DuckDB.In production, he easily switches to Google Big Query and taps into HEX for visualisation.

Explore the video and the companion repo, and join us on Slack to find out how to boost your analytics pipeline with Dagster.

Interested in trying Dagster Cloud for Free?

Enterprise orchestration that puts developer experience first. Serverless or hybrid deployments, native branching, and out-of-the-box CI/CD.