Solve data ingestion issues with Dagster's Embedded ELT feature, a lightweight embedded library.

We have seen a lot of attention given to many parts of the data engineering lifecycle, from storage to transformation to analytics. However, one part of the lifecycle that remains frustrating for data engineers is ingestion.

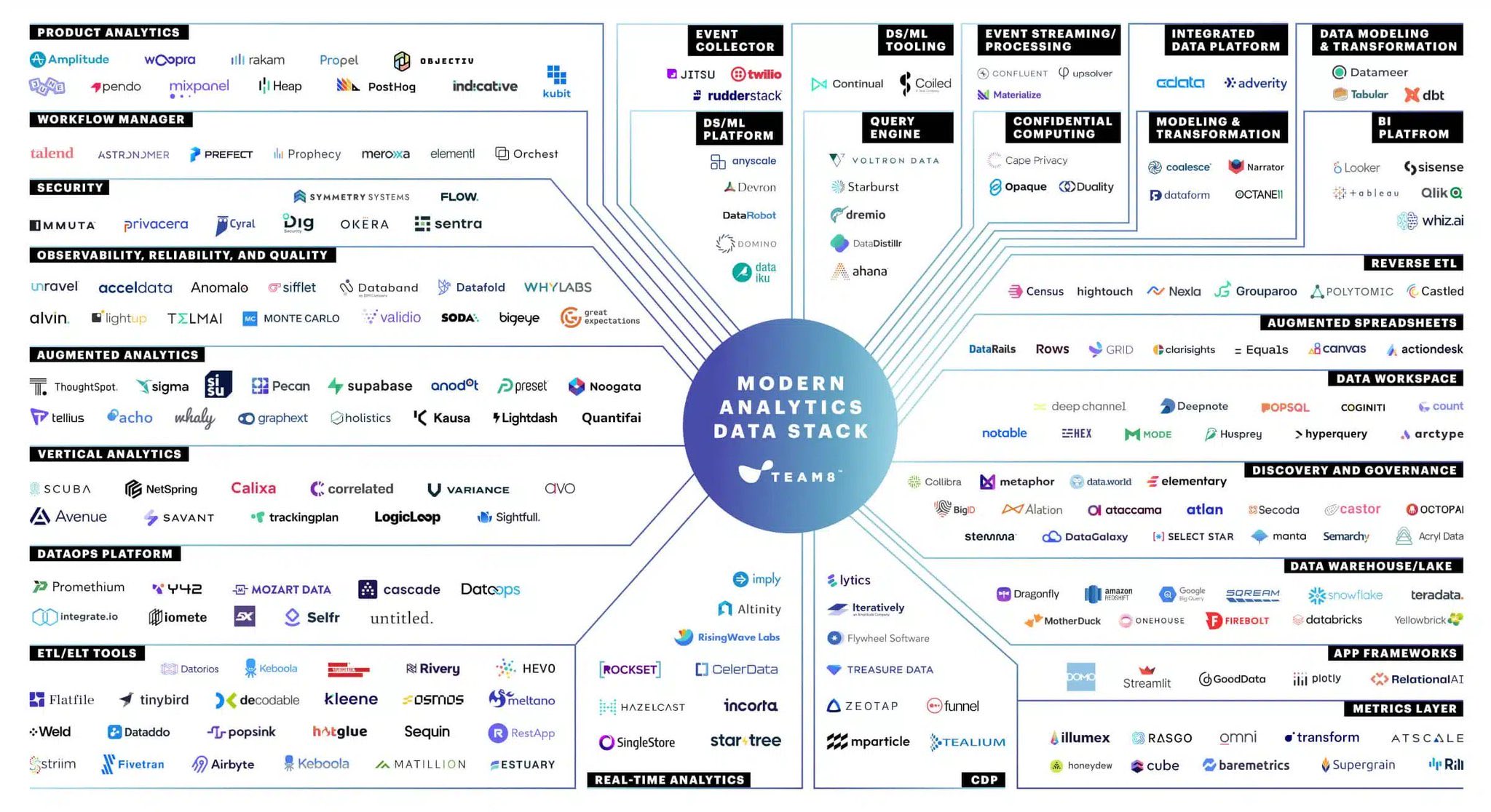

For many teams, the choice is often between rolling your own ingestion solution, cobbling together heavyweight third-party frameworks like Airbyte or Meltano, or paying a SaaS company that charges for ingestion by the row.

Rolling your own ingestion can be enticing, but you’re quickly faced with a range of requirements such as monitoring, retries, state management, and more. While open-source products such as Airbyte or Meltano are powerful in their own right, they have complex requirements to deploy, requiring many different services to operate. Airbyte, for example, will spin up 11 different services as part of the deployment. Fivetran, Stitch, and other managed solutions might offload that burden but can be cost-prohibitive.

We’ve seen a shift in the data engineering landscape away from heavy frameworks and libraries toward smaller, embedded solutions. Much of the hype around DuckDB comes from how light and simple it is to use, and the developer experience of that tool has set the bar for how we think about the rest of the categories in the data platform.

We’ve also seen some promising work in the ingestion space with tools like Sling, dlt, Steampipe, pg2parquet, CloudQuery, and Alto, offering small, simple libraries that are great for extracting and loading data. However, on their own, these tools still lack basic functionality that makes them suitable for production.

We at Dagster saw an opportunity: we already had a powerful orchestration platform that managed much of the complexity that the heavier ingestion frameworks duplicated. What if we could leverage that to provide a great foundation to build using these lighter-weight tools?

To help bridge the gap in ingestion, we’re introducing the Dagster Embedded ELT library: a set of pre-built assets and resources around lightweight frameworks that make it easy to ingest and load data.

What Makes Ingestion Difficult?

Ingestion alone may seem simple, but building a reliable ingestion pipeline is more than querying a database and saving the results. Let’s quickly look at some of the primary concerns that make ingestion difficult:

- Observability: it’s critical to understand how data is flowing through the system, from the number of rows ingested to being able to capture and store logs, as well as alerting on any failures

- Error Handling: if there’s one universal truth about data pipelines, they will fail in some fashion. Being able to handle errors through retries and workflow dependencies is essential.

- State Management: unless you want to pull all the data out of your database on every run, you’ll need a way to manage state as part of incremental loads

- Data Quality: the ability to validate before loading it, identify schema changes and unexpected values, and ensure constraints are met, and data consistency is a minimum requirement for many data teams.

- Type Conversions: everything from strings to JSON to date-time columns between two systems often will need some conversion logic.

- Schema Drift: As your source data structure changes, you will need to handle that in the destination system, either by adding or dropping columns or even changing column types.

A careful observer might notice that there are two categories of problems here. The first four, observability, error handling, state management, and data quality, are all the natural domains of orchestrators, while type conversions and schema drift are precisely what good ELT tools handle for you.

The problem is that absent a good orchestrator, ELT tools need to start bundling large components to be able to operate correctly. These siloed, purpose-specific orchestrators often cause much pain for data teams.

Unbundling the Orchestrator

At Dagster, we’ve seen great success with users with centralized orchestration in one place for their workflows across all data lifecycle stages. Instead of building a feature-incomplete orchestrator for every tool, data engineers are taking advantage of the full power of Dagster to build resilient pipelines.

In that spirit, we’re introducing dagster-embedded-elt: a framework for building ELT pipelines with Dagster through helpful pre-built assets and resources.

Our first release features the free open-source tool Sling, which makes it easy to sync data between data systems, be it a database, a data warehouse, or an object store. Check out the list of Sling connectors.

We’ve built abstractions around the tool so that syncing data from a production database to your data warehouse can be done in just a few lines of code.Our integration is build around Sling's replication configuration, which is a declarativeway to define how to sync data between databases and object stores.

To use our dagster-embedded-elt to sync data between systems, it's as simple creating a replication configuration, defining your sources and targets, and calling the replicate method.

First, we'll define a replication config. We can do this either in the native yaml supported by Sling, or as a Python dictionary:

Next, we define the source and destinations and create a Dagster Resource:

With the resource set, we then use the @sling_assets decorator and Dagster will read and parse your replication config, build your assets, and run your syncs.All in just a few lines of code.

Going forward, as we add more ingestion libraries, the design of this plugin architecture will make it simpler to swap solutions without rewriting your entire codebase. In building this, we had three key goals in mind:

1) Make it easier to replicate data from your operational databases such as Postgres and MySQL to your data lake and data warehouse.

2) Make it faster to get started on your core analytics platform without a slew of hosted SaaS solutions.

3) Remove cost anxiety when it comes to large production tables, so you don’t have to worry about how many rows you are syncing and whether a backfill will surprise you at your next invoice.

We are initially launching with Sling as a proof of concept, which gives you great options out-of-the-box. We’d love your feedback to help further develop and extend this feature to other integrations and will build this capability out based on feedback from Dagtser users.

The Modern Data Stack has given us many great tools over the past years. It has also created problems by adding a layer of complexity (and cost) on top of traditionally basic data engineering tasks. We hope that with this integration, Dagster can simplify data engineering again and hopefully bring some fun back along the way.

Learn more about Embedded ELT in the Dagster Docs.

Update: Dagster's embedded ELT capability has evolved since this article was published. You might want to check out:* Sling Out Your ETL Provider with Embedded ELT* Expanding the Dagster Embedded ELT Ecosystem with dltHub for Data Ingestion