Setting up your Dagster project the right way from day one saves you headaches later, makes your team more effective, and helps you scale.

Here's something I've learned from talking to hundreds of Dagster users: the teams that succeed aren't necessarily the ones with the most sophisticated pipelines. They're the ones whose project structure actually makes sense to their organization.

That might sound obvious, but you'd be surprised how often I see data teams end up with architectures that are the result of trying to build the plane while flying it.

Additionally, when it comes to AI-assisted development, a thoughtful project structure makes LLMs and other AI tools much more efficient with your codebase. The folders and files act as guardrails and add context, so you consume fewer tokens, maintain style and consistency, and get to the correct solution faster.

The Golden Rule: Mirror How Your Organization Talks About Data

Your Dagster project structure should reflect the language your organization already uses. If your business talks in terms of "customer data marts" and "financial reporting," your assets should be grouped that way. If your team often talks about "the dbt transformation layer" and "the Snowflake integration," then organize around those tools.

This isn't just about being user-friendly (though it is). It's about reducing cognitive overhead. When a product manager asks about "customer acquisition metrics," you want to point them to a clearly labeled group of assets, not send them hunting through a maze of folders named after internal engineering concepts.

Start Simple, Then Grow Organically

Every new Dagster project starts the same way: with one definitions.py file and big dreams. That's perfect. Don't overthink it in the beginning.

Here's the pattern that works:

Phase 1: Everything in one file (0-400 lines). Keep all your assets, schedules, and resources together. You can see everything at once, which makes debugging easier and helps you understand the relationships between different parts of your system.

Phase 2: Separate by abstraction (400+ lines). Once your single file gets unwieldy, split things out:

- assets.py

- schedules.py

- resources.py

Phase 3: Group by function or tool (multiple teams/domains). This is where you make the crucial decision about how to organize. I'll share a couple of patterns that I often see.

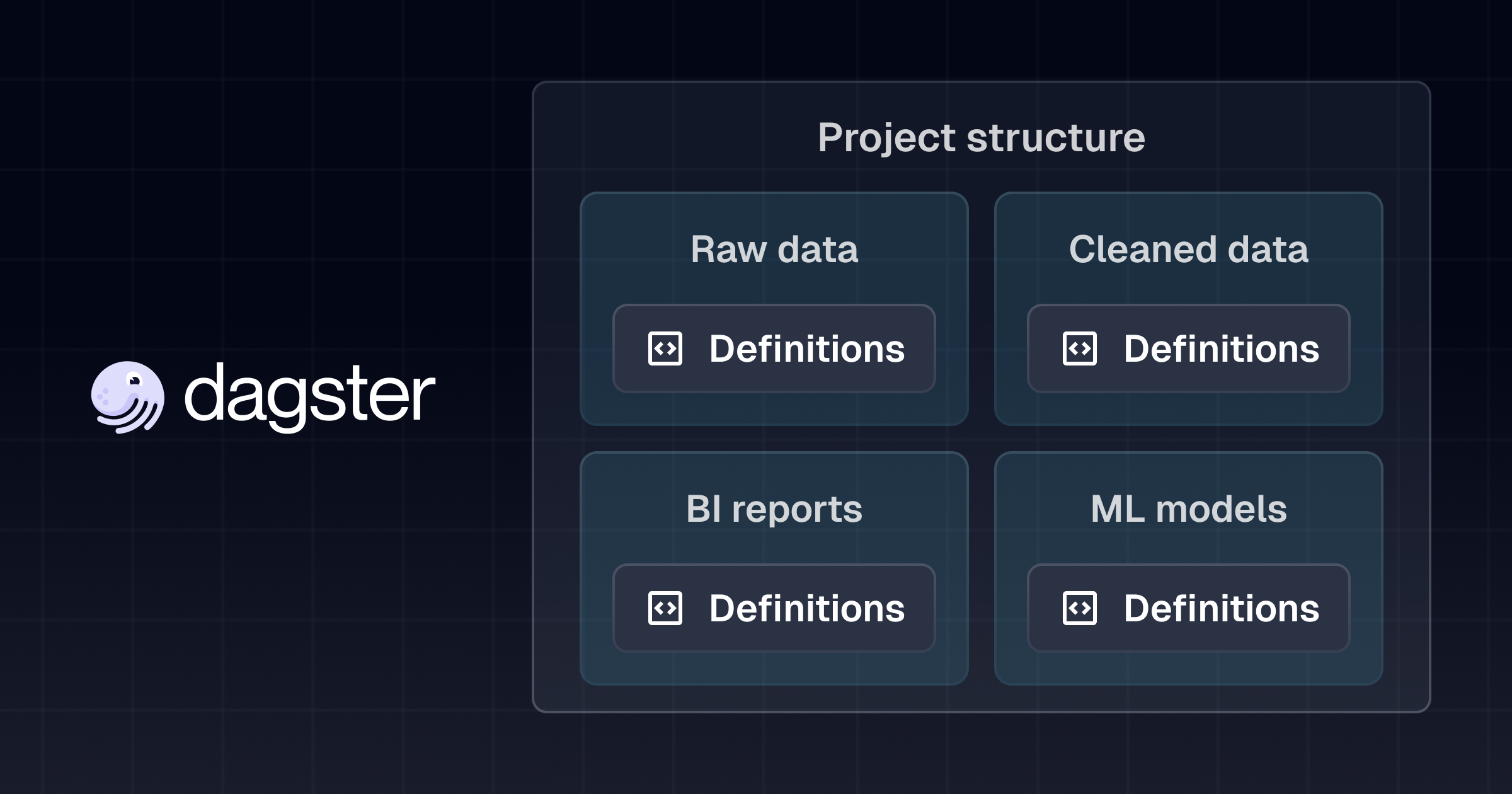

Pattern 1: Organize by Business Function (Medallion/Data Mart Style)

.
└── my-project
    ├── pyproject.toml
    ├── src
    │   └── my_project
    │       ├── raw_data/
    │       ├── cleaned/
    │       ├── analytical/
    │       ├── bi_reports/
    │       ├── ml_models/
    │       ├── forecasting/
    │       ├── __init__.py
    │       ├── definitions.py
    │       └── defs
    │           └── __init__.py
    ├── tests
    │   └── __init__.py
    └── uv.lock

This works when your stakeholders think in terms of data products and business outcomes. Your sales team doesn't care that you're using dbt. They care about "customer acquisition reports" and "revenue forecasting."

Use this approach when:

- Your stakeholders are less technical

- You follow a medallion architecture

- People talk about data marts and business domains

- You want to emphasize data products over engineering processes

We have an example repo using this architecture here.

Pattern 2: Organize by Tool/Integration

.
└── my-project
    ├── pyproject.toml
    ├── src
    │   └── my_project
    │       ├── snowflake/
    │       ├── dbt/
    │       ├── airflow_migration/
    │       ├── fivetran/
    │       ├── hex_reports/
    │       ├── ml_platform/
    │       ├── __init__.py
    │       ├── definitions.py
    │       └── defs
    │           └── __init__.py
    ├── tests
    │   └── __init__.py
    └── uv.lock

This makes sense when your conversations revolve around technical implementation. "How's the dbt integration going?" "Are we still having issues with the Fivetran connector?"

Use this approach when:

- Your stakeholders are technically savvy

- You're managing complex multi-tool integrations

- Your team frequently discusses specific tools and platforms

- You're in migration/modernization mode

We use this structure in our internal Dagster implementation, and we've made the repo public. You can check it out here.

The Monorepo Question

Monorepos are controversial, but for Dagster projects, they work really well.

Your Dagster code, dbt models, and deployment scripts are all tightly coupled. Why scatter them across different repositories where it's harder to see dependencies and coordinate changes?

Benefits of keeping everything together:

- Single source of truth for your entire data platform

- Simplified CI/CD - one pipeline for everything

- Better collaboration - data engineers and analytics engineers working in the same space

- Easier debugging - you can trace issues across the entire stack

If you have multiple teams with conflicting dependencies or completely different release cycles, separate code locations make more sense. Code locations can all live in the same repository, so you can still use a monorepo while keeping isolated environments. This blog post is a great resource for understanding how they work.

Code Locations: Start with One, Split When You Need To

Most teams can start with a single code location. It's simpler to manage and easier to understand.

Split into multiple code locations when:

- Different teams have conflicting package dependencies

- You need different deployment schedules

- Teams work on completely independent domains

- You're managing a large organization with distinct data platforms

Configure multiple locations using dagster_cloud.yaml (for Dagster+) or workspace.yaml (for OSS deployments).
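To make the OSS case concrete, here is a rough sketch that renders a `workspace.yaml` with one entry per code location. The `CodeLocation` class, the `render_workspace_yaml` helper, and every module name and path are hypothetical. The YAML keys (`load_from`, `python_module`, `module_name`, `location_name`, `executable_path`) are the ones I understand the workspace file to accept, so check them against the Dagster docs for your version.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CodeLocation:
    """One workspace.yaml entry (all example values are hypothetical)."""

    module_name: str
    location_name: str
    # Point at a separate virtualenv when teams have conflicting dependencies.
    executable_path: Optional[str] = None


def render_workspace_yaml(locations: list[CodeLocation]) -> str:
    """Render a workspace.yaml body with one python_module entry per location."""
    lines = ["load_from:"]
    for loc in locations:
        lines.append("  - python_module:")
        lines.append(f"      module_name: {loc.module_name}")
        lines.append(f"      location_name: {loc.location_name}")
        if loc.executable_path:
            lines.append(f"      executable_path: {loc.executable_path}")
    return "\n".join(lines) + "\n"


# Two teams in one repository, the second with its own Python environment.
print(
    render_workspace_yaml(
        [
            CodeLocation("analytics.definitions", "analytics"),
            CodeLocation("ml_platform.definitions", "ml_platform", "venvs/ml/bin/python"),
        ]
    )
)
```

In practice you would usually write the YAML by hand. The point of the sketch is only that each location is a small, independent entry, which is what lets teams split later without leaving the repository.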

dbt Project Placement

.
├── my-project
│   ├── pyproject.toml
│   ├── src
│   │   └── my_project
│   │       ├── snowflake/
│   │       ├── dbt/
│   │       ├── airflow_migration/
│   │       ├── fivetran/
│   │       ├── hex_reports/
│   │       ├── ml_platform/
│   │       ├── __init__.py
│   │       ├── definitions.py
│   │       └── defs
│   │           └── __init__.py
│   ├── tests
│   │   └── __init__.py
│   └── uv.lock
└── dbt_project/
    ├── models/
    └── dbt_project.yml

Keeping the dbt project in the same repository, alongside your Dagster project, makes it simple to reference dbt models in your Dagster assets and keeps related code changes together.
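One practical consequence of this layout is that Dagster code can locate the dbt project with a relative lookup instead of a hard-coded path. The helper below is a hypothetical sketch using only the standard library. It assumes the dbt folder is named `dbt_project`, as in the tree above, and sits somewhere above the file that calls it.

```python
from pathlib import Path
from typing import Optional


def find_dbt_project(start: Path, dirname: str = "dbt_project") -> Optional[Path]:
    """Walk up from `start` until a `dirname/dbt_project.yml` is found.

    Hypothetical helper: `dirname` matches the layout shown above and is not
    a Dagster or dbt convention.
    """
    for parent in [start, *start.parents]:
        candidate = parent / dirname
        if (candidate / "dbt_project.yml").is_file():
            return candidate
    return None
```

From inside `definitions.py` you could call `find_dbt_project(Path(__file__).resolve())` and hand the result to whichever dbt integration you use. The lookup returns `None` if the folder has been moved, which is a useful early signal that the repository layout changed.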

The Real Test: Can Someone New Navigate Your Project?

Here's how you know if your structure works: a new team member should be able to find what they're looking for without asking you.

If someone needs to update "customer churn predictions," can they immediately see which folder to look in? If they need to debug the "weekly revenue report," is it obvious where that logic lives?

Your project structure is documentation. Make it good documentation.

Getting Started

The beautiful thing about Dagster is that it grows with you. You don't need to architect for massive scale on day one. Start simple:

- One definitions file with your core assets

- Group assets using metadata that matches your business language

- Split gradually as complexity demands

- Always optimize for the people who will actually use this code

Remember: the best project structure is the one that makes your team more effective, not the one that looks impressive in a conference talk.

Your data platform should work for your organization, not the other way around.