Python's clean syntax makes it easy to jump into unfamiliar codebases, but this simplicity often masks the intricate world of packaging that confuses many developers.

Python is an incredibly user-friendly programming language. Its clean syntax and intuitive design make it easy for developers to jump into unfamiliar codebases and quickly start contributing. However, this ease of use can mask one of the more intricate aspects of the Python ecosystem: packaging.

Many developers adopt conventions like adding `__init__.py` files to directories or ignoring Python-generated artifacts such as `.egg-info` without fully understanding their purpose. It’s tempting to overlook these components, especially since packaging practices often appear inconsistent across libraries. Yet, gaining a deeper understanding of Python packaging is essential for maintaining and distributing clean, reliable code.

At Dagster we want people to have a great experience building with Python and to make building as easy as possible. That is why our create-dagster CLI is centered around an opinionated way to structure your Dagster and Python project so you don’t have to worry about packaging (the next post will talk more about the specifics of the Dagster Python package).

But it is still worth exploring why the project is structured the way it is. We will go through how Python packaging has evolved and its current state. Then we can deconstruct the package that `dg` creates and explain how all the pieces fit together. This will give you both insight into how to maintain your Dagster project and tips for working with Python packages in general.

Python Hierarchy

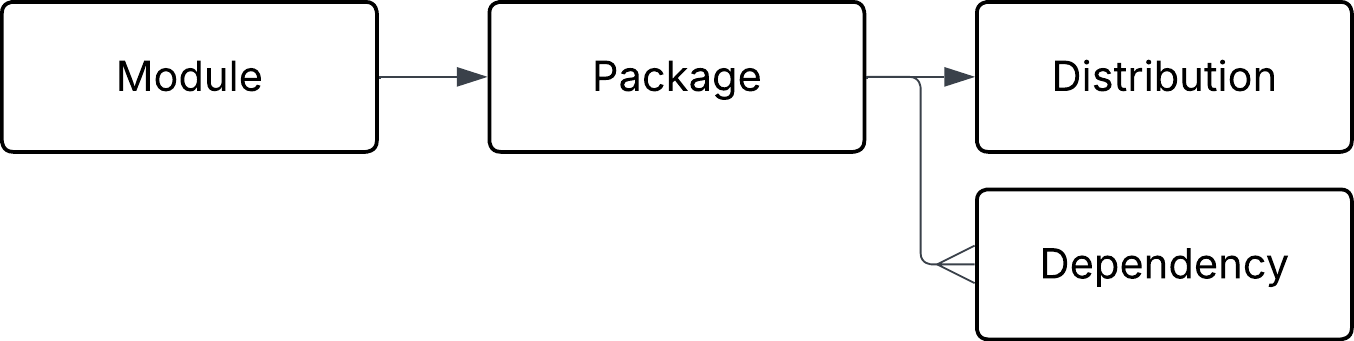

Before diving into the intricacies of Python packaging, it's helpful to understand the hierarchy of code organization in Python. You may have heard the terms module, package, dependency, and distribution. They are related and sometimes used interchangeably, but each plays a distinct role in Python packaging.

We covered this topic in more detail in a previous blog post, but as a quick recap: a module is a single Python file containing code (e.g., `foo.py`). A package is a collection of modules organized in a directory, typically marked by an `__init__.py` file (since Python 3.3 and PEP 420, a directory without this file can still be imported as an implicit namespace package). A distribution is the packaged version of your code, including one or more packages along with metadata used for installation and discovery (e.g., via PyPI). A dependency is any third-party package that your distribution requires in order to function correctly.
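To make the module and package distinction concrete, here is a minimal sketch that builds a throwaway source tree and inspects what Python sees after importing from it. The names `demo_regular_pkg`, `demo_namespace_pkg`, and `describe_hierarchy` are made up for illustration and are not part of any real project.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def describe_hierarchy() -> dict:
    """Build a throwaway source tree, import from it, and report what Python sees."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)

        # A regular package: a directory that contains __init__.py.
        regular = root / "demo_regular_pkg"
        regular.mkdir()
        (regular / "__init__.py").write_text("")
        (regular / "foo.py").write_text("VALUE = 1\n")  # a module

        # An implicit namespace package (PEP 420): a directory without __init__.py.
        namespace = root / "demo_namespace_pkg"
        namespace.mkdir()
        (namespace / "bar.py").write_text("VALUE = 2\n")  # a module

        sys.path.insert(0, str(root))
        importlib.invalidate_caches()
        try:
            foo = importlib.import_module("demo_regular_pkg.foo")
            bar = importlib.import_module("demo_namespace_pkg.bar")
            regular_pkg = sys.modules["demo_regular_pkg"]
            namespace_pkg = sys.modules["demo_namespace_pkg"]
            return {
                "foo_value": foo.VALUE,
                "bar_value": bar.VALUE,
                # Plain modules have no __path__; packages do.
                "foo_is_package": hasattr(foo, "__path__"),
                "regular_is_package": hasattr(regular_pkg, "__path__"),
                "namespace_is_package": hasattr(namespace_pkg, "__path__"),
                # Only the regular package is backed by an __init__.py file.
                "regular_has_init": getattr(regular_pkg, "__file__", None) is not None,
                "namespace_has_init": getattr(namespace_pkg, "__file__", None) is not None,
            }
        finally:
            sys.path.remove(str(root))


if __name__ == "__main__":
    for key, value in describe_hierarchy().items():
        print(f"{key}: {value}")
```

Both directories import as packages, but only the one with `__init__.py` has a file behind it. Neither is a distribution yet: that requires the metadata and build steps covered in the rest of this post.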

History of Python packaging

We now have the language to explore how Python packaging has evolved and to examine the tooling that enables Python developers to package and distribute code.

The Early Days (pre-1998)

Managing packages in Python's early years was a highly informal process. There was no standard way to distribute modules, so `.py` files were often shared via mailing lists, websites, or FTP servers. Without standard distribution mechanisms, every module had to be handled differently.

distutils (1998)

Recognizing the limitations of this ad-hoc approach, the Python community introduced `distutils` in 1998. It was later included in the standard library in Python 1.6 (2000), providing a framework for building, packaging, and installing Python modules.

This shift introduced the `setup.py` script. It defined metadata such as the module’s name and version, and enabled packages to be installed locally using: `python setup.py install`.

This was a significant step forward, but the process remained inflexible. `distutils` lacked native support for managing dependencies, and there was still no centralized package index, so distribution remained fragmented.

Note: `distutils` was officially removed from the standard library in Python 3.12.

PyPI (2003)

Efforts to create a centralized package index began with PEP 301 in 2002. This introduced the idea of including richer metadata with packages to facilitate search and installation.

By 2003, the Python Package Index (PyPI) was live, allowing users to upload and download packages. However, installation still required the use of `setup.py`.

setuptools (2005)

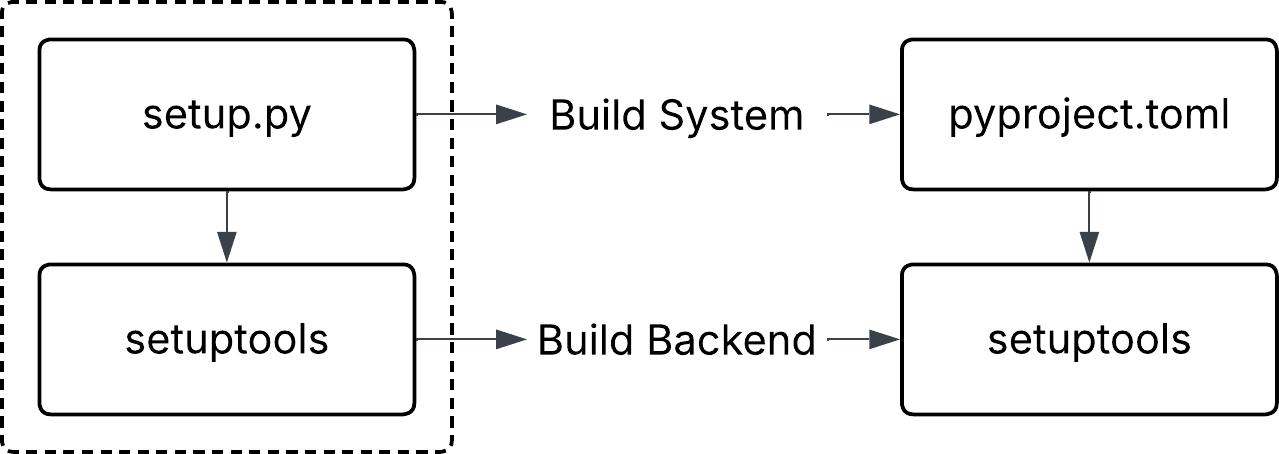

`setuptools` built on top of `distutils` and introduced innovations such as `install_requires` for declaring dependencies and support for `.egg` files. `setuptools` was an extremely ambitious project and attempted to solve both the build system and backend of packaging.

This all centered around the `setup.py` file as an imperative configuration that used the `setuptools` library to build, install, and package a Python project.

```python
from setuptools import setup, find_packages

setup(
    name="my_project",
    version="0.1.0",
    description="An example Python package",
    author="Your Name",
    packages=find_packages(),
    install_requires=["requests"],
    entry_points={
        "console_scripts": [
            "mycli=myproject.cli:main"
        ],
    },
)
```

When you run `python setup.py install`, `setup.py` is executed and triggers a series of build steps. An `.egg` file (or an unzipped `.egg` directory) is installed into `site-packages`. It contains the source files (`.py` or `.pyc`), any compiled extensions, and an `.egg-info` directory holding the package's metadata.

pip (2008)

There were still usability issues around downloading and installing packages from PyPI, which led to the introduction of `pip` as a replacement for `easy_install` (usually bundled with setuptools and now deprecated).

Unlike `easy_install`, `pip` provided:

- A simpler, more consistent CLI (`pip install package`).

- The ability to uninstall packages.

- Better handling of dependency trees and versioning.

Over time, `pip` became the recommended installer for Python and has been bundled with Python since version 3.4 (2014).

virtualenv (2008)

Around the same time, as installing packages became easier, a new problem emerged: dependency conflicts across projects.

Previously, packages were installed globally into the Python interpreter, making it difficult to manage multiple applications with different requirements on the same system.

To solve this, developers began using `virtualenv`, which creates isolated environments with their own site-packages directory to keep project-specific dependencies separate from the global Python environment.
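As a rough illustration of that isolation, the sketch below uses the standard library's `venv` module (a close relative of `virtualenv`, not `virtualenv` itself) to create an environment and locate its private `site-packages` directory. The helper names and the `project-a-env` directory are hypothetical, and `with_pip=False` is chosen only to keep the example fast and offline.

```python
import tempfile
import venv
from pathlib import Path


def create_isolated_env(env_dir: Path) -> Path:
    """Create a virtual environment and return its site-packages directory."""
    # with_pip=False keeps the sketch fast and offline; real projects usually want pip.
    venv.create(env_dir, with_pip=False)
    candidates = list(env_dir.glob("lib/python*/site-packages"))  # POSIX layout
    candidates += list(env_dir.glob("Lib/site-packages"))  # Windows layout
    return candidates[0]


def demo() -> dict:
    """Create an environment in a temporary directory and report what it contains."""
    with tempfile.TemporaryDirectory() as tmp:
        env_dir = Path(tmp) / "project-a-env"
        site_packages = create_isolated_env(env_dir)
        return {
            # pyvenv.cfg is the marker file that identifies a virtual environment.
            "has_config": (env_dir / "pyvenv.cfg").is_file(),
            "has_site_packages": site_packages.is_dir(),
            "site_packages_inside_env": env_dir in site_packages.parents,
        }


if __name__ == "__main__":
    print(demo())
```

Anything installed into that environment lands in its own `site-packages`, so two projects can depend on different versions of the same library without touching the global interpreter.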

This made multi-project development manageable and was a huge leap forward. However, it didn’t solve the problem of dependency resolution, which tools like `pip-tools` and, later, opinionated solutions like `poetry` or `hatch` aimed to address.

wheel format (2012)

The Wheel format (`.whl`) is the modern standard for distributing built Python packages, replacing the `.egg` format previously used by `setuptools`. Introduced with PEP 427, it aimed to standardize package distribution and decouple the installation from the tightly coupled `.egg` and `easy_install` workflow.

pyproject.toml (2016)

While Python packaging was becoming more powerful, it was also becoming more fragmented and complex. The situation was made harder because `setup.py` was Python code that had to be executed to determine a project's metadata. Without a standardized way of declaring this information, new tools had to reimplement their own metadata and install flows.

Introduced in PEP 518 and expanded in PEP 517 and PEP 621, the `pyproject.toml` file decouples project metadata and build configuration from executable code by providing a static, declarative interface.

```toml
[build-system]
requires = ["setuptools", "wheel"]
build-backend = "setuptools.build_meta"

[project]
name = "myproject"
version = "0.1.0"
dependencies = ["requests"]
```

This allows tools like `pip`, `build`, `tox`, or IDEs to:

- Understand project metadata without executing Python.

- Automatically install build requirements into isolated build environments.

- Support multiple build backends (e.g., `setuptools`, `flit`, `poetry-core`, `hatchling`).

Although `pyproject.toml` replaces `setup.py` for configuration, most projects still use `setuptools` as their build backend. Separating configuration from the backend makes Python packaging more modular and interoperable, enabling a healthier tool ecosystem.

Modern Packaging

This still isn't an exhaustive list of all the developments in the Python packaging ecosystem, but you can begin to see why it's often considered confusing. Over time, different tools have attempted to solve different parts of the problem, sometimes overlapping, sometimes not.

Thankfully, things have started to settle in recent years. A major reason for this is the growing adoption of `pyproject.toml` as a central, interoperable configuration file. It enables tools to share a common interface for building, installing, and managing packages.

There is still some overlap between tools, but it typically occurs within specific layers of the Python packaging ecosystem. For example, `tox` manages package installation (using `pip`) and virtual environment creation (via `virtualenv`), but it does not act as a build system or backend. Instead, it delegates those responsibilities to whatever is declared in `pyproject.toml`.

The same is true for modern package managers like `uv`, which are designed to be fast, reliable frontends that interoperate with `pyproject.toml`. They handle tasks such as dependency resolution, environment management, and syncing lockfiles, but they still rely on the build backend (e.g., `setuptools`, `flit`, or `hatchling`) to perform the actual build process.

Dagster Python Package

Hopefully, this gives you a clearer understanding of the building blocks that make up Python packaging. In the next post, we’ll explore what happens when you initialize a new project in Dagster, including the default project structure, the layout of the generated `pyproject.toml`, and how to customize or extend it to suit your specific use case.

We'll also share some practical examples and highlight internal tools and workflows we use to supercharge our Dagster projects.