What Is a Big Data Platform?

A big data platform is an integrated set of hardware and software tools designed to collect, store, process, and analyze massive volumes of structured, semi-structured, and unstructured data that traditional systems cannot handle efficiently.

These platforms use distributed computing and often cloud infrastructure to manage the volume, velocity, and variety of big data, enabling organizations to extract actionable insights for decision-making, fraud detection, predictive analytics, and more.

Big data platforms typically integrate some or all of the following components:

- Data storage: Uses distributed systems like Hadoop Distributed File System (HDFS) or cloud-based options (e.g., Amazon S3, Google Cloud Storage) to store large datasets.

- Data ingestion: The entry point for information into a big data platform, ensuring that raw data from multiple sources is collected, transported, and made available for storage and processing

- Processing engines: Employs frameworks like Apache Spark to process data at high speeds, often in real-time or batch modes.

- Data wrangling and management: Tools that help organize, cleanse, and transform raw data into a format that is understandable and useful for extracting insights.

- Analytics tools: Includes software for performing complex queries and various types of analytics, such as business intelligence and machine learning.

- User interfaces: Provide ways for users to access, visualize, and interact with the data and analytical results.

As organizations continue to generate and collect more data, these platforms remain central to uncovering actionable insights, driving innovation, and maintaining competitive advantage.

How Big Data Platforms Work: Key Components and Functions

Data Storage

Modern data solutions commonly employ distributed file systems like HDFS or object stores such as Amazon S3 to store both structured and unstructured data. These systems are designed to scale horizontally, meaning storage capacity can be increased simply by adding more hardware or virtual resources.

Efficient storage architecture also includes mechanisms for data replication, compression, and backup, which enhance fault tolerance and optimize disk usage. Data is typically organized to support fast access patterns for downstream processing, including partitioning and indexing strategies.

Data Ingestion

Data ingestion is the entry point for information into a big data platform, ensuring that raw data from multiple sources is collected, transported, and made available for storage and processing. Sources can include transactional databases, IoT devices, application logs, or third-party APIs.

Ingestion tools are designed to handle high-volume and high-velocity streams, capturing both batch uploads and continuous real-time feeds without data loss. Ingestion pipelines typically support connectors and adapters that standardize heterogeneous input formats, transforming them into consistent schemas before passing them downstream. Features like buffering, checkpointing, and replay ensure that data is not lost during failures or network interruptions.

Processing Engines

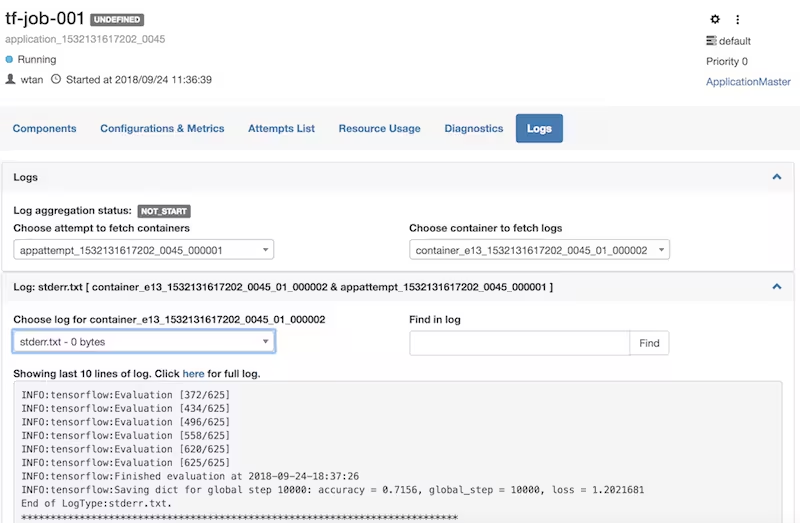

Processing engines are responsible for executing data transformations, aggregations, and analysis at scale. Tools like Apache Spark and Hadoop MapReduce distribute computational tasks across a cluster, breaking down large workloads into smaller jobs that run in parallel. This approach dramatically accelerates processing times for tasks such as ETL, ad-hoc querying, and advanced analytics compared to single-node solutions.

These engines often support both batch and real-time data processing modes. Batch processing suits large, periodic data transformations, while real-time or streaming engines address scenarios such as fraud detection and instant data feeds. Support for multiple programming languages and APIs enables organizations to develop pipelines in Python, Java, SQL, or Scala.

Data Wrangling and Management

Data wrangling and management are critical for preparing raw, messy inputs into reliable datasets ready for analytics. This stage often involves data profiling, deduplication, validation, and enrichment. Automated pipelines apply rules and transformations to clean records, align schema inconsistencies, and normalize formats such as dates, currencies, and categorical fields. Effective wrangling ensures downstream processes operate on accurate, consistent data, reducing errors in reporting or modeling.

Management extends beyond transformation to include cataloging, versioning, and metadata tracking. Platforms typically provide centralized data catalogs, lineage tracing, and policy enforcement to help teams understand where data originated, how it has been transformed, and who has access. This governance layer makes it easier to maintain compliance, support schema evolution, and enable reproducible workflows while ensuring that high-quality data remains available to analytics and machine learning applications.

Analytics Tools

Analytics tools within big data platforms provide the capabilities required to transform raw data into actionable insights. These tools range from interactive SQL query engines to advanced statistical modeling, data visualization, and machine learning libraries. Their integration within big data platforms means analyses can be performed directly on data at scale, eliminating bottlenecks caused by data movement between systems. T

Beyond querying and visualization, analytics components often support complex event processing and predictive analytics workflows tailored for specific industries. Built-in support for leading data science frameworks allows practitioners to develop and test models using real-world datasets within the platform, reducing time-to-value.

User Interfaces

User interfaces (UIs) in big data platforms serve as the bridge between complex backend systems and users with varying technical skill levels. They can range from graphical dashboards and SQL workbenches to code-based notebooks. Intuitive UIs empower data scientists and analysts to interact with data, define workflows, monitor jobs, visualize results, and manage datasets without writing low-level infrastructure code.

Besides day-to-day data operations, UIs enable collaboration, audit trails, and documentation, making it easier for teams to share insights and maintain reproducibility. Advanced permissioning and customizable dashboards allow organizations to tailor access and views according to users’ roles or business needs.

Big Data Platforms vs. Data Lakes vs. Data Warehouses

A data lake is primarily a central repository for storing raw, unstructured, and semi-structured data at scale. Data lakes focus on ingesting a wide range of formats without enforcing strict schema, making them suitable for exploration and data science experimentation.

Data warehouses are optimized for structured data, supporting fast SQL analytics on cleaned, modeled datasets. Traditional data warehouses enforced schema-on-write, offering high performance for business intelligence workloads but less flexibility for handling raw or unstructured data. Modern data warehouses also make it possible to store unstructured data, providing improved flexibility, a pattern known as data lakehouse.

Big data platforms extend beyond both concepts by integrating storage, processing, analytics, management, and user interface layers into a cohesive ecosystem. Unlike standalone data lakes or data warehouses, a big data platform combines real-time and batch processing, governance tools, and analytics engines, allowing organizations to handle varied data workloads end-to-end.

Some platforms blur the lines, offering “lakehouse” architectures that merge the flexible storage of data lakes with the performance of data warehouses, catering to both data engineering and analytical use cases within a single solution.

Learn more in our detailed guide to:

- data warehouse platform (coming soon)

- data lake platform (coming soon)

Notable Big Data Platforms

1. Apache Hadoop

Apache Hadoop is an open-source framework for distributed storage and large-scale data processing across clusters of commodity hardware. It addresses the challenge of managing big data by dividing datasets into blocks, distributing them across nodes, and processing them in parallel using the MapReduce model.

Key features include:

- HDFS (Hadoop Distributed File System): A scalable, fault-tolerant storage system that replicates data across multiple nodes and supports high throughput access to large files.

- MapReduce engine: Distributes processing tasks across nodes, enabling parallel execution of data transformation and analysis jobs.

- YARN (Yet Another Resource Negotiator): Manages cluster resources and schedules jobs dynamically, supporting multiple application types beyond MapReduce.

- Modular architecture: Includes Hadoop Common (shared utilities), and allows integration with tools like Hive, HBase, Pig, and Spark.

Data locality optimization: Minimizes network traffic by processing data on or near the node where it is stored.

2. Apache Spark

Apache Spark is an open-source, unified analytics engine built for large-scale data processing across distributed clusters. It was designed to overcome the performance limitations of Hadoop MapReduce by supporting in-memory processing, iterative computations, and a rich set of APIs for various programming languages.

Key features include:

- In-memory computing: Uses memory caching to speed up iterative and interactive data processing, often outperforming disk-based engines like Hadoop MapReduce.

- Resilient distributed datasets (RDDs): Core abstraction for fault-tolerant, parallel processing with functional programming constructs like map, filter, and reduce.

- Dataset and DataFrame APIs: High-level APIs for working with structured and semi-structured data, with support for compile-time type safety in some languages.

- Spark SQL: Enables querying data using SQL or the DataFrame API; integrates with JDBC/ODBC and supports multiple languages (Python, Scala, Java, R).

Structured streaming: Supports scalable, fault-tolerant stream processing using the same APIs as batch jobs, simplifying the lambda architecture.

.avif)

3. Snowflake

Snowflake is a fully managed, cloud-native data platform for analytics, artificial intelligence, data engineering, and application development across a unified architecture. Operating as a multi-cloud solution, Snowflake simplifies data workflows by eliminating the need for infrastructure management, while enabling collaboration, elastic scalability, and high-performance processing.

Key features include:

- Fully managed platform: No infrastructure setup, tuning, or maintenance; users focus on building data products, not managing servers.

- Multi-cloud & cross-region: Runs natively on AWS, Azure, and Google Cloud with the ability to share and operate data across regions and providers.

- Separation of storage and compute: Enables independent scaling of workloads, optimizing cost and performance for analytics, ETL, and machine learning tasks.

- AI & ML capabilities: Integrates native support for developing, fine-tuning, and deploying machine learning models and large language models using Snowflake Cortex AI.

Data sharing & collaboration: Securely share live data with partners, customers, or across business units without data duplication or complex integration.

.avif)

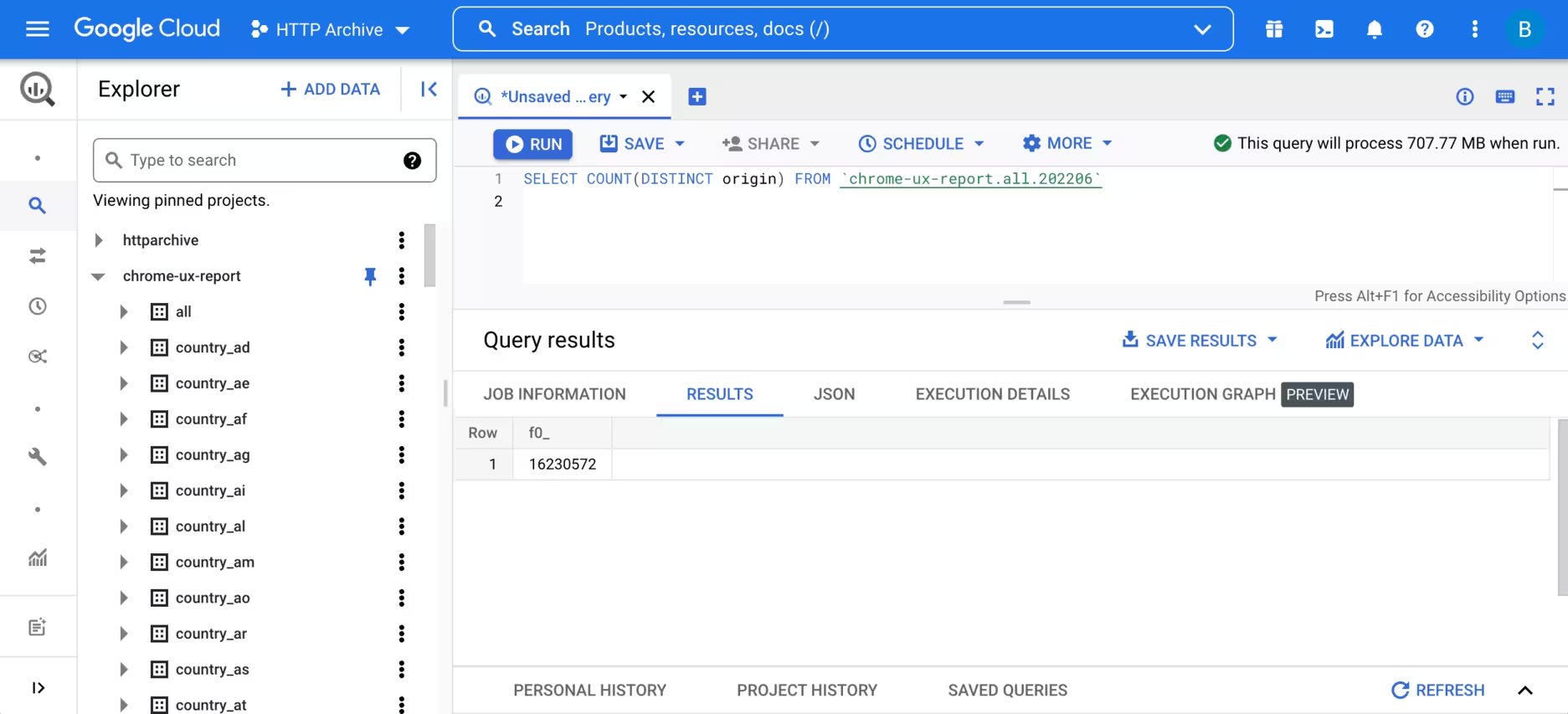

4. Google BigQuery

Google BigQuery is a managed, serverless, and AI-ready data platform to handle large-scale analytics across structured and unstructured data. It separates compute and storage, allowing independent scaling of resources without the need for manual provisioning or maintenance. It supports standard SQL, Python, and other languages.

Key features include:

- Serverless architecture: Fully managed platform with no infrastructure to manage, compute and storage scale independently to meet demand.

- High-speed performance: Query terabytes in seconds and petabytes in minutes with Google’s petabit-scale network and distributed compute engine.

- Multi-format & multi-source support: Analyze structured, semi-structured, and unstructured data across BigQuery, Cloud Storage, Bigtable, Spanner, and Google Sheets using federated queries.

- Machine learning (BigQuery ML): Train and deploy models using SQL without moving data; supports classification, regression, clustering, and time series forecasting.

Geospatial and search analytics: Natively supports geographic data types and functions, as well as semantic search to explore metadata and lineage.

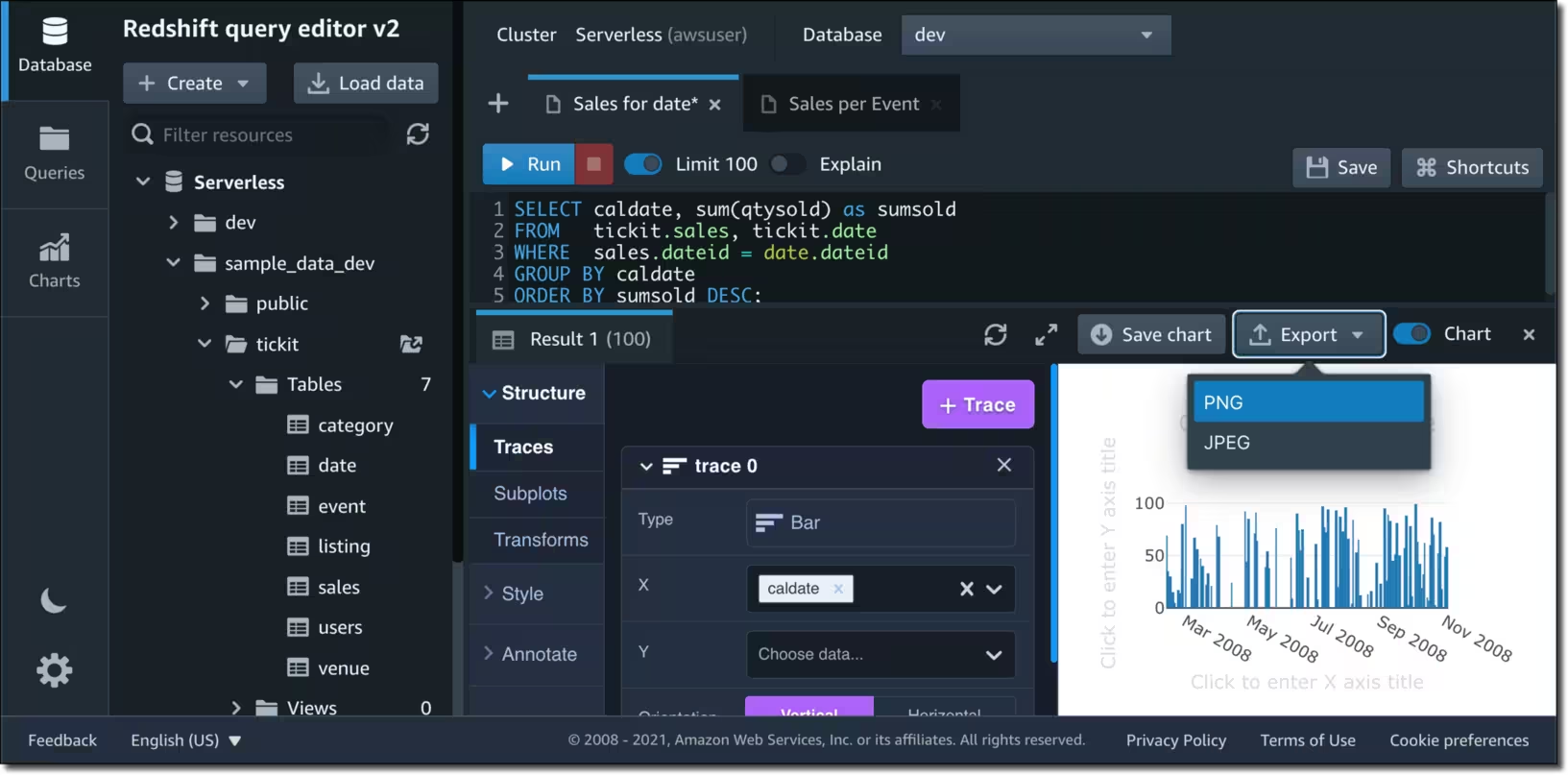

5. Amazon Redshift

Amazon Redshift is a fully managed data warehouse service for fast analytics on large volumes of structured data. Built on a massively parallel processing (MPP) architecture, Redshift distributes workloads across multiple nodes, delivering high performance through columnar storage, data compression, and advanced caching mechanisms.

Key features include:

- Massively parallel processing (MPP): Distributes queries and data across multiple nodes for fast performance at scale.

- Columnar storage & compression: Optimizes I/O performance and reduces storage footprint by storing data in columnar format.

- Serverless & elastic scaling: Automatically scales compute resources or allows manual cluster resizing with minimal downtime.

- Redshift spectrum: Query exabytes of data directly in Amazon S3 without moving or transforming it first.

Federated queries: Combine and analyze live data across Redshift, S3, and operational databases like Aurora and RDS.

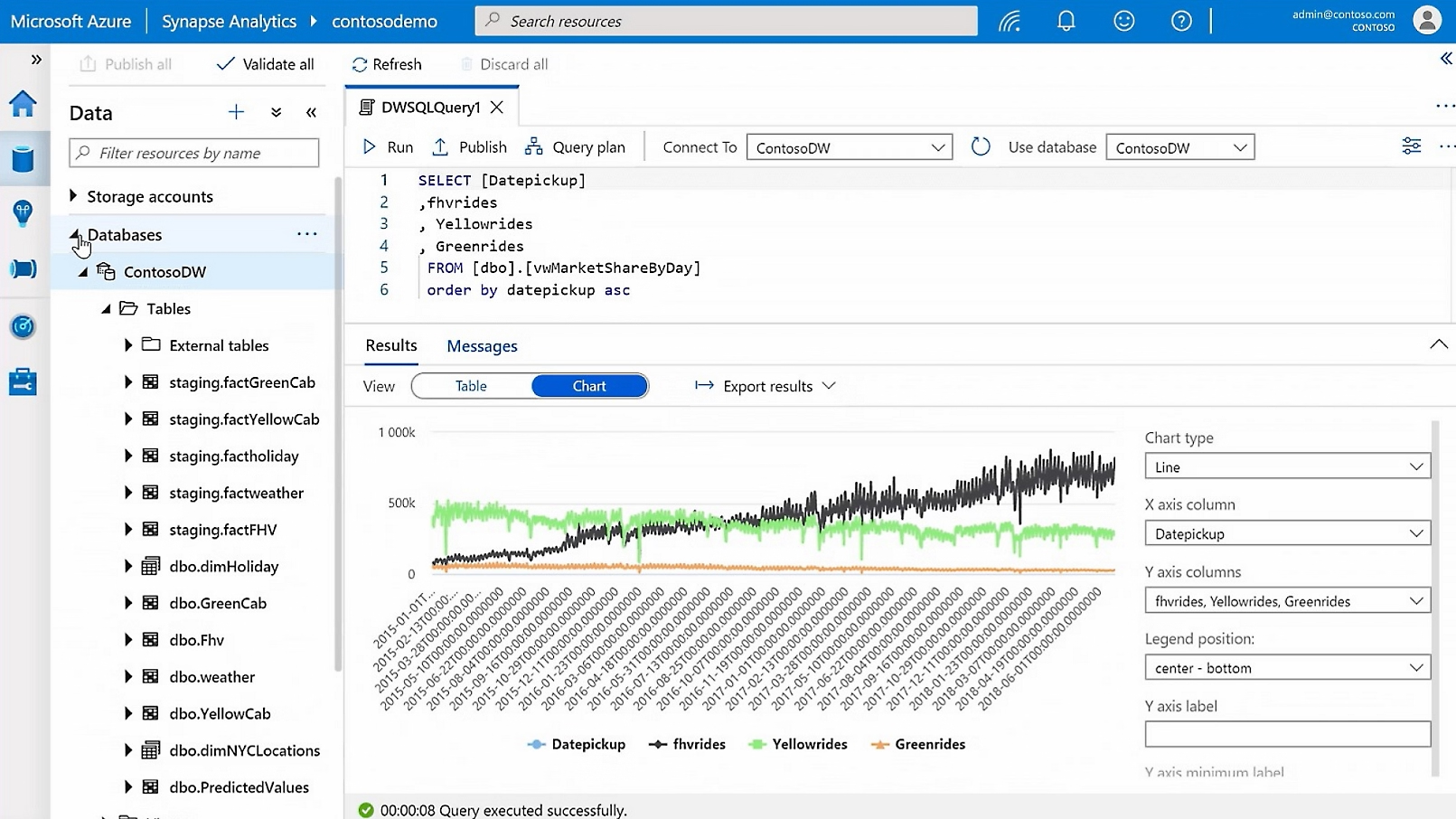

6. Microsoft Azure Synapse

Azure Synapse is a unified analytics platform that integrates data warehousing, big data, data exploration, and data integration into a single environment. It combines enterprise-grade SQL analytics with Apache Spark, Data Explorer for log analytics, and Azure Data Factory for ETL pipelines, all integrated with services like Power BI, Azure Machine Learning, and Cosmos DB.

Key features include:

- Unified SQL engine: Synapse SQL supports both serverless and provisioned (dedicated) models, enabling scalable, distributed T-SQL queries across structured and semi-structured data.

- Apache Spark integration: Native Spark support with fast startup, autoscaling, and ML capabilities via SparkML and Azure Machine Learning integration; supports Delta Lake and .NET for Spark.

- Lakehouse access: Use SQL or Spark to directly query Parquet, CSV, JSON, and TSV files stored in Azure Data Lake; define shared tables accessible by both engines.

- Data integration: Full integration with Azure Data Factory to ingest from over 90 sources, build no-code ETL/ELT pipelines, and orchestrate complex workflows.

Data explorer runtime (preview): Optimized engine for log and time series analytics with interactive KQL queries and automatic indexing of semi-structured data.

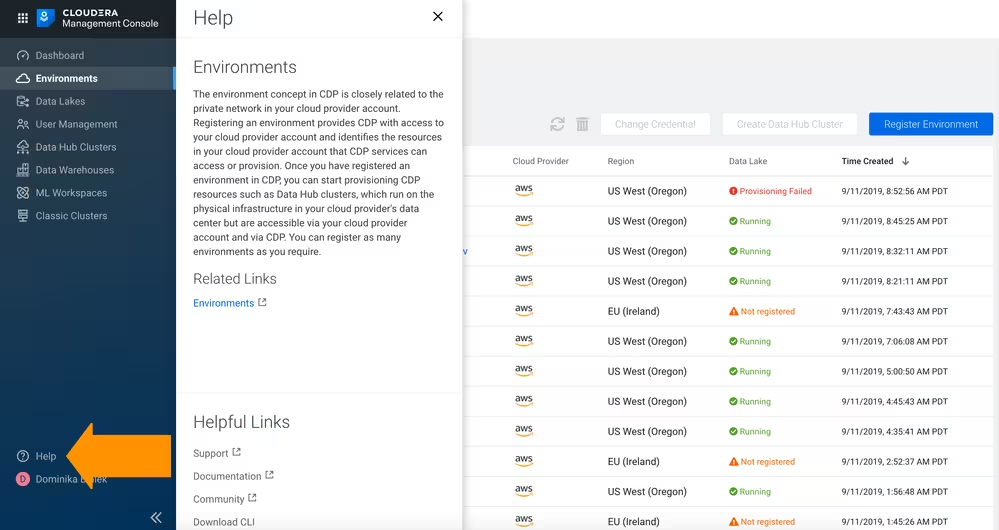

7. Cloudera

Cloudera is a hybrid data and AI platform for enterprises that need unified analytics and governance across cloud, data center, and edge environments. It enables organizations to manage and analyze data anywhere it resides (public cloud, private cloud, or on-premises) with consistent security, governance, and control.

Key features include:

- Hybrid and multi-cloud architecture: Run data workloads seamlessly across AWS, Azure, GCP, private clouds, and on-premises infrastructure.

- Unified data fabric: Connect, govern, and analyze structured and unstructured data from multiple sources through a single integrated platform.

- Open data lakehouse: Built on Apache Iceberg, Cloudera delivers an open, high-performance lakehouse that supports both analytical and AI workloads at scale.

- Scalable data mesh support: Manage decentralized, domain-oriented data architectures with enterprise-grade governance and interoperability.

Portable data analytics: Easily move users, applications, and data between environments without rewriting code or compromising security.

Evaluation Criteria for Choosing a Big Data Platform

Scalability and Performance

Platforms supporting horizontal scaling allow organizations to add resources as needed, reducing the likelihood of bottlenecks or downtime. Performance hinges not only on raw compute power and throughput, but also on architectural factors like data partitioning, memory management, and parallel processing capabilities.

Evaluation should include real-world benchmarking on representative data workloads, considering metrics like query latency, ingestion speed, and job completion time. Effective support for both batch and streaming scenarios is also significant, especially for organizations with hybrid analytics requirements.

Ease of Integration with Enterprise Systems

Big data platforms need to fit into existing enterprise IT landscapes, which typically include various databases, business applications, workflow engines, and analytic tools. Effective platforms offer connectors and APIs for seamless data ingestion, export, and bidirectional communication with core enterprise systems. Strong support for industry standards and out-of-the-box integrations reduces the effort and cost of implementation.

Beyond technical integration, platforms must align with enterprise data governance, compliance, and security workflows. They should support identity management systems, role-based access controls, and provide audit trails for all data interactions.

Cost Models and Pricing Transparency

The economics of big data platforms can significantly impact their suitability for an organization. Most modern platforms offer pay-as-you-go, subscription, or hybrid pricing models that scale with compute, storage, and user needs. Transparent cost structures are essential for avoiding unexpected expenses, especially as data volumes grow.

Cost assessment should consider not just immediate expenses, but also total cost of ownership, including maintenance, support, training, and any required infrastructure upgrades. Cloud-native offerings may reduce capital expenses but can introduce unpredictable charges for data egress, API calls, or unused resources.

Security, Compliance, and Governance

Big data platforms are often entrusted with sensitive or regulated data, making robust security a non-negotiable requirement. Core features should include encryption at rest and in transit, fine-grained access controls, audit logs, and real-time threat detection. Platforms must also support compliance with regional and industry-specific regulations such as GDPR, HIPAA, or SOC 2, offering features like data masking, retention management, and automated reporting.

Effective data governance extends beyond technical controls, including data lineage tracking, metadata management, and support for classification and policy enforcement. Centralized and automated governance tools help organizations manage risk, maintain data quality, and demonstrate accountability.

Vendor Support and Community Strength

The level of support, whether from a commercial vendor or the open-source community, can determine how successfully an organization operates and evolves its big data platform over time. Responsive vendor support means faster resolution of incidents, access to updates, and guidance on best practices, all of which are critical in dynamic production environments.

Long-term viability should be assessed by examining the platform’s release cadence, contribution trends, documentation quality, and activity on forums or repositories. Organizations should favor solutions with vibrant user and partner networks, as these increase resilience to vendor lock-in or product discontinuation.

Ingesting and Managing Big Data with Dagster

Dagster provides a modern data orchestration framework that simplifies how teams ingest, transform, and manage large-scale datasets across complex environments. It offers strong abstractions for building reliable data pipelines and ensures that every step of the data lifecycle is observable, testable, and maintainable.

Dagster treats data ingestion as a first-class concern by allowing engineers to define clear asset boundaries and ingestion logic using a declarative model. These assets represent meaningful units of data and make it easier to track upstream sources, dependencies, and transformations. Dagster’s scheduling and sensor features allow pipelines to react to events, perform incremental ingestion, or run at regular intervals, which supports both batch and streaming workloads.

For data management, Dagster provides built-in tools for tracking metadata, schema evolution, and lineage across the entire platform. The asset graph gives teams a complete view of how data flows through the system, helping them identify bottlenecks, diagnose failures, and understand the impact of changes before they occur. Integration with storage systems and compute engines is supported through a flexible I/O manager interface, which allows teams to connect to data lakes, warehouses, object stores, or custom data platforms.

Observability is central to Dagster’s design. Each execution produces structured logs, metadata events, and materialization records that give operators insight into performance, data quality, and resource usage. These features help organizations enforce governance policies, validate data expectations, and maintain trust in the accuracy of downstream analytics.

Dagster’s approach enables engineering and analytics teams to collaborate effectively. By unifying ingestion, transformation, governance, and monitoring in a single platform, Dagster reduces operational complexity and provides a strong foundation for managing big data workloads at scale.