What Are Data Pipeline Orchestration Tools?

Data pipeline orchestration tools are software solutions designed to automate, manage, and monitor complex data workflows or pipelines. These tools ensure the reliable and efficient movement and transformation of data from various sources to their intended destinations, often involving tasks like ingestion, ETL/ELT, quality checks, and reporting.

Orchestration tools provide a central interface for scheduling, triggering, monitoring, and troubleshooting data workflows. This coordination ensures that dependencies are respected, tasks execute in the right sequence, and operational requirements, like retries or notifications, are handled automatically. Such orchestration is essential for ensuring data quality, reliability, and consistency in large-scale or distributed data systems.

This is part of a series of articles about data platform

Key Functions and Capabilities of Data Pipeline Orchestration Tools

Workflow / Pipeline Definition

Defining workflows is fundamental to orchestration. Tools typically allow users to express workflows as directed acyclic graphs (DAGs), code, or configuration files, outlining task dependencies and execution order. This approach abstracts away the complexity of handling these dependencies explicitly in application code.

Many orchestration tools support programmatic, declarative, or UI-driven approaches, tailoring to different user preferences and technical skills. A well-defined workflow ensures modularity and consistency across data pipelines. This fosters reusability and better maintainability, as code changes and improvements can be reflected across workflows.

Scheduling and Triggering

Scheduling and triggering are core aspects of orchestration platforms. Schedulers enable pipelines to run at specified intervals or times, such as every midnight or at regular minute intervals. This automates repetitive data processes, ensuring timely data delivery without manual intervention.

Triggers can be based on external events, such as file arrivals, API callbacks, or table updates, providing flexibility for event-driven pipelines. Advanced tools support a mix of time-based and event-based scheduling, allowing for more responsive and optimized workflows. Conditional triggers and dependency management enable execution based on the completion or status of upstream tasks.

Execution Engine

The execution engine is responsible for running pipeline tasks reliably and efficiently. It handles task queuing, resource allocation, and parallelization. Modern engines can distribute workload across multiple nodes, scale according to demand, and recover from temporary failures automatically, reducing the operational burden on data engineers.

An effective execution engine also integrates with existing compute environments such as on-premises clusters, Kubernetes, or cloud services. This integration ensures that orchestrated pipelines run close to where data is generated or stored, optimizing performance and cost. It may also support configuration of concurrency, retries, and custom execution logic.

Monitoring, Logging, and Observability

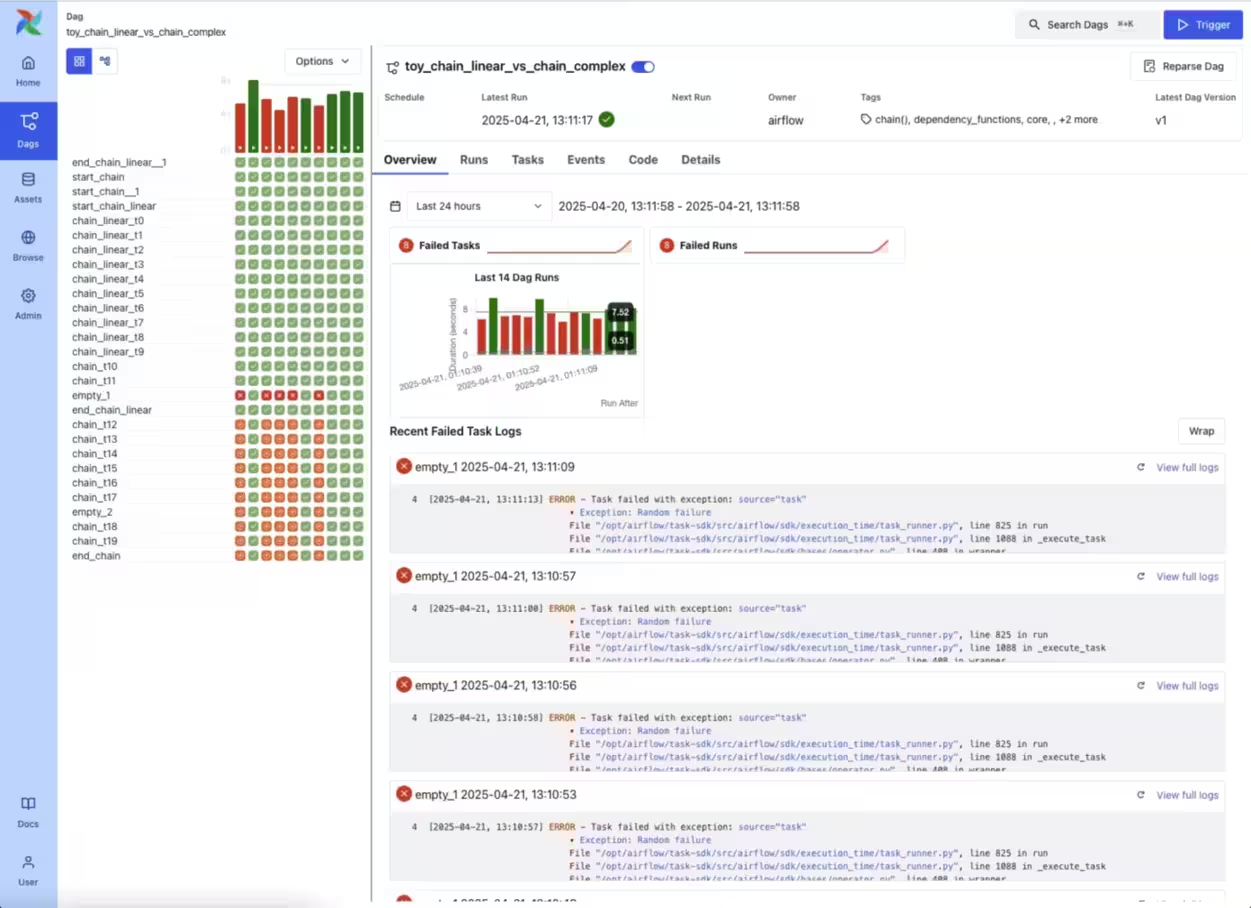

Monitoring and logging features provide real-time visibility into pipeline execution, making it easier to detect and resolve issues. Orchestration tools offer dashboards that show pipeline status, individual task progress, and historical runs. Detailed logs can help troubleshoot failed tasks, enabling engineers to identify root causes and resolve issues quickly.

Observability extends beyond simple logs and metrics by including alerting for failures, latency, or performance degradation. Integration with external monitoring tools allows organizations to centralize their incident response and maintain compliance with operational standards.

Error Handling

Robust error handling ensures that transient issues or infrastructure outages do not cause data loss or workflow failure. Orchestration tools allow specification of retry logic, where failed tasks can be re-run automatically with configurable delay and maximum attempt policies. Notification mechanisms can alert operators or stakeholders when failures occur that require manual intervention.

Advanced error handling includes support for conditional branching, so downstream tasks can react differently to upstream failure or success. This flexibility allows teams to implement compensating actions, skip tasks, or trigger alternative workflows in response to errors.

Data Lineage

Data lineage tracks how data flows through pipelines and how each transformation affects it. Orchestration tools increasingly offer lineage features, capturing metadata about task execution, input-output relationships, and dependencies between tables or datasets.

This visibility is crucial for debugging, auditability, and regulatory compliance. Lineage capabilities also support impact analysis. When data definitions or code change, teams can assess which downstream assets may be affected. By understanding source-to-destination pathways, organizations can trust the provenance and quality of analytics outputs.

Security, Access Control and Governance

Security features enforce access controls over who can view, edit, or execute workflows. Orchestration tools support user authentication, role-based access control (RBAC), and integration with enterprise identity providers. This minimizes risk of unauthorized changes or accidental data exposure.

Governance functions provide audit trails tracking who modified, triggered, or approved pipeline runs. Combined with lineage and logging, these controls support regulatory requirements and internal security best practices. Automated policy enforcement helps prevent privilege escalation or compliance drift over time.

Notable Data Pipeline Orchestration Tools

1. Dagster

.avif)

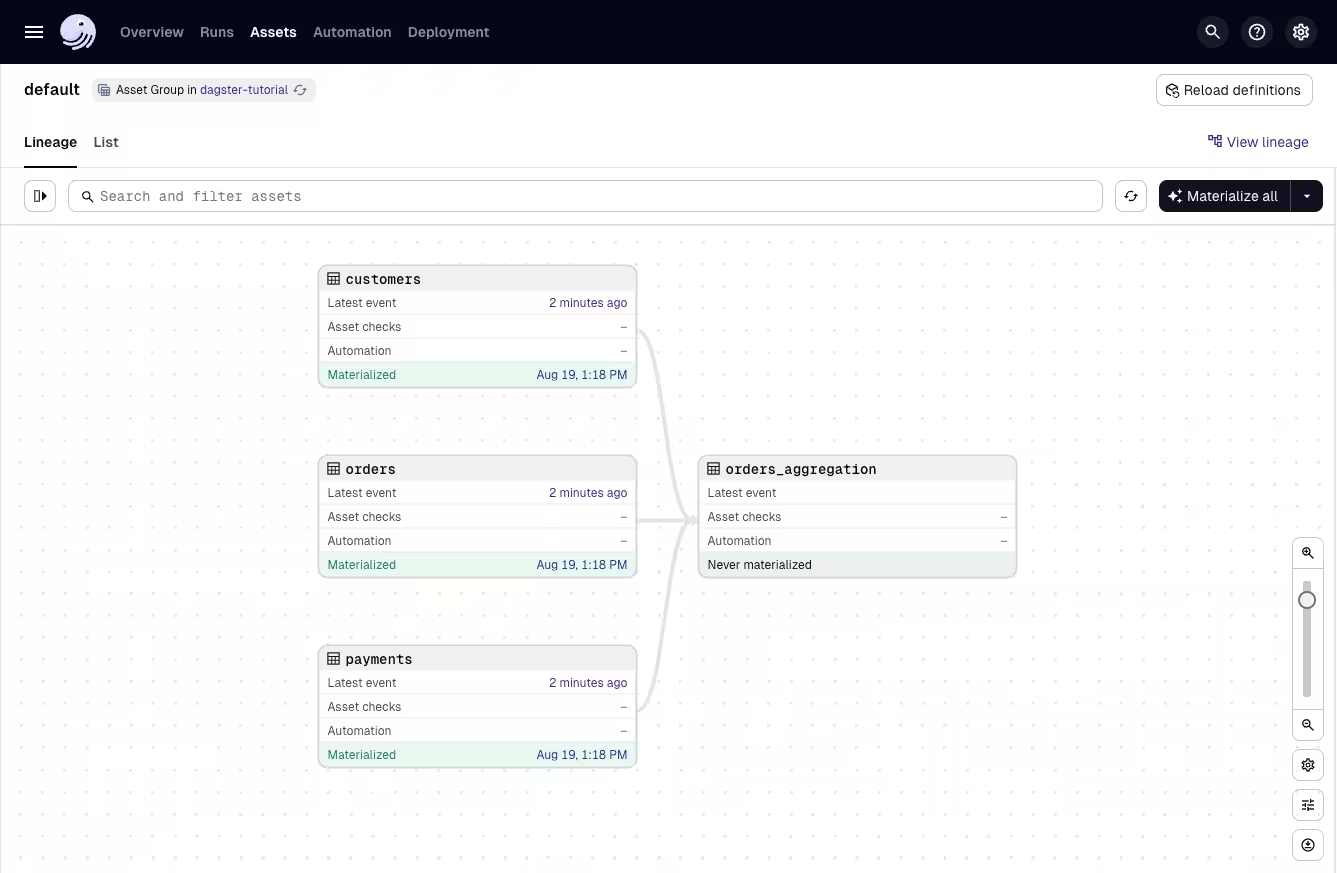

Dagster is an open-source data orchestrator built for modern data engineering teams that need reliable, maintainable, and testable pipelines. It emphasizes software engineering best practices and introduces software-defined assets that allow teams to represent data pipelines around the datasets they produce instead of traditional task-centric DAGs. This approach improves clarity, observability, and long-term maintainability across complex data ecosystems.

Key features include:

- Asset-centric design: Pipelines are modeled around data assets with explicit dependencies, enabling first-class lineage, reproducibility, and a clear understanding of how data moves through the platform.

- Built-in quality enforcement: Strong typing, schema checks, and runtime validation create predictable pipelines and reduce data quality issues before they reach consumers.

- Developer-friendly workflow: Local-first development, Python-native APIs, and testable components give engineers a fast, iterative environment for building and refining pipelines.

- Comprehensive observability: A modern UI provides detailed views of lineage, run status, partitions, metadata, and freshness, helping teams diagnose issues quickly and maintain trustworthy data services.

- Scalable orchestration options: Supports schedules, sensors, event triggers, partitions, and large-scale backfills across multiple execution environments such as Kubernetes, serverless, and local compute.

Ecosystem-wide integrations: Works seamlessly with major data engineering tools including dbt, Snowflake, BigQuery, Spark, Kafka, DuckDB, and other systems used across ingestion, transformation, analytics, and machine learning workflows.

2. Apache Airflow

.avif)

Apache Airflow is an open-source platform for authoring, scheduling, and monitoring workflows using Python code. Originally developed at Airbnb to manage complex internal workflows, it allows users to define workflows as directed acyclic graphs (DAGs), where each node represents a task and the edges define dependencies.

Key features include:

- Python-based DAGs: Workflows are defined in Python, allowing use of standard programming constructs and libraries.

- Flexible scheduling: Supports both time-based scheduling (e.g., daily, hourly) and event-based triggers.

- UI for monitoring: Built-in web interface for visualizing DAGs, tracking task execution, and managing workflow runs.

- Extensible and modular: Users can create custom operators, sensors, and hooks to integrate with various data systems.

Open source ecosystem: Backed by a large community and extended by third-party providers with tools for deployment and monitoring.

3. Prefect

%20(1).avif)

Prefect is a data orchestration tool to support dynamic, Python-native workflows without forcing developers into rigid DAG structures. It adapts to the way teams already write code, turning Python scripts into resilient, observable workflows with minimal changes. Prefect is designed to handle complex, changing workflows across ETL, ML pipelines, and operational automation.

Key features include:

- Dynamic workflow engine: Prefect's orchestration adapts to real-time data conditions and execution paths, avoiding stalls caused by static DAGs.

- Python-native interface: Workflows are written in plain Python with minimal annotations, eliminating the need to rewrite existing code or adopt a new DSL.

- Built-in observability: Provides clear error messages, audit logs, and real-time visibility into flow runs to simplify debugging and monitoring.

- Scalable deployment: Flows can be deployed independently in isolated environments with customizable infrastructure, supporting hybrid and multi-cloud strategies.

Flexible execution model: Resources are attached to individual jobs rather than environments, supporting diverse workloads and improving efficiency.

.avif)

4. Flyte

%20(1).avif)

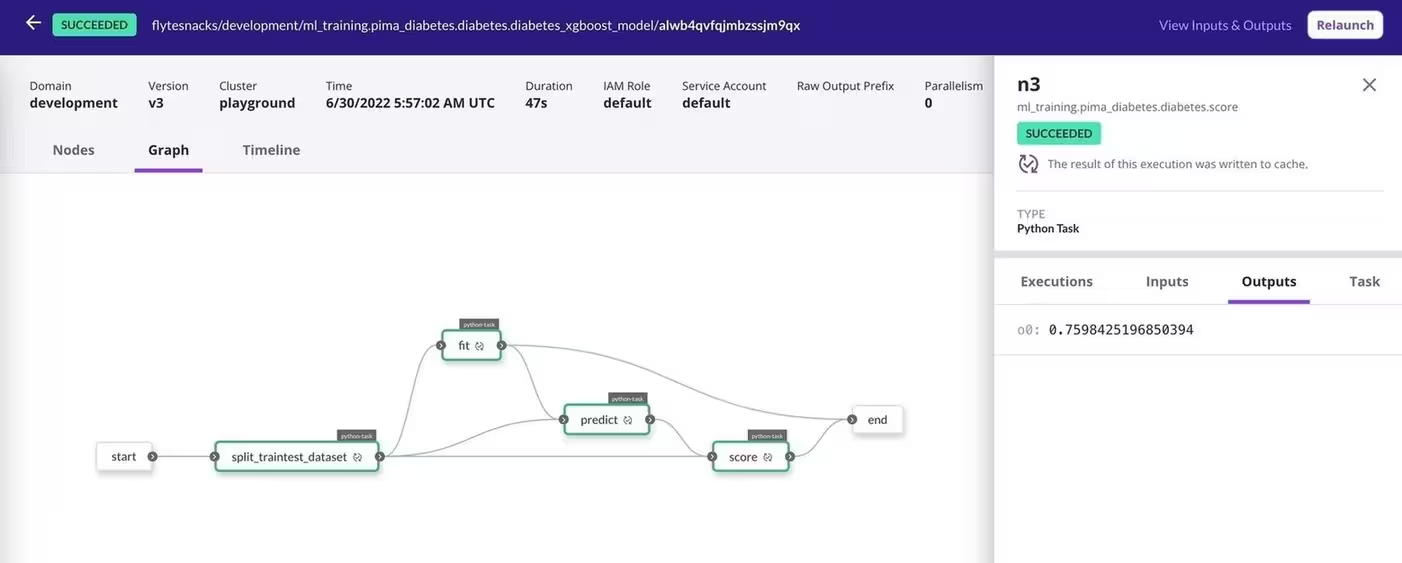

Flyte is a Kubernetes-native orchestration platform built for creating and managing reliable, scalable, and crash-proof workflows, especially in AI/ML, data, and analytics use cases. It allows developers to define workflows as standard Python code and run them at scale with minimal overhead.

Key features include:

- Kubernetes-native scaling: Tasks are dynamically scaled across compute nodes, leveraging Kubernetes for elastic, cost-efficient execution with no idle resources.

- Crash-proof reliability: Failure recovery with automatic retries, checkpointing, and state tracking keeps workflows running even when systems fail.

- Python-native workflow definition: Author workflows using a clean Python SDK without needing a new DSL or framework; supports dynamic branching and decision-making.

- Run locally, deploy remotely: Developers can debug and test locally, then deploy the same code to remote clusters for production without modification.

Data lineage: Automatically tracks inputs, outputs, and dependencies across tasks and workflows for better traceability and auditability.

5. Mage

%20(1).avif)

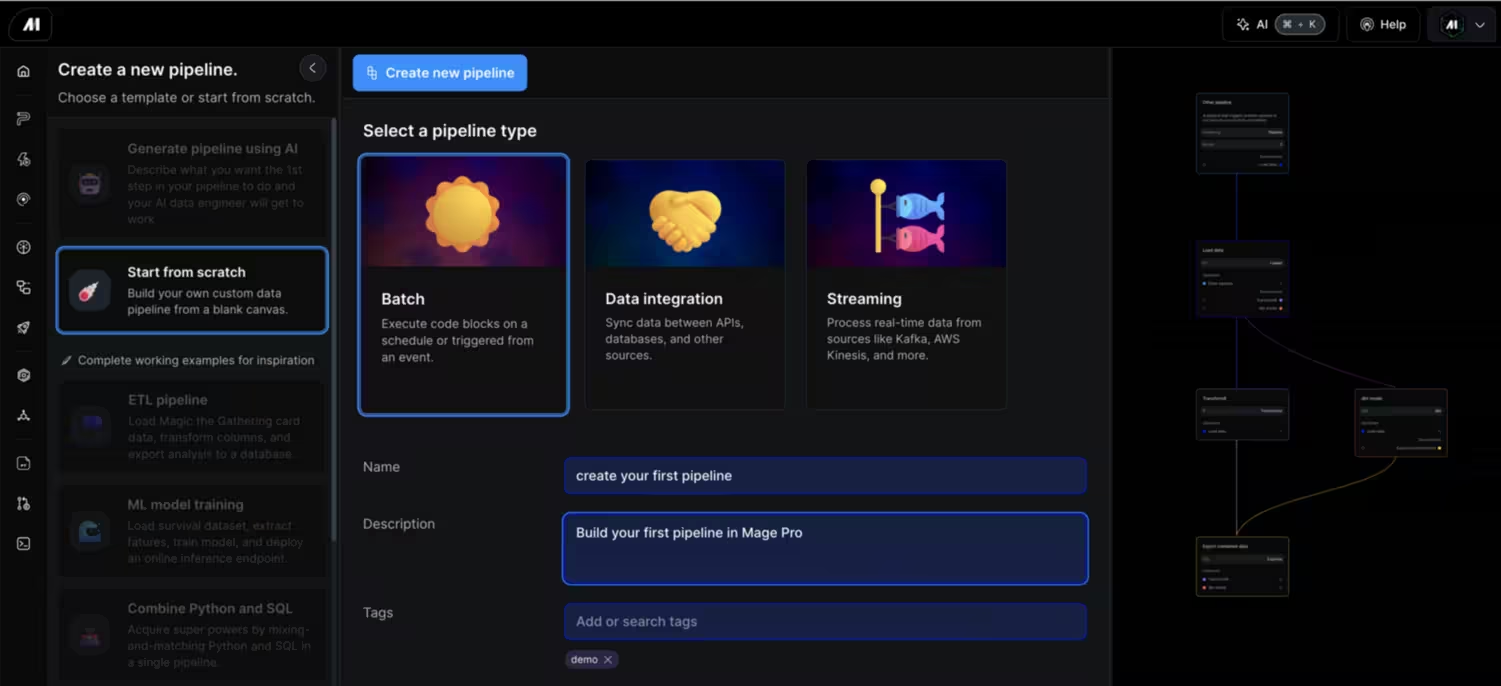

Mage is a data orchestration and integration platform that combines the interactive experience of notebooks with modular, production-ready code. It allows data teams to build, run, and monitor batch or streaming pipelines using Python, SQL, or R within a unified development environment.

Key features include:

- Hybrid development interface: Combines a notebook-style UI with modular file-based code, enabling rapid experimentation and clean production code in one system.

- Multi-language support: Write pipeline steps in Python, SQL, or R, and mix them within a single pipeline for flexibility.

- Real-time and batch processing: Build pipelines that handle both real-time streaming and batch data workloads.

- Interactive code execution: Instantly preview the output of each block in the pipeline; no need to wait for full DAG execution.

Orchestration and scheduling: Manage triggers, monitor runs, and set schedules directly through the platform with full observability.

6. Metaflow

.avif)

Metaflow is a human-centric Python framework that simplifies building, scaling, and deploying data science and machine learning workflows. Originally developed at Netflix, Metaflow provides a unified interface across the full stack of infrastructure (data, compute, orchestration, and versioning) so that data scientists can focus on modeling and experimentation.

Key features include:

- Unified infrastructure API: Abstracts away complexity by providing a single, high-level Python API to manage data, compute, orchestration, and deployment across the ML lifecycle.

- Local-to-cloud workflow: Prototype locally and scale to cloud environments (AWS Batch, Step Functions, Kubernetes, etc.) without rewriting code.

- Production-ready orchestration: Offers support for orchestrators with high availability and failure recovery, making it suitable for business-critical deployments.

- Automatic versioning: Tracks code, parameters, and artifacts across runs, enabling reproducibility, collaboration, and auditing without extra tooling.

Flexible data handling: Supports access to external data sources and manages data movement internally, ensuring consistent data access across environments.

.avif)

Conclusion

Data pipeline orchestration tools play a critical role in managing the growing complexity of modern data systems. By automating task execution, enforcing dependencies, and ensuring observability, these tools reduce operational overhead and improve reliability. They enable teams to build scalable, maintainable workflows that align with evolving business and data requirements, supporting faster decision-making and more efficient data operations across the organization.