What Are Data Pipeline Tools?

Data pipeline tools automate the process of collecting, moving, transforming, and loading data from various sources into a single destination for analysis. These tools maintain data consistency and integrity, especially as organizations scale and data ecosystems increase in complexity.

Efficient data pipeline tools help teams orchestrate workflows, schedule recurring jobs, and manage dependencies across multiple data processing steps. By abstracting away the complexities involved in building and maintaining custom integrations and transformation logic, data pipeline tools enable data engineers and analysts to focus on analytics and strategic initiatives rather than infrastructure concerns.

Types of Data Pipeline Tools

Data pipeline tools can be classified into the following types, although these often overlap.

Cloud-Native Solutions

Cloud-native data pipeline tools are services built to leverage the scalability, reliability, and elasticity of cloud infrastructure. These solutions are typically managed by cloud providers and offer integration with the provider’s ecosystem, such as storage, compute, and analytics services. They are built for high availability and automatic scaling, which lets organizations handle variable or growing data volumes without manual intervention.

These tools often offer a pay-as-you-go pricing model and minimal administrative overhead, making them a popular choice for organizations prioritizing flexibility and quick deployment. However, this convenience may come with a trade-off in customization and control compared to open-source or on-premises solutions. Data sovereignty considerations are also a factor, as organizations must be mindful of where and how data is processed and stored.

Open-Source Frameworks

Open-source data pipeline frameworks offer transparency, flexibility, and community-driven support. Common examples like Apache Airflow and Apache NiFi provide workflow orchestration, scheduling, and extensibility through plugins or custom code. These frameworks can be deployed on-premises or in the cloud, allowing organizations full control over configuration and security.

Adopting open-source tools can reduce licensing costs and avoid vendor lock-in. However, they typically require engineering expertise for setup, monitoring, and maintenance. Updates, debugging, and scaling are also the team’s responsibility, which can increase operational complexity compared to fully-managed solutions.

Learn more in our detailed guide to data pipeline framework

Low-Code Solutions

Low-code data pipeline tools simplify data integration and processing by offering graphical interfaces, prebuilt connectors, and drag-and-drop workflow design. These platforms allow business analysts and non-technical users to configure and deploy pipelines without needing to write extensive code, accelerating development cycles and reducing dependency on specialized data engineering talent.

While low-code tools are accessible and easy to use, they might offer limited flexibility for highly customized or complex transformation logic. Organizations must evaluate whether the tool’s abstraction meets their data needs or if it imposes constraints on integration, scalability, or automation.

Enterprise-Grade Platforms

Enterprise-grade data pipeline platforms cater to large-scale, complex data environments with strict requirements for security, compliance, and integration. These solutions provide features for governance, auditing, role-based access control, and pipeline versioning. They often include support for hybrid or multi-cloud deployments, ensuring operational flexibility to meet varying regulatory and business needs.

Implementing enterprise platforms frequently involves higher upfront costs, vendor contracts, and sometimes bespoke integrations. In return, organizations benefit from mature support, reliability SLAs, advanced analytics, and integration with a broad range of enterprise systems. These platforms are favored by organizations that need assurances around uptime, compliance, and service continuity.

Related content: Read our guide to data pipelines architecture

Key Features to Look for in Data Pipeline Tools

Scalability and Performance

Data pipelines must handle growing volumes, velocity, and variety of data as business needs evolve. Scalability means the tool can accommodate higher throughput by adding resources or balancing workloads across distributed systems. This includes the ability to process data in batches, real time, or both, depending on analytic requirements.

Performance is equally critical. Tools should efficiently process data with low latency, handle peak loads, and avoid bottlenecks that could delay insights. Evaluate whether the platform supports parallel processing, resource elasticity, and workload isolation, as these directly affect the reliability and speed of data operations under changing demands.

Monitoring, Alerting, and Logging

Robust monitoring capabilities are crucial to track pipeline health, data movement, and system resource utilization in real time. Effective alerting mechanisms notify teams of failures, slowdowns, or data quality issues before they impact downstream applications or decision-making.

Comprehensive logging provides actionable information for troubleshooting, auditing, and performance optimization. Structured logs allow engineers to analyze historical trends, debug errors, and demonstrate compliance for regulatory requirements. Together, these features help ensure consistent and uninterrupted data processing.

Data Quality and Reliability

Ensuring data quality requires built-in validation, cleaning, and enrichment capabilities at each stage of the pipeline. Tools should provide mechanisms to detect, report, and recover from missing or corrupt data, so analytics and reporting remain accurate and trustworthy.

Reliability involves designing for automatic retries, checkpointing, and idempotent operations to prevent data loss or duplication. Pipeline tools that support versioning and rollback of jobs or configurations further enhance the reliability of your data delivery processes. Look for tools with proven track records of uptime and consistency in production environments.

Security and Compliance

Data pipeline tools should incorporate granular security features such as encryption in transit and at rest, secure credential management, and fine-grained access controls. This is particularly important when handling personally identifiable information (PII), financial data, or other sensitive records.

Compliance is non-negotiable for organizations operating under regulations like GDPR, HIPAA, or SOX. Evaluate whether the platform supports auditing, logging, data retention policies, and region-specific deployment options to ensure both legal and industry-specific requirements are consistently met.

Cost Optimization

Cost optimization features help organizations control spending while scaling data infrastructure. Tools that provide usage-based billing, detailed cost reporting, and auto-scaling capabilities allow better budgeting and flexibility as workloads fluctuate over time.

Additionally, the ability to optimize resource allocation, such as shutting down idle services or leveraging spot instances, ensures you aren’t overpaying for underutilized capacity. Systems that offer tiered storage options and granular cost forecasts make it easier to plan for future growth without risk of bill shock.

Notable Data Pipeline Tools

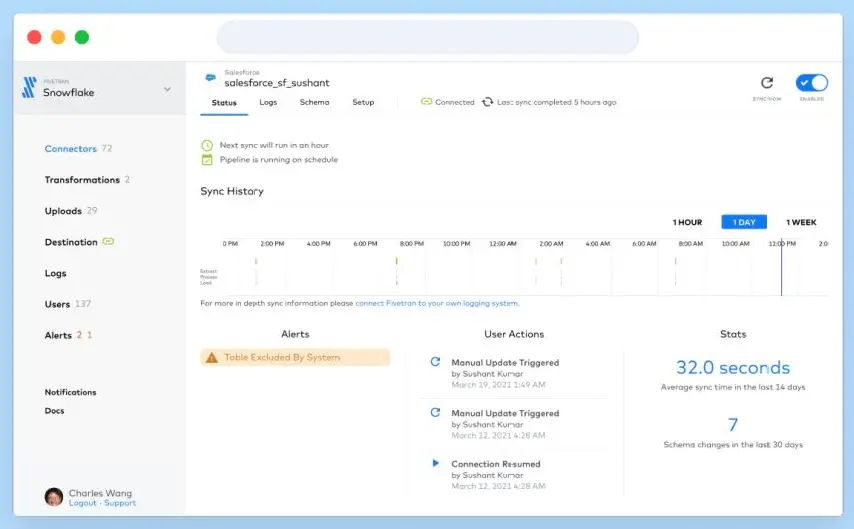

1. Fivetran

Fivetran is a managed data movement platform that simplifies and automates the process of ingesting data from hundreds of sources into destinations like data warehouses and lakes. Fivetran eliminates the need for manual ETL development by offering prebuilt connectors, managed pipeline infrastructure, and automated schema handling.

Key features include:

- 700+ prebuilt connectors: Supports a wide range of data sources including SaaS apps, databases, ERPs, and cloud storage platforms.

- Fully managed pipelines: Automates schema mapping, error handling, and maintenance, reducing the need for manual intervention.

- Data lake integration: Offers a managed service to land standardized, governed data directly into lakes using query-ready open table formats.

- Custom connector framework: Enables organizations to build and deploy their own connectors when unique integration needs arise.

Enterprise-grade security: Certified for SOC 1 & 2, ISO 27001, GDPR, HIPAA, PCI DSS, and HITRUST compliance.

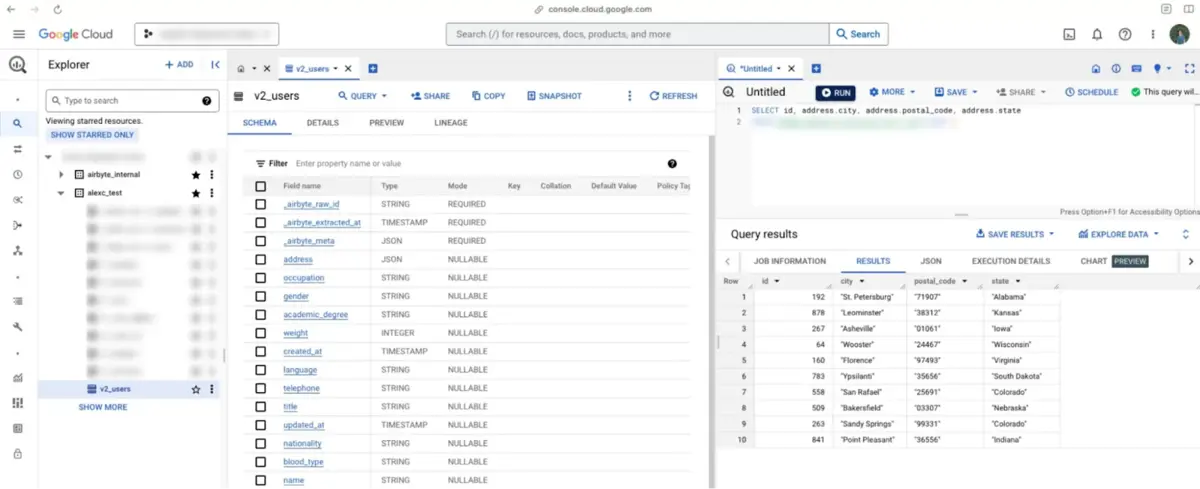

2. Airbyte

.webp)

Airbyte is an open-source ELT platform to simplify and accelerate the movement of data from any source to any destination. With over 600 prebuilt connectors and the ability to create custom ones using a no-code builder or code development kit, Airbyte offers a fast and flexible way to build data pipelines.

Key features include:

- 600+ prebuilt connectors: Access the largest open-source connector marketplace to extract data from SaaS apps, databases, APIs, and more.

- No-code and code-based connector development: Use the no-code builder or developer tools like CDK and PyAirbyte to build new connectors quickly.

- Self-hosted and customizable: Deploy on your own infrastructure for full control over security, compliance, and performance.

- Integration with orchestration tools: Seamlessly works with Airflow, Dagster, and Prefect to fit into existing pipeline orchestration stacks.

Infrastructure-as-code support: Manage and automate configuration through a robust API or Terraform provider using YAML files.

3. Kafka Connect

Kafka Connect is an open-source component of Apache Kafka for streaming data between Kafka and external systems. It defines connectors that ingest entire databases or collect metrics into Kafka topics with low latency, and can export topics to indexes or batch systems. Deployments run standalone for simple jobs or in distributed mode for scalability and fault tolerance.

Key features include:

- Source and sink connectors: Stream data from databases, apps, and files into Kafka topics, or deliver Kafka data to indexes and batch systems.

- Standalone and distributed deployment: Run on a single node for development, or across workers with automatic scaling, coordination, rebalancing, and fault tolerance.

- Tasks and workers model: Connectors coordinate parallel tasks, rebalance work across workers, and store task configuration and status in Kafka-managed internal topics.

- Converters and formats: Pluggable converters translate between bytes and structured data, supporting Avro, Protobuf, JSON Schema, raw JSON, strings, and byte arrays.

- Single message transforms: Apply chained, lightweight per-record modifications and routing within connectors; use external stream processing for complex, multi-record operations.

Dead letter queues: Configure sink connectors to route invalid records to a DLQ topic, with optional context headers for diagnostics in secure environments.

.webp)

4. Prefect

Prefect is an open-source workflow orchestration tool that helps data teams build, run, and monitor reliable data pipelines using python. Prefect turns fragile ETL processes into version-controlled workflows with features like retries, scheduling, logging, and state tracking. It integrates natively with tools like dbt, data warehouses, and BI platforms.

Key features include:

- Python-native orchestration: Define workflows using python code with full access to version control, libraries, and custom logic.

- Task-level control: Supports retries, dependencies, mapping, and conditional logic for building modular, fault-tolerant pipelines.

- dbt integration: Run dbt transformations via native support for both dbt Cloud and CLI-based workflows.

- Self-service deployment: Enables quick development and deployment of data pipelines with minimal operational overhead.

Data quality and SLAs: Automates alerts, failure notifications, and SLA tracking to ensure data reliability and timely delivery.

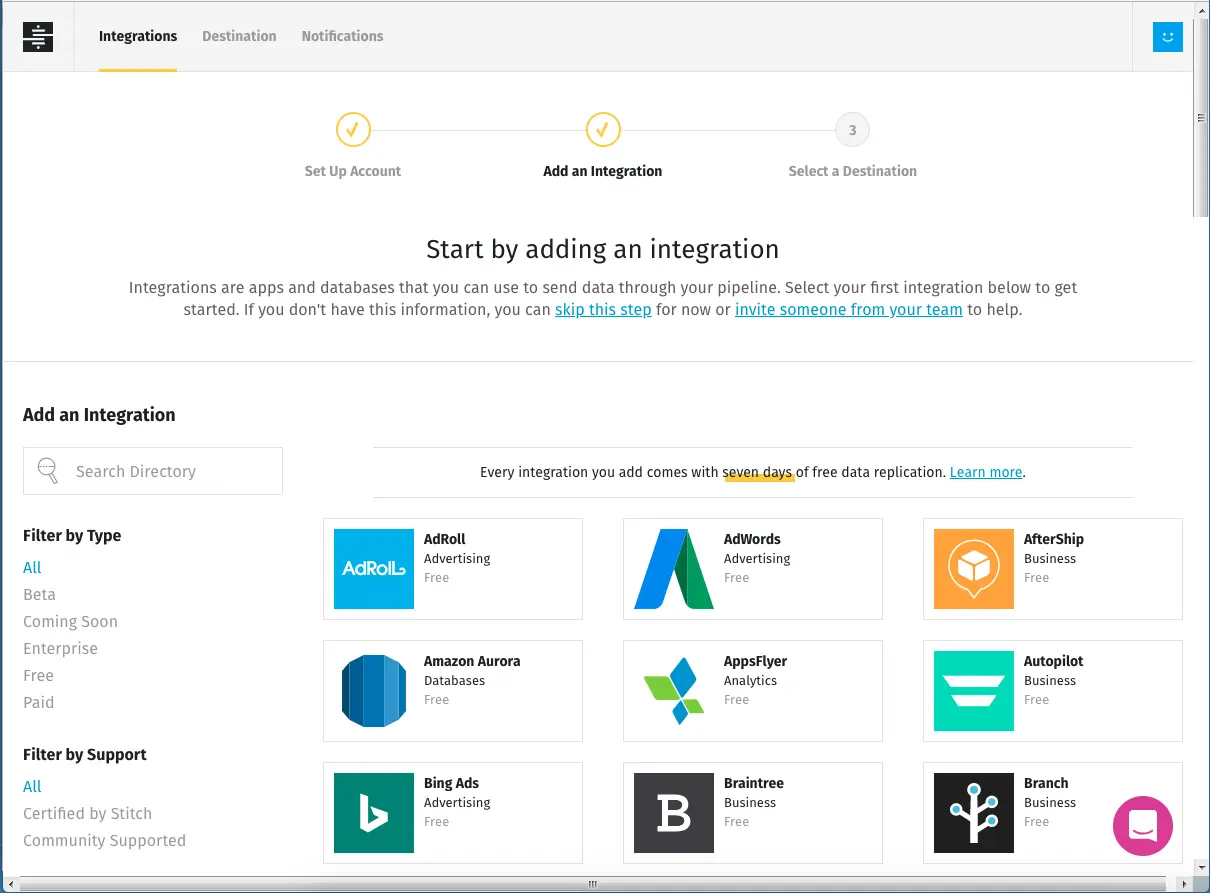

5. Stitch

Stitch is a cloud-first ELT platform that helps teams quickly and securely move data from over 130 sources into cloud data warehouses, lakes, or lakehouses. Now part of Qlik, Stitch’s capabilities are being integrated into the Qlik Talend Cloud, combining its ease of use and reliability with a broader set of enterprise features.

Key features include:

- 130+ data source connectors: Supports popular platforms like Salesforce, Google Analytics, HubSpot, Shopify, and TikTok for fast data extraction.

- No-code setup: Configure pipelines once through a user-friendly interface, then monitor and control without writing custom code.

- Automated ELT pipelines: Handles data extraction, loading, and optional transformations with minimal manual intervention.

- Secure and compliant: Ensures data integrity and regulatory compliance with secure pipeline infrastructure.

Fast time to value: Enables teams to start syncing data in minutes, reducing setup time and accelerating insight delivery.

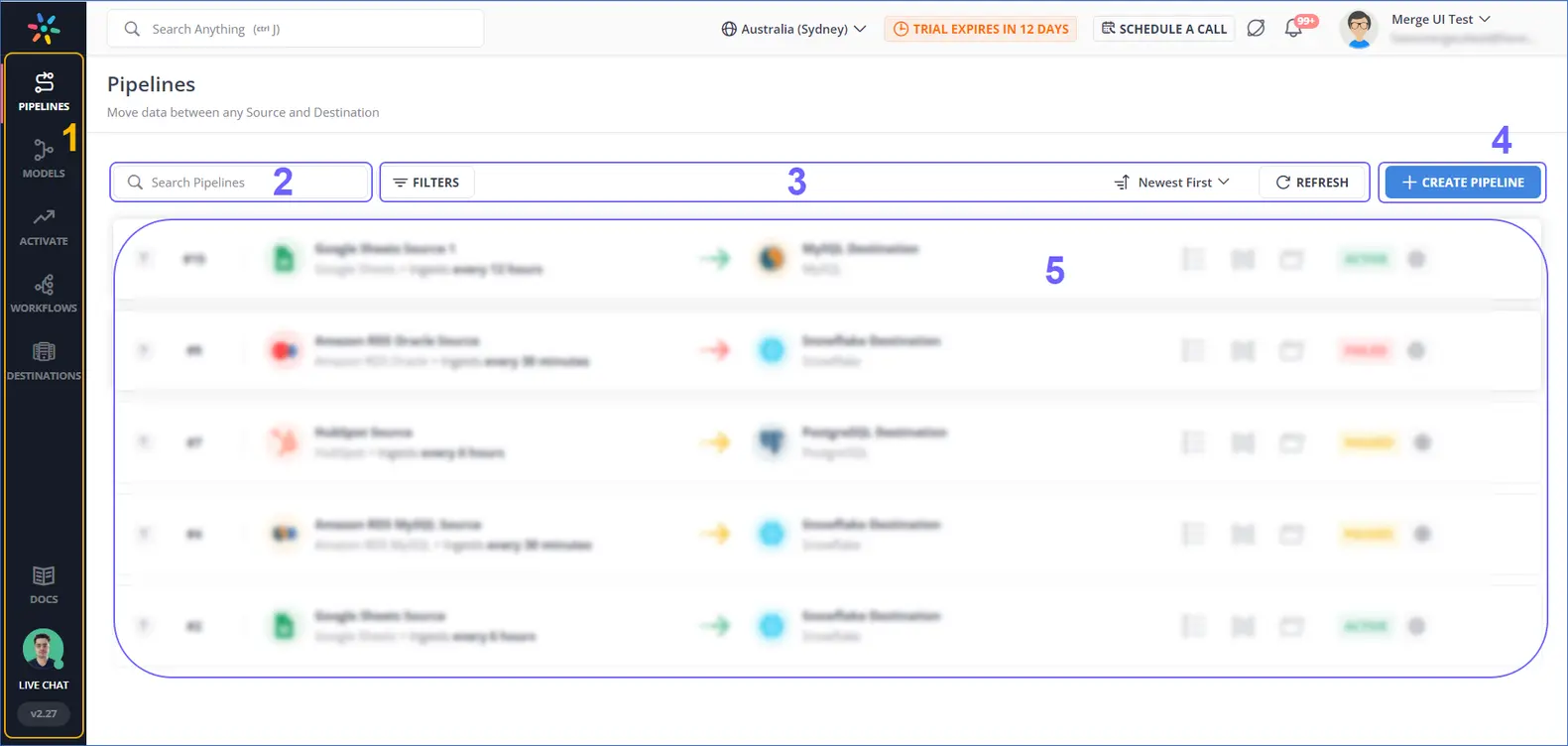

6. Hevo Data

Hevo Data is a fully managed ELT platform designed to move data securely from a range of sources to cloud data warehouses. With built-in automation, high throughput, and intelligent error handling, Hevo enables engineering and analytics teams to deliver analytics-ready data at scale without writing complex code or managing infrastructure.

Key features include:

- Automated ELT pipelines: Orchestrates end-to-end data movement with no-code configuration, ensuring up-to-date, analysis-ready data.

- High-throughput change data capture (CDC): Enables low-latency replication from operational databases without impacting source performance.

- Schema drift handling: Detects and adapts to schema changes automatically, adding new columns or tables without breaking the pipeline.

- Custom connector builder: Allows integration with internal systems and third-party tools using REST APIs or webhooks via an intuitive interface.

Intelligent error recovery: Features automatic retries, checkpointing, and error detection to ensure reliability even during transient failures.

Conclusion

Data pipeline tools are essential for modern data infrastructure, enabling efficient movement, transformation, and delivery of data across increasingly complex environments. By automating core ETL/ELT processes, these tools reduce manual workload, ensure data consistency, and improve pipeline reliability. They support diverse use cases, from real-time analytics to large-scale batch processing, while offering flexibility, scalability, and integration with broader data ecosystems.