What Are Data Engineering Services?

Data engineering services support organizations in building a modern data infrastructure. These services involve designing, building, and maintaining systems for collecting, storing, processing, and analyzing large volumes of data. Data engineers ensure that data is readily available, reliable, and usable for various purposes, including analytics, reporting, and machine learning.

Key aspects of data engineering services include:

- Data strategy and architecture: Developing a roadmap for data management, including identifying data sources, platforms, and key performance indicators (KPIs).

- Data pipeline development: Building and maintaining pipelines for the extraction, transformation, and loading (ETL) of data, as well as for real-time data processing (streaming).

- Data storage and warehousing: Designing and implementing data lakes, data warehouses, and delta tables for efficient storage and retrieval of both structured and unstructured data.

- Data governance and management: Ensuring data quality, security, and compliance through the implementation of data governance frameworks and policies.

- DataOps and MLOps: Implementing automated processes for data integration, quality assurance, and deployment, as well as enabling machine learning operations.

- Cloud data engineering: Utilizing cloud platforms like AWS and Azure for data storage, processing, and analytics.

Modern data engineering services may include building data lakes, warehouses, real-time pipelines, ETL (Extract, Transform, Load) processes, and supporting the implementation of data security and governance policies. Whether delivered through consulting firms, managed solutions, or specialized platforms, these services help organizations of all sizes turn raw data into actionable insights.

Benefits of Using Data Engineering Services

Leveraging data engineering services offers organizations the foundation they need to manage data effectively and derive meaningful insights. By outsourcing or partnering for these capabilities, organizations can focus on outcomes rather than the complexities of infrastructure and pipeline management:

- Faster time to insights: Well-structured data pipelines and storage systems reduce the time needed to prepare and access data, enabling quicker decision-making.

- Scalability and flexibility: Data engineering services are designed to handle growing data volumes and evolving business needs without requiring a complete system overhaul.

- Improved data quality and consistency: Automated validation, transformation, and monitoring processes help ensure data is clean, accurate, and consistent across systems.

- Operational efficiency: Automation and optimized workflows reduce manual effort, minimize errors, and improve the overall efficiency of data operations.

- Enhanced data security and compliance: Services often include built-in mechanisms for access control, encryption, and auditability, supporting regulatory compliance and data protection.

- Access to specialized expertise: Teams benefit from the experience of data engineering professionals who stay up to date with evolving technologies, architectures, and best practices.

- Cost optimization: By using cloud-native and scalable technologies, data engineering services can help reduce infrastructure costs and eliminate redundant systems.

Key Aspects of Data Engineering Services Providers

Data Strategy and Architecture

A comprehensive data strategy defines how an organization will collect, manage, and use its data assets, aligning technical approaches with business objectives. Data engineering services providers assist in developing this strategy, identifying data needs, mapping out data flows, and recommending best-fit technologies. Architecture design involves structuring data systems to support performance, scalability, and reliability, considering factors such as data formats, integration patterns, cloud vs. on-premise deployment, and compliance requirements.

Effective data architecture also addresses future needs, making sure systems are extensible and easily adaptable as business priorities shift. Providers often conduct audits of existing infrastructure, propose modernization plans, and establish reference architectures that guide the implementation of platforms and pipelines. This ensures long-term sustainability and a solid foundation for analytics, AI, or other data-driven applications.

Data Pipeline Development

Data pipelines automate the movement and transformation of raw information into structured, usable data sets ready for analysis. Providers design and implement both batch and real-time pipelines that extract data from diverse sources, apply cleaning and transformation steps, and load results into target systems, like warehouses or lakes. This development process focuses on minimizing latency, ensuring accuracy, and making pipelines resilient to changes in source systems or data formats.

Building robust pipelines includes error monitoring, automated testing, and validation steps to reduce downstream data quality issues. Providers also employ workflow orchestration tools that schedule, monitor, and manage pipeline executions, improving operational efficiency. The result is a reliable flow of high-quality data that underpins analytics, machine learning models, and enterprise reporting.

Data Storage and Warehousing

The choice of data storage solutions, whether data lakes, data warehouses, or hybrid models, depends on the business's analytical needs, data variety, and performance requirements. Providers help organizations select and implement the best-fit storage options, designing schemas to optimize querying and ensure cost-effective scalability. Data warehouses support structured, high-speed analytics, while data lakes accommodate large volumes of raw, unstructured, or semi-structured information.

Providers also handle data migration, integrating legacy databases with modern platforms and ensuring minimal business disruption. Optimization and lifecycle management are crucial, as data storage costs and system performance can be major concerns. By organizing data logically and applying governance policies, providers make stored data more accessible and secure for business and technical users.

Data Governance and Management

Data governance encompasses the policies, standards, processes, and technologies that ensure data consistency, accuracy, privacy, and regulatory compliance. Providers help define governance frameworks, establish data catalogs, implement security measures, and monitor access and usage. This enables organizations to maintain trusted data assets, mitigate privacy risks, and meet requirements like GDPR, HIPAA, or CCPA.

Data management extends beyond policy creation to include metadata management, master data management, and data quality monitoring. Providers often deploy tools for automated lineage tracking, anomaly detection, and reporting. These practices prevent the proliferation of “shadow data,” support audit trails, and foster a culture of responsible, evidence-based decision-making throughout the organization.

DataOps and MLOps

DataOps applies DevOps principles to data pipeline development and management, aiming for rapid, reliable delivery of data products through automation, continuous integration, and continuous deployment. Providers implement DataOps practices to improve collaboration between engineering, analytics, and business teams, reducing manual bottlenecks and increasing deployment speed and reliability.

MLOps focuses on automating the deployment, monitoring, and management of machine learning models in production. Providers help build workflows for version control, model testing, retraining, and rollback, ensuring that machine learning delivers consistent value without unexpected failures. By institutionalizing these practices, organizations can scale AI initiatives with confidence and agility.

Cloud Data Engineering

Cloud data engineering services focus on designing, implementing, and managing data solutions in public, private, or hybrid cloud environments. Providers leverage cloud-native technologies such as serverless compute, containerized workflows, and managed databases to deliver highly scalable and cost-efficient data architectures. This allows organizations to respond quickly to changing data volumes and analytical demands.

Cloud services also enable data sharing, multi-region availability, and advanced analytics features out-of-the-box. Providers manage cloud resource optimization, security configuration, migration from on-premises systems, and ongoing operational monitoring. As a result, organizations benefit from faster innovation cycles, simplified infrastructure management, and reduced total cost of ownership.

Notable Data Engineering Services

1. Dagster Cloud

.avif)

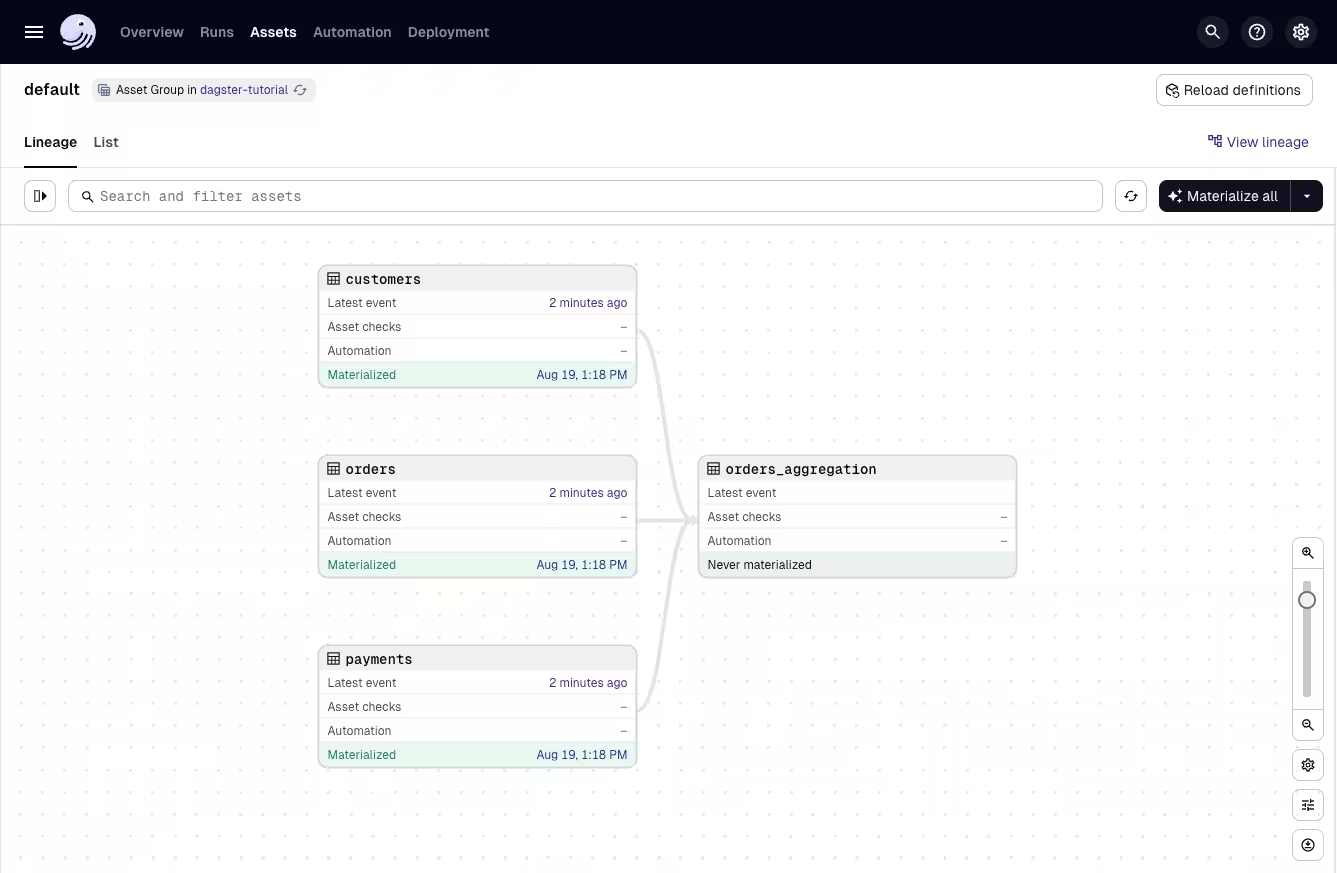

Dagster is an open-source data orchestrator designed for developing, deploying, and observing data pipelines with a strong focus on software engineering best practices. Built to improve reliability, maintainability, and data quality in modern data platforms, Dagster introduces software-defined assets that let teams model their data ecosystem around the datasets they produce.

Key features include:

- Software-defined assets: Model data as first-class assets with explicit dependencies, enabling clear lineage, consistent updates, reproducible outputs, and a unified view of the data lifecycle.

- Typing and validation: Enforce schemas, runtime checks, and strong typing to ensure predictable pipelines, early error detection, and consistent data quality across ingestion and transformation steps.

- Developer experience: Provide local-first, testable, Pythonic workflows that support fast iteration and clear structure, helping teams build and maintain pipelines efficiently.

- UI and observability: Offer a modern interface showing lineage, runs, partitions, metadata, and asset health, giving teams deep visibility into pipeline operations and data states.

- Flexible orchestration: Support schedules, sensors, partitions, and backfills across multiple execution environments, enabling reliable orchestration of ingestion, transformation, and analytics pipelines.

Integrations: Connect to warehouses, lakes, analytics tools, and ML frameworks, integrating smoothly with dbt, Snowflake, BigQuery, Spark, and other data platform components.

2. Databricks

Databricks offers a suite of data engineering services through its Lakeflow platform, intended to simplify the development of reliable, scalable, and AI-ready data pipelines. By combining ingestion, transformation, orchestration, and governance, Databricks enables organizations to build batch and real-time workflows with reduced operational complexity.

Key features include:

- Unified tool stack: Combines data ingestion, transformation, governance, and orchestration into a single platform, reducing integration overhead and tool sprawl.

- Easier pipeline creation: Supports no-code connectors, CDC (change data capture), and AI-assisted code generation.

- Optimized processing engine: Automatically tunes compute resources for efficient batch and real-time processing, improving performance and cost-efficiency.

- Lakeflow jobs: Supports high-reliability orchestration with observability and support for ETL, analytics, and AI tasks.

Lakeflow Connect: Provides native integration and prebuilt connectors for quick access to analytics and AI-ready data.

3. Snowflake

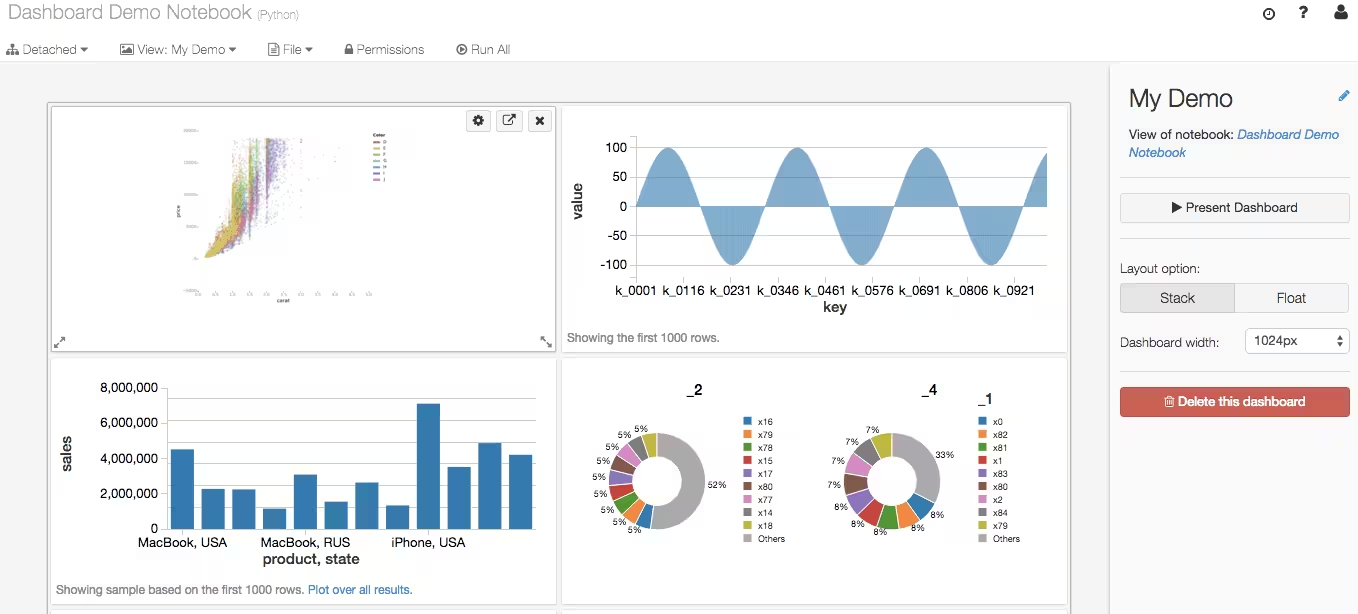

Snowflake provides a data engineering solution that removes the complexity of managing infrastructure, enabling teams to focus on building scalable, AI-ready pipelines. Its platform supports the full lifecycle of data engineering, from ingestion and transformation to delivery, while offering native features and interoperability with open-source tools.

Key features include:

- ZeroOps data engineering: Automates pipeline development and operations, removing the need for infrastructure tuning while meeting strict data SLAs

- Open lakehouse architecture: Supports integration with or creation of open lakehouse environments using open-source standards like Apache Iceberg™, reducing data silos and improving accessibility

- AI-ready data pipelines: Handles unstructured and semi-structured data for real-time AI applications, enabling seamless development of generative AI and intelligent agents

- Openflow for ingestion: Offers a managed, multi-modal ingestion service that supports structured, unstructured, batch, and streaming data, ensuring interoperability across any architecture

Developer productivity tools: Includes native dev environments, Git integration, observability dashboards, and Snowpark to support multi-language development and DevOps practices.

4. Coalesce

.avif)

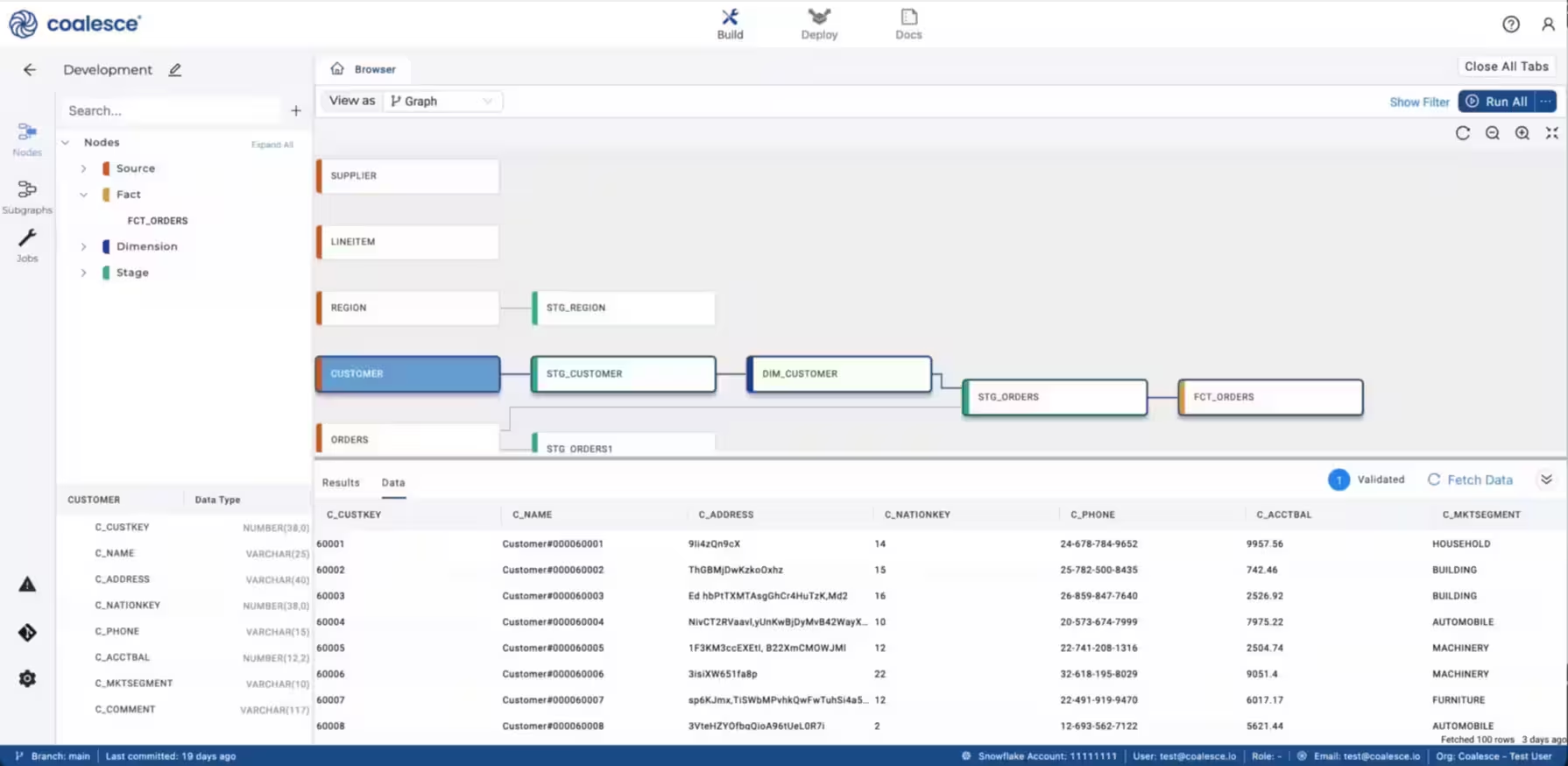

Coalesce is a data engineering platform to accelerate data transformation, improve pipeline reliability, and simplify data discovery within a unified environment. It enables teams to build scalable ELT workflows with a balance of automation and control, while also embedding cataloging and governance throughout the development process.

Key features include:

- AI-powered data transformation: Speeds up the transformation phase of ELT by allowing users to code when needed and automate the rest.

- Standardized, scalable pipelines: Uses structured SQL and a consistent framework to build maintainable pipelines, avoiding unmanageable code and reducing upkeep.

- Column-level lineage and governance: Tracks data changes at a granular level, improving auditability and control while supporting DataOps practices.

- Intelligent Data catalog: Integrates documentation, governance, and AI-driven search into daily workflows, enabling teams to discover and understand data more easily.

Repeatable, trusted development: Provides quality-tested templates and pre-built Packages from the Coalesce Marketplace to reduce manual work and development time.

5. Fivetran

.avif)

Fivetran is a fully managed data movement platform that automates the extraction and loading of data from almost any source to any destination. With a focus on reliability, security, and performance, it eliminates the complexity of building and maintaining pipelines, enabling teams to centralize data quickly and consistently.

Key features include:

- Automated ELT pipelines: Handles extraction and loading from hundreds of sources to destinations with minimal configuration and ongoing maintenance.

- Connector library: Offers a large catalog of pre-built, managed connectors for SaaS, databases, SAP systems, and files.

- Custom connector support: Provides SDKs, partner-built solutions, and a request program to support niche or proprietary data sources

- Streaming and reverse ETL: Supports real-time data flows and syncs transformed data back into operational systems via its reverse ETL capability (powered by Census).

Hybrid deployment: Enables secure deployments within the customer’s own environment while maintaining Fivetran’s automation benefits.

Related content: Read our guide to data engineering tools

Conclusion

Data engineering services provide organizations with the expertise and infrastructure needed to turn raw data into reliable, analytics-ready assets. By combining strategy, architecture, and operational execution, these services simplify complex data environments and enable businesses to extract value from data more effectively. They ensure scalability, governance, and automation across the stack, creating a foundation for real-time analytics, AI adoption, and long-term digital transformation.