What Are Data Engineering Solutions?

Data engineering solutions make it possible to build and manage the infrastructure that supports data-driven applications and analytics. These solutions involve designing, building, and maintaining systems for collecting, processing, storing, and accessing data. They are crucial for enabling businesses to leverage their data for insights, decision-making, and machine learning initiatives.

Benefits of data engineering solutions include:

- Improved data accessibility: Making data more readily available for various users and applications.

- Enhanced data quality: Ensuring data accuracy, consistency, and reliability.

- Increased operational efficiency: Automating data workflows and reducing manual effort.

- Faster insights and decision-making: Enabling timely access to data and insights for informed decision-making.

- Scalability and flexibility: Adapting to growing data volumes and changing business needs.

- Competitive advantage: Leveraging data for innovation, improved products and services, and better customer experiences.

By integrating automation, scalability, and governance, data engineering solutions provide the backbone for building robust data pipelines. They offer standardized ways to ingest, process, and catalog data, reducing the complexity of managing disparate systems. These solutions abstract much of the operational overhead involved in maintaining data workflows.

Core Capabilities of Modern Data Engineering Solutions

Ingestion Patterns

Modern data engineering solutions support a range of ingestion patterns to accommodate diverse requirements. They enable both batch and streaming data ingestion, capturing data from transactional databases, APIs, files, and event streams. This flexibility makes it possible to process real-time events from sources like IoT devices while also handling high-volume, periodic data loads needed for traditional analytics workloads.

These solutions commonly include pre-built connectors and adapters, which simplify onboarding new data sources. Ingestion processes are designed to handle schema evolution, error handling, and backpressure scenarios, ensuring resilient pipelines.

Storage Architectures

Effective data engineering relies on choosing and managing the right storage architectures. Modern solutions offer support for diverse storage paradigms, including data lakes, data warehouses, and lakehouse designs. These architectures enable organizations to separate compute and storage for cost efficiency, enable the handling of both structured and unstructured data, and ensure rapid data availability.

Storage solutions also address concerns around data durability, partitioning, and scalability. Features like versioning and snapshotting increase reliability, while automated tiering optimizes costs. Data cataloging and indexing improve discoverability, and native integrations with processing engines or BI tools further reduce friction across the analytics stack.

Transformation Paradigms

Transformation is at the core of data engineering, where raw data is shaped into analytics-ready formats. Modern solutions provide declarative frameworks for both batch and streaming transformations, supporting SQL-based, code-driven, or visual transformation paradigms. This flexibility allows teams to leverage familiar tools and programming models, reducing ramp-up time and promoting best practices in data modeling.

Advanced solutions also enable modular, reusable transformation steps, enabling extensive testing and lineage tracking. This modularity allows teams to orchestrate complex data flows efficiently and maintain clarity around how data is altered. Integration of quality checks and schema validation at this stage ensures downstream reliability for consumers.

Orchestration and Scheduling

Orchestration tools are integral to data engineering solutions, managing dependencies and scheduling data workflows across distributed environments. They coordinate jobs such as data ingestion, transformation, and data delivery, ensuring steps occur in the correct sequence and handling failure recovery scenarios. This orchestration is essential for reproducible, reliable, and efficient pipeline executions.

Scheduling capabilities enable automated, recurring pipeline runs aligned with business needs, such as hourly data refreshes or end-of-day reporting. Modern solutions include monitoring dashboards, alerting mechanisms, and logs, empowering engineers to diagnose failures, optimize performance, and manage SLAs.

Metadata, Catalog, and Governance Layers Across the Stack

Metadata management and governance are critical for operating data platforms at scale. Modern data engineering solutions embed cataloging and governance layers, capturing details like dataset lineage, ownership, schema evolution, and data quality metrics. Rich metadata improves searchability, discoverability, and transparency, allowing users to quickly locate and understand available data assets.

Governance frameworks enforce data access controls, auditing, and compliance with regulations such as GDPR and HIPAA. They enable stewardship initiatives by providing automated classification, documentation, and change management features. These layers foster trust in the data, supporting broader adoption and collaboration across business and technical teams.

Related content: Read our guide to data engineering tools

Notable Solutions Supporting Data Engineering

1. Dagster

.avif)

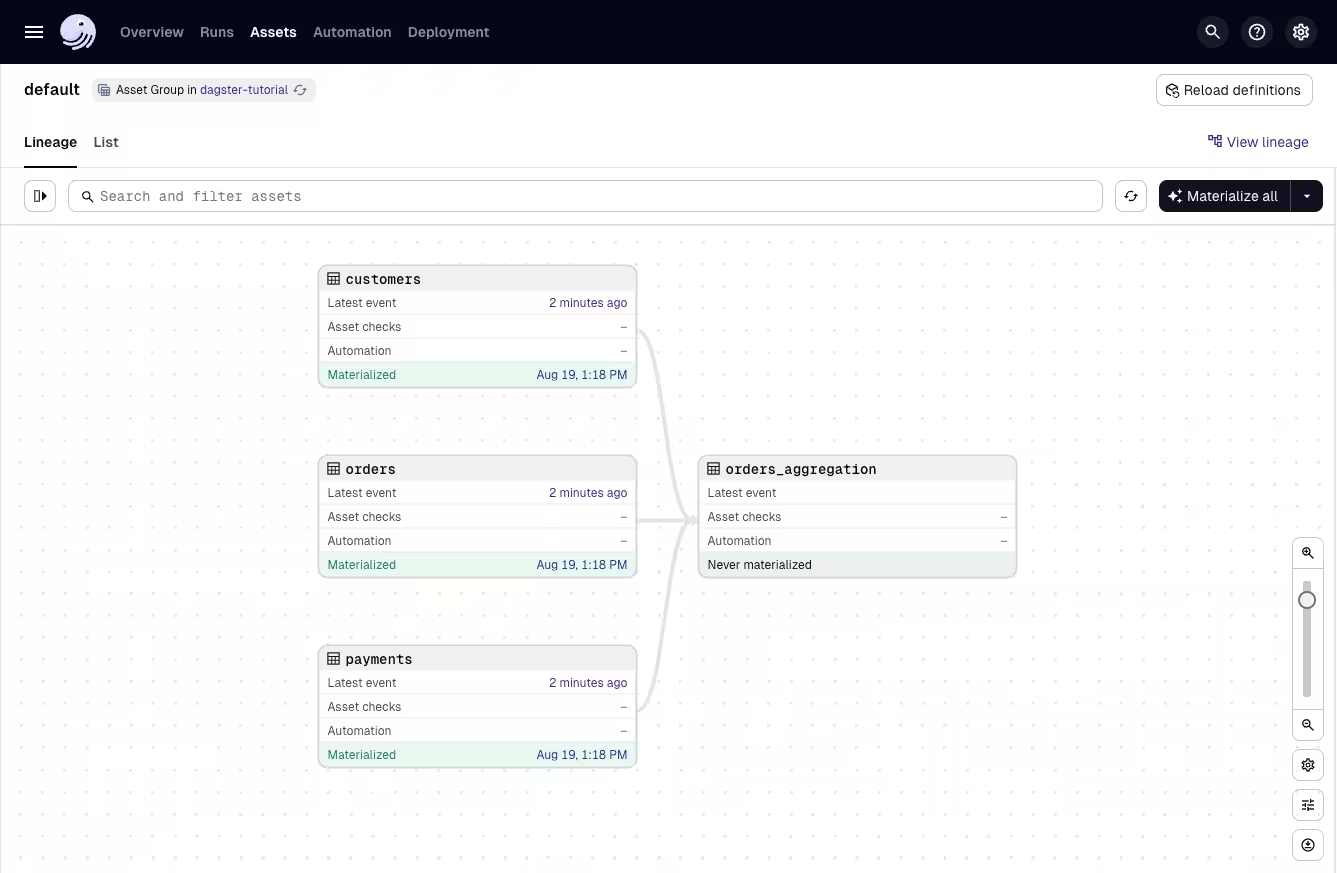

Dagster is an open-source data orchestrator purpose-built for modern data engineering teams that need reliable, maintainable, and observable pipelines. Designed with software engineering principles at its core, Dagster helps organizations build resilient data platforms by modeling pipelines around the data they produce through software-defined assets. This asset-first approach improves clarity, quality, and long-term scalability across complex data systems.

Key features include:

- Software-defined assets: Represent data as assets with explicit upstream and downstream dependencies to enable accurate lineage, reproducible transformations, and clear visibility into how data flows across the platform.

- Strong typing and validation: Apply schemas, runtime checks, and typed interfaces to enforce data quality, prevent pipeline failures, and maintain consistent contracts across ingestion, processing, and analytics layers.

- Developer-focused workflow: Local-first development, testability, and Python-native APIs support fast iteration and robust engineering practices, making pipelines easier to build, debug, and evolve.

- Comprehensive observability: A modern UI provides detailed insights into lineage graphs, run histories, partitions, metadata, and asset health, enabling proactive monitoring and quicker troubleshooting.

- Flexible orchestration model: Support schedules, sensors, event-driven triggers, partitions, and large-scale backfills across multiple execution environments, including Kubernetes, serverless compute, and local development.

Rich ecosystem integrations: Seamlessly connects with warehouses, lakes, ETL and ELT tools, analytics frameworks, and ML platforms. Includes strong integrations with dbt, Snowflake, BigQuery, Spark, DuckDB, and similar tools used across enterprise data stacks.

2. Databricks

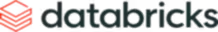

Databricks supports data engineering by providing a unified platform for building, deploying, and managing data pipelines. Its Lakeflow solution combines ingestion, transformation, orchestration, and governance into a single toolchain, helping teams simplify development and reduce operational overhead.

Key features include:

- Unified tool stack: A single platform for ingestion, transformation, orchestration, and governance, reducing integration complexity and tool sprawl.

- Declarative pipelines: Simplifies ETL with built-in support for change data capture (CDC), data quality enforcement, and streaming workflows.

- AI-assisted development: Enables faster pipeline creation through no-code interfaces and AI-assisted code generation.

- Optimized processing engine: Automatically allocates compute resources for efficient execution across real-time and batch workloads.

High observability: Offers monitoring and logging capabilities to manage thousands of daily jobs with visibility and reliability.

3. Alation

.avif)

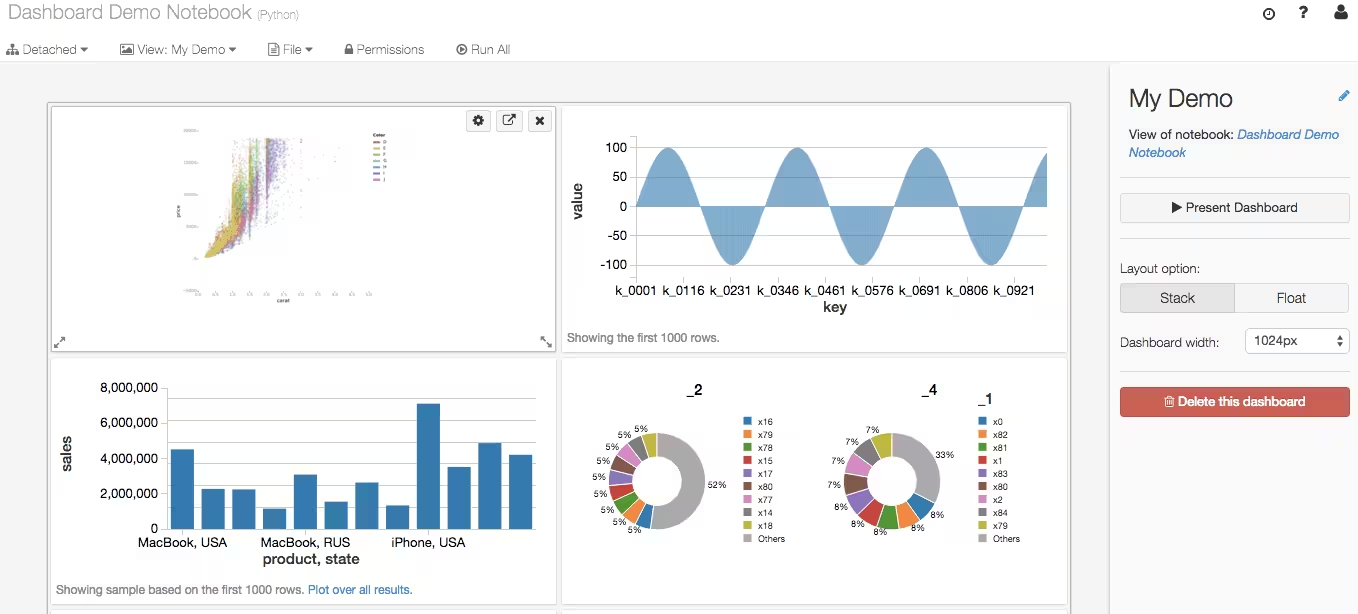

Alation is a data intelligence platform that helps organizations make better use of metadata. It enables teams to discover, govern, and use data more effectively across the enterprise. Alation combines data cataloging, governance, and search capabilities with AI-powered agents that improve metadata accuracy and usability.

Key features include:

- Metadata-aware AI agents: Boost data discovery and question answering accuracy by leveraging context from metadata.

- Data cataloging: Centralizes metadata in a searchable catalog, helping users find and understand trusted data assets.

- Integrated data governance: Embeds compliance and policy guidance directly into workflows, balancing governance with user productivity.

- Self-service analytics: Empowers users to explore data independently while maintaining control and consistency across teams.

Trusted data products: Enables organizations to create and maintain a marketplace of vetted, reliable data assets.

4. Matillion

.avif)

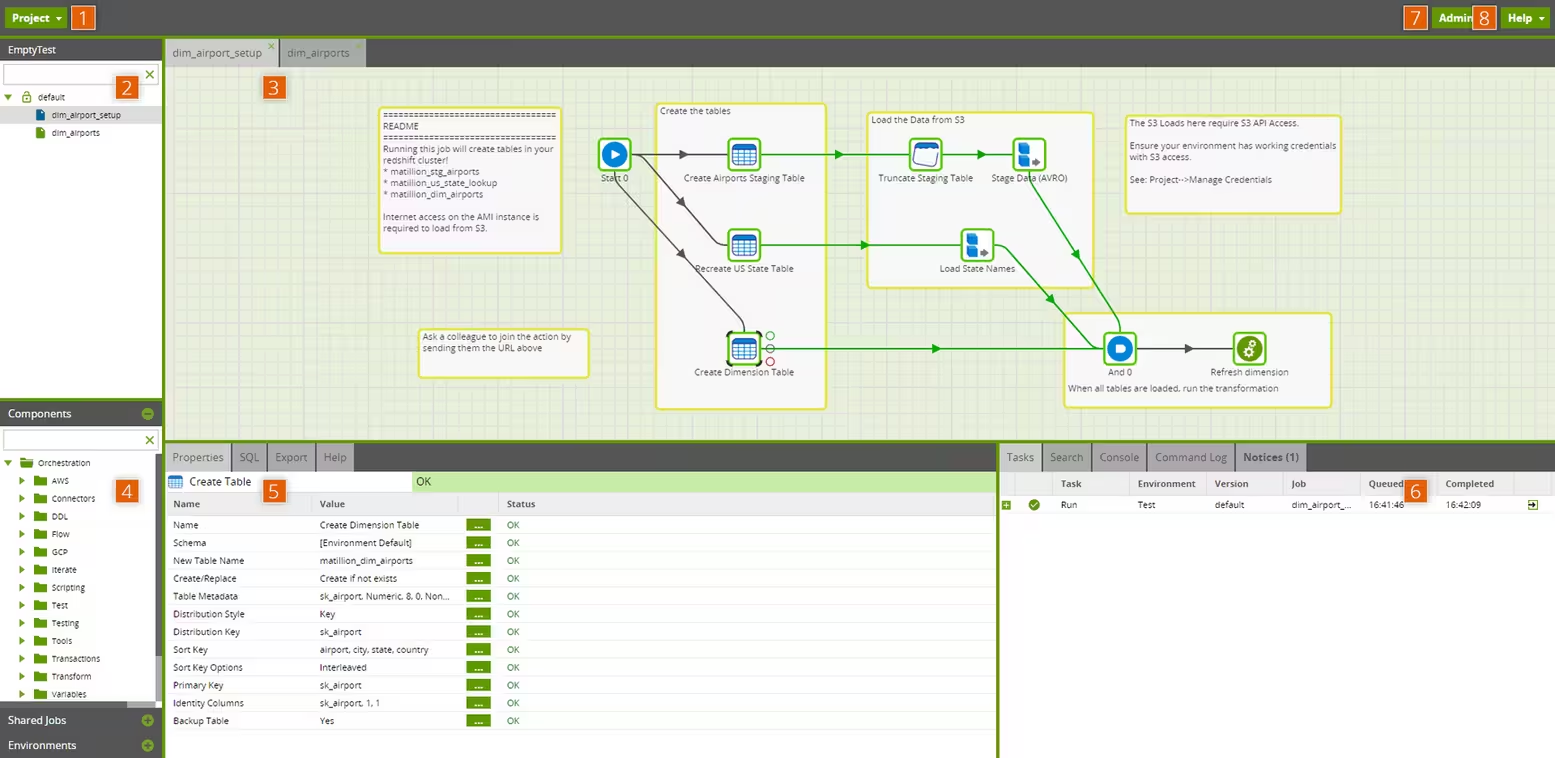

Matillion is a cloud-native data integration platform to accelerate the creation and management of data pipelines across the data team. It supports low-code and code-first development, enabling technical and non-technical users to collaborate effectively.

Key features include:

- Maia AI agents: Use natural language to automate pipeline development and assist with complex data engineering tasks, scaling team productivity without adding headcount.

- Low-code interface: Build sophisticated pipelines visually with drag-and-drop components, reducing the time to deployment for non-developers.

- Code-first flexibility: Supports SQL, Python, and dbt for advanced users, with orchestration and Git integration to enable data ops practices.

- Universal connectivity: Ingest structured and unstructured data from virtually any source using pre-built or custom connectors.

Cloud-native architecture: Generates native SQL and runs workloads on platforms like Snowflake, AWS, and Databricks for better performance and scalability.

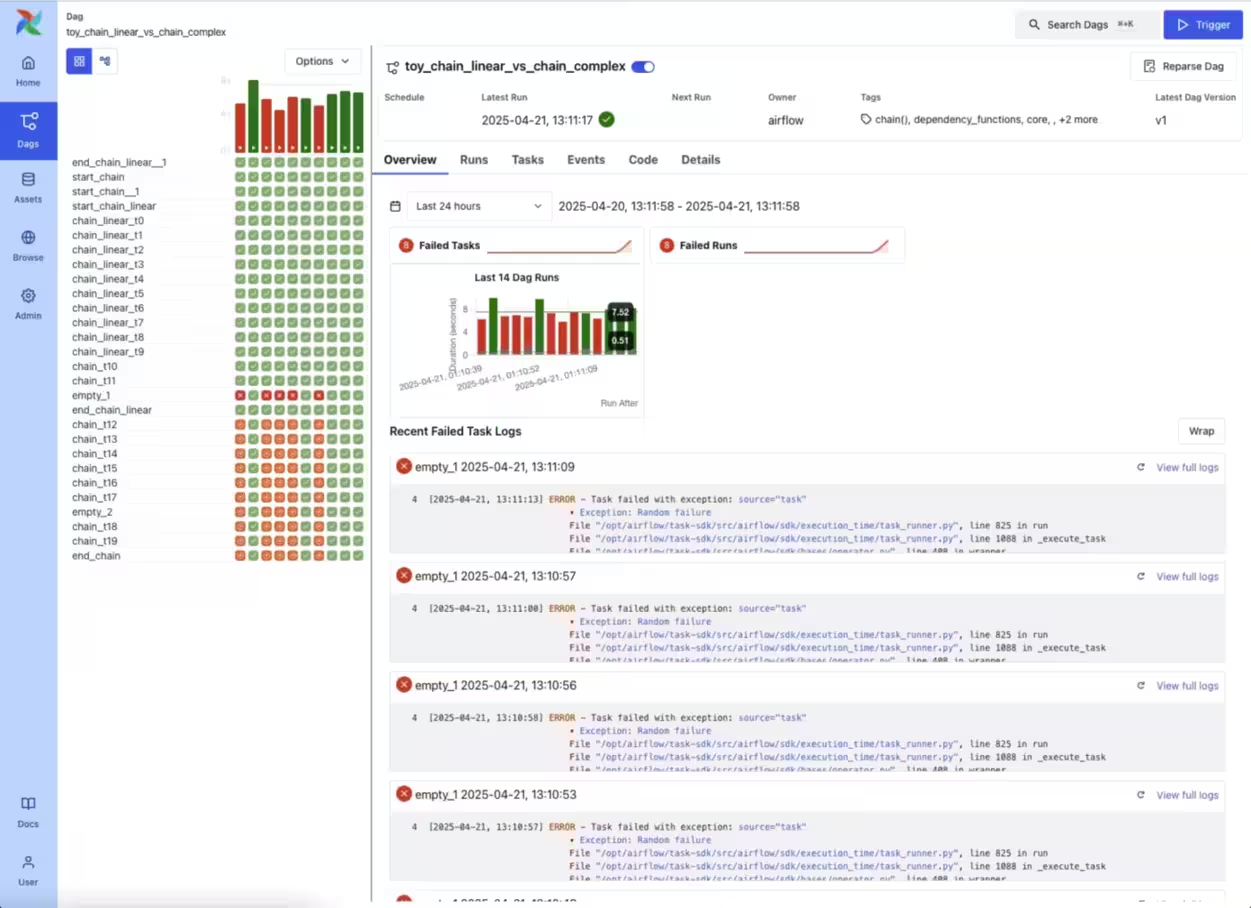

5. Apache Airflow

.avif)

Apache Airflow is an open-source workflow orchestration platform used to programmatically author, schedule, and monitor data engineering pipelines. Initially developed by Airbnb to manage complex internal workflows, Airflow has since become an Apache Software Foundation project. It uses Python to define workflows as code, allowing developers to build reusable and dynamic data pipelines.

Key features include:

- Python-based workflow definition: All workflows are written in Python, enabling the use of standard programming constructs, libraries, and modular components.

- DAG-centric orchestration: Tasks and dependencies are managed through DAGs, providing a clear and auditable structure for complex pipelines.

- Flexible scheduling: Supports both time-based scheduling (e.g. hourly, daily) and event-driven execution, allowing for reactive data workflows.

- Built-in UI and monitoring: Offers a web interface to visualize DAGs, monitor task progress, and troubleshoot execution issues.

Extensibility: Supports plugins and custom operators to integrate with external systems such as databases, cloud platforms, and APIs.

Conclusion

Data engineering solutions form the foundation of modern data-driven organizations. They provide the capabilities needed to ingest, store, process, and govern data reliably at scale. By standardizing workflows and enabling automation, they reduce complexity and operational overhead while improving data quality and accessibility. The result is a more agile data infrastructure that supports real-time analytics, advanced modeling, and machine learning, ultimately allowing businesses to make faster, more confident decisions.