What Are SQL Models in dbt?

SQL models in dbt (data build tool) are SQL files that define data transformations within a data warehouse. Each model represents a transformation step, often a SELECT statement, that is materialized as a new view or table in the data warehouse. dbt handles dependency management and the orchestration of these models, allowing data teams to focus on writing SQL queries without handling pipeline execution code.

dbt models are modular and reusable. Users write familiar SQL, enhanced with dbt features like referencing, templating, and configuration blocks. These models are version-controlled, frequently tested, and documented, ensuring high data quality and transparency. As the heart of dbt projects, SQL models help teams standardize, govern, and optimize analytics engineering tasks.

Why dbt SQL Models Democratize Data Pipelines

By making SQL models the central unit of work, dbt lowers the barrier for building production-grade data pipelines. Analysts and analytics engineers can contribute transformations without learning orchestration tools or writing procedural ETL code. The same skills used for ad-hoc analysis (writing SQL) apply directly to creating automated, maintainable pipelines. This reduces reliance on specialized data engineering resources and shortens the time from raw data to consumable insights.

Because dbt models are just SQL files with configuration metadata, they fit naturally into modern software practices like git-based version control, pull requests, and code review. Teams can collaborate with the same workflows they already use for application development, with clear history, testing, and deployment controls. This alignment between analytics and engineering practices makes it easier for more people in an organization to safely contribute to data pipelines while maintaining governance and quality.

Key Concepts of dbt SQL Models

Jinja Templating

dbt integrates Jinja, a popular templating engine, directly into its SQL models. This allows users to add logic, loops, variables, and conditionals to their SQL, making queries more dynamic and reusable. You can use Jinja to parameterize queries, generate repetitive SQL code, or implement logic that alters the structure or filters of a SQL statement on the fly.

The use of Jinja in dbt models encourages consistency and reduces errors by centralizing reusable snippets or macros. For example, implementing a column calculation or a filter condition once as a macro makes it accessible across the entire project. Developers benefit from efficient code maintenance because updates to business logic or transformations propagate to all dependent models.

Materializations

Materializations in dbt define how a SQL model's output is stored in the data warehouse. Common materializations include view, table, incremental, and ephemeral. A view does not store physical data but references the underlying query, updating dynamically every time it's queried. A table physically persists the results and is rebuilt on each execution, while incremental models update only changed data, improving performance for large datasets. Ephemeral models are inlined into downstream models as CTEs.

Each model declares its materialization type within its file or project configuration. Materializations give developers control over workflow performance and cost. Choosing the right materialization depends on business requirements such as freshness, speed, and data volume. dbt’s flexible approach means teams can optimize for query responsiveness or warehouse spend as their needs evolve.

Source Declarations and the ref() Function

Defining sources in dbt formalizes external tables as referenced inputs in a project. A source declaration captures metadata about raw data objects available in the warehouse, making downstream dependency management explicit. This allows dbt to validate input tables, enforce freshness, and help document upstream data origins within the dbt documentation site. The ref() function is the primary mechanism for referencing models within dbt.

Rather than hardcoding table names, ref('model_name') dynamically resolves dependencies and abstracts away environment-specific naming conventions. This approach enables dbt to build a directed acyclic graph (DAG) that orders model builds intelligently and provides traceability for all data lineage.

Data Tests

In dbt, data tests are SQL queries designed to validate data quality. There are two main types: singular tests, which are custom queries that return zero rows if the data passes the test, and generic tests, which are reusable checks like uniqueness or not null constraints applied to columns or models. These tests run as part of dbt’s workflow, making data quality checks an integral part of the transformation process.

Recent updates to dbt have also added unit tests. Much like their counterparts in the software engineering world, dbt unit tests allow you to verify the expected outputs of your models given a set of static inputs.

Embedding tests directly in the analytics pipeline ensures errors are detected early. If a test fails, dbt stops execution, allowing teams to fix upstream issues before results are consumed in downstream analyses or dashboards. The automation of these integrity checks helps uphold confidence in data products.

Version Control and Collaboration

dbt is built around the principles of modern software development, emphasizing version control and collaboration through git. Each dbt project is a directory of SQL and YAML files that can be tracked, branched, and merged via a source control system. This ensures all changes are auditable, reversible, and can be peer-reviewed before deployment, improving development quality and accountability.

Collaboration is further supported by standardized project structures and documentation generation. Multiple team members can work on models simultaneously, contributing enhancements or bug fixes without disrupting production code.

Quick Tutorial: Writing Your First SQL Model in dbt

This tutorial will show you how to create your first SQL model in dbt. Instructions are adapted from the dbt documentation.

Step 1: Create a .sql file

Start by creating a .sql file inside the models/ directory of your dbt project. Each SQL file corresponds to one model, and it must contain a single SELECT statement. The model’s name must exactly match the filename, including case sensitivity, and it's a best practice to use underscores instead of dots in names (e.g., complaints_report.sql).

Here's a simple example:

with customer_complaint_summary as (

select

customer_id,

min(complaint_date) as first_complaint_date,

max(complaint_date) as most_recent_complaint_date,

count(id) as number_of_complaints

from jaffle_shop.customer_complaints

group by customer_id

)

select

c.customer_id,

c.first_name,

c.last_name,

ccs.first_complaint_date,

ccs.most_recent_complaint_date,

ccs.number_of_complaints

from jaffle_shop.customers c

left join customer_complaint_summary ccs

on c.customer_id = ccs.customer_idStep 2: Configure and run the model

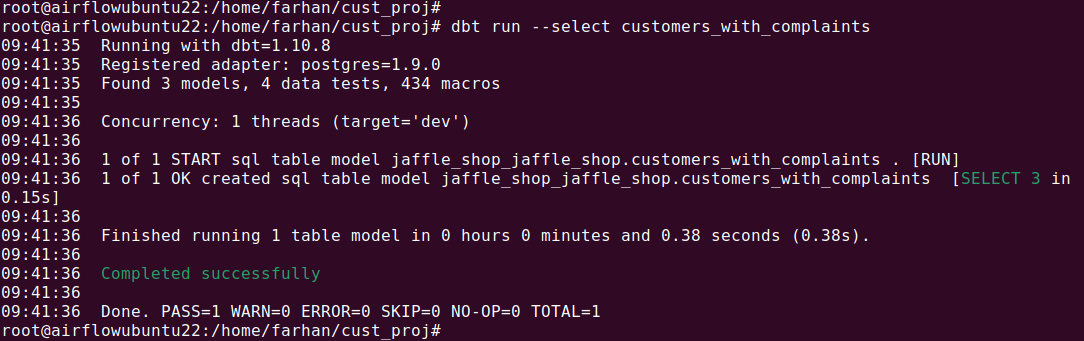

When you run dbt run, dbt will wrap this query in a CREATE VIEW AS or CREATE TABLE AS statement, depending on your model's materialization settings. By default, dbt creates views and places them in the target schema based on your active profile.

You can change how dbt builds the model using a configuration block at the top of the file:

{{ config(

materialized='table',

schema='jaffle_shop'

) }}Configurations can also be set globally in your dbt_project.yml file or scoped to directories within the models/ folder.

Step 3: Define dependencies between models

To build dependencies between models, use the ref() function instead of hardcoding table names. For example:

select

c.customer_id,

c.first_name,

c.last_name,

cc.complaint_date,

cc.complaint_text

from {{ ref('customers') }} as c

left join {{ ref('customer_complaints') }} as cc

on c.customer_id = cc.customer_idNote: It’s important to ensure that customers and customer_complaints exist in the model directory.

This ensures dbt understands model dependencies and can build a directed acyclic graph (DAG) to run models in the correct order. It also supports environment-aware builds, allowing dbt to adjust schema and table names based on your current target environment.

Once your models are defined and configured, execute dbt run to materialize them in your data warehouse. This simple workflow—writing SQL, configuring models, and running dbt—forms the foundation of the analytics engineering workflow in dbt.

Best Practices for dbt SQL Development

Here are some of the ways that developers can improve their use of dbt for SQL-based projects.

1. Maintain Modular and Reusable SQL Code

Structuring dbt projects with modular SQL code makes transformation pipelines easier to build, debug, and evolve. Instead of placing all logic in a single large model, split transformations across multiple models, each focused on a discrete task—such as cleaning raw data, applying business logic, or joining data sources.

For example, a raw sales dataset might first pass through a staging model that standardizes field names and formats. An intermediate model might then calculate key metrics, while a final model joins data with dimensions to produce a reporting-ready dataset. Each of these layers can be tested and maintained independently.

Avoid repeating business rules or filters across models. If the same logic is applied in multiple places, abstract it into macros or upstream models. This reduces maintenance overhead and minimizes the risk of logic divergence. When working in teams, modular code allows developers to work concurrently without stepping on each other’s changes.

2. Use Staged Sources

Staging models are foundational in dbt and should be used to clean and normalize raw data before applying any business logic. These models handle basic tasks such as renaming columns, casting data types, trimming whitespace, and applying consistent date formats. By standardizing input data early, downstream models become simpler and more predictable.

Prefix all staging models with stg_ to distinguish them from other transformation layers. For example, raw customer data from a CRM might be modeled as stg_crm_customers. This model would handle inconsistencies like date formatting or inconsistent string casing, while retaining a one-to-one mapping with the source table.

Staging also supports source freshness testing. By clearly defining and isolating source inputs, dbt can automatically monitor whether source tables are updated as expected. This increases transparency and helps teams detect issues early in the pipeline.

3. Choose the Correct Materialization

Selecting the right materialization in dbt depends on data volume, update frequency, and performance needs. The four primary materializations—view, table, incremental, and ephemeral—serve different purposes:

- Use views for lightweight, frequently updated models that don't require persistent storage. They’re simple to maintain and suitable for logic that changes often, but they can be slow for large datasets since queries execute on every access.

- Use tables when the data doesn’t change often or performance is a concern. Tables store results physically, offering fast access at the cost of data freshness.

- Use incremental models when dealing with large datasets that update over time. These models append or update only new data during subsequent runs, significantly reducing load times and warehouse costs. They require careful configuration to handle inserts, updates, or deletions correctly.

- Use ephemeral models for short-lived transformations reused in downstream models but not materialized in the warehouse. They are compiled as CTEs in dependent models, reducing clutter and improving query efficiency.

Each materialization balances performance, cost, and complexity. Understanding the trade-offs helps optimize model design across different data processing scenarios.

4. Use Consistent Naming Conventions

Naming conventions in dbt help signal the purpose and lifecycle stage of each model. For clarity and uniformity, use lowercase with underscores (snake_case) for all model and column names. Prefix models to indicate their role: stg_ for staging, int_ for intermediate transformations, dim_ for dimensions, and fct_ for fact tables.

For example, stg_salesforce_opportunities might flow into int_sales_pipeline, which is used in a fct_revenue model. Naming models and fields consistently enables faster navigation, better documentation, and easier collaboration.

Avoid ambiguous or overly generic names like data_table or model1. Instead, use domain-specific and descriptive terms that clearly reflect the model’s content and function. Document naming conventions in your dbt project README or development guide to help onboard new contributors and ensure alignment across teams.

5. Leverage Macros Wisely

dbt macros are a powerful mechanism for reducing repetition and centralizing complex logic, but they should be used selectively. A macro is most effective when a piece of SQL logic is repeated in multiple places or when you need to generate SQL dynamically. For example, if you calculate customer lifetime value in multiple models, implementing that calculation once in a macro ensures consistency and simplifies updates. This centralization helps avoid errors and reduces the maintenance burden.

However, excessive macro use can make SQL models harder to read and debug. When key transformation logic is hidden behind a macro, developers must navigate to the macro definition to understand what the model is doing. This adds friction during development and troubleshooting, especially for new team members. In some cases, keeping logic inline within a model makes it easier to review and maintain.

A good guideline is to avoid abstracting logic into a macro unless it’s repeated across at least two or three models or is too complex to maintain directly in the model. Macros should improve maintainability, not hide essential business logic. If you find that a macro is used only once or makes a model’s purpose less obvious, it’s better to keep the SQL explicit in the model itself.

Orchestrating dbt Data Pipelines with Dagster

Dagster is an open-source data orchestration platform with first-class support for orchestrating dbt pipelines. As a general-purpose orchestrator, Dagster allows you to go beyond just SQL transformations and seamlessly connects your dbt project with your wider data platform.

It offers teams a unified control plane for not only dbt assets, but also ingestion, transformation, and AI workflows. With a Python-native approach, it unifies SQL, Python, and more into a single testable and observable platform.

Best of all, you don’t have to choose between Dagster and dbt Cloud™ — start by integrating Dagster with existing dbt projects to unlock better scheduling, lineage, and observability. Learn more by heading to the docs on Dagster’s integration with dbt and dbt Cloud.