What are dbt Macros?

dbt (data build tool) macros are reusable, parameterized SQL and Jinja templates to automate repetitive SQL operations within a dbt project. By allowing developers to write logic once and invoke it multiple times with varying arguments, macros address redundancy, consistency, and scalability challenges in manual SQL scripting.

Macros are written in Jinja, a templating language, enabling dynamic code generation for models, tests, and snapshots. Unlike typical functions in programming languages, dbt macros operate at compile time. They generate SQL code before execution, providing flexibility and efficiency.

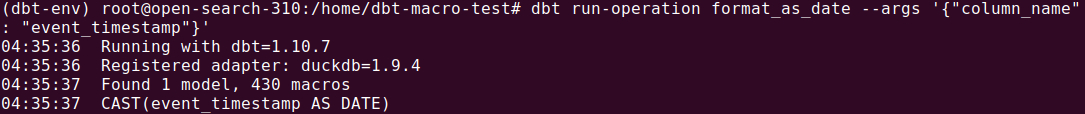

Here’s a basic example of a macro that formats a column as a date:

{% macro format_as_date(column_name) %}

CAST({{ column_name }} AS DATE)

{% endmacro %}

This macro takes a single argument, column_name, and outputs a SQL expression that casts that column to a date type. You can use it in a dbt model like this:

SELECT

id,

{{ format_as_date('event_timestamp') }}

AS event_dateFROM raw_eventsWhen compiled, this would become:

SELECT

id,

CAST(event_timestamp AS DATE)

AS event_dateFROM raw_eventsHow dbt Macros Improve SQL Workflows

dbt macros improve SQL workflows by reducing redundancy, increasing reusability, and improving code maintainability. Instead of repeating complex SQL logic in multiple places, a single macro can be written and called wherever that logic is needed. This reduces the risk of errors and makes it easier to manage changes; if the logic within a macro needs to be updated, it only has to be changed in one place.

dbt macros also allow for parameterization, enabling users to pass in dynamic values, making the code more adaptable to different contexts. This flexibility can simplify the development process, as the same macro can be applied to various models, tests, and snapshots with different parameters.

Macros also improve collaboration among data teams. Since macros abstract away complex SQL logic, they make the code easier to understand for new team members or external collaborators. By using macros, teams can standardize their SQL workflows and enforce best practices.

Understanding dbt Macro Syntax

dbt macros are written in Jinja, a templating language used to generate SQL dynamically. The essential components of dbt macro syntax include the following:

Macro definition

A macro is defined using the macros block in dbt. The syntax follows a standard Jinja function declaration, beginning with the {% macro %} tag and ending with {% endmacro %}.

Example:

{% macro my_macro(arg1, arg2) %}

-- Macro logic here

{% endmacro %}Parameters

Macros can accept parameters to make them flexible. These parameters are specified inside the parentheses following the macro name. You can pass variables to these parameters when calling the macro.

Example:

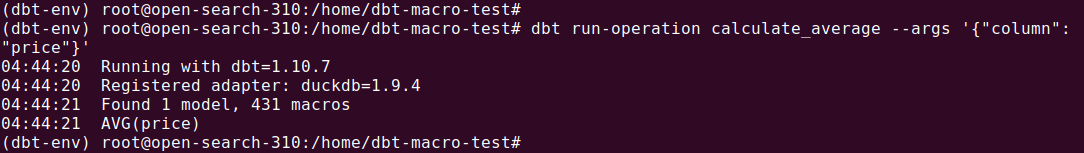

{% macro calculate_average(column) %}

AVG({{ column }})

{% endmacro %}

Jinja templating:

Macros in dbt leverage Jinja templating for dynamic code generation. Expressions like {{ variable }} or {% if condition %} are used to embed logic, variables, and control flow directly within SQL.

Example:

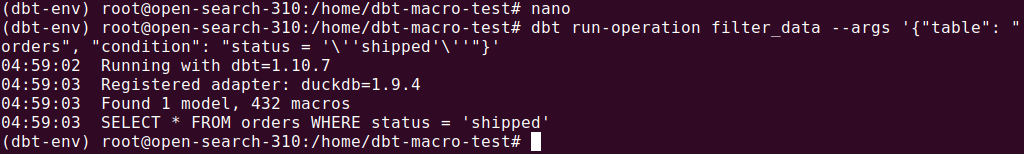

{% macro filter_data(table, condition) %}

SELECT * FROM {{ table }}

WHERE {{ condition }}

{% endmacro %}Calling a macro

To invoke a macro, you use the {{ }} syntax within dbt models or other macros. When called, the macro will generate the SQL code based on its parameters and logic.

Example:

{{ filter_data('orders', "status = 'shipped'") }}

Return values

Macros typically generate a SQL expression or statement, which is returned as a string. This output is directly embedded into the SQL being executed by dbt.

5 Ways to Execute dbt Macros

While macros are often used within models, tests, and snapshots, dbt also supports executing macros in various other contexts to extend automation and orchestration capabilities across a project.

- Inside models, tests, and snapshots: This is the most common usage. Macros are invoked within SQL files using the

{{ macro_name(arguments) }}syntax. This allows the same logic to be reused across multiple models or tests.

- CLI execution using run-operation: Macros that don’t fit naturally into models—such as utility functions for seeding data, cleaning up schemas, or altering database configurations—can be executed directly via the command line using the dbt run-operation command:

dbt run-operation macro_name --args '{"arg1": "value1"}'. This is useful for tasks like dropping stale tables, managing grants, or setting up test fixtures before a run.

- Custom materializations and hooks: Macros can be embedded in custom materializations and pre/post hooks to control behavior during model runs. For example, you can use a macro to apply grants after a model is built or log metadata before execution.

- Jinja logic in YAML and other config files: While not direct execution, macros can be referenced inside YAML files using Jinja to dynamically generate configurations for sources, tests, or exposures.

Common Use Cases and Examples for dbt Macros

Date and Time Manipulation Macros

Handling dates and timestamps in SQL can quickly become cumbersome, especially when supporting multiple data platforms. dbt macros encapsulate common date transformations and calculations, such as truncating to month, generating date ranges, or adding time intervals. These routines are wrapped in macros to promote code reuse and reduce the likelihood of logical errors.

For example, a typical macro might generate platform-specific SQL to extract the year or month from a timestamp, guaranteeing that data models behave consistently regardless of underlying data warehouse syntax. These macros are particularly useful in analytics projects that rely heavily on date-based partitioning, filtering, or rollup aggregations.

Data Quality and Validation Macros

Data quality checks are essential for reliable analytics, but repeatedly writing assertions such as checking for NULLs, unique keys, or threshold violations can lead to bloated and inconsistent code. dbt macros address this by centralizing data quality and validation logic. Teams create parameterized macros that encapsulate business rules and data validation patterns.

These macros are often consumed inside dbt tests, yielding standardized data quality enforcement in every model or staging table. By including validation checks as macros, engineers prevent the propagation of bad data and reduce technical debt associated with manual test script maintenance.

Custom Data Type Conversions and NULL Handling

Adapting SQL for custom data type conversions and ensuring proper NULL handling often introduces platform-specific code and inconsistencies. dbt macros enable teams to encapsulate these conversions, standardizing how data types are cast or how NULL values are managed across different data warehouses.

A single macro can handle differences in syntax and logic, such as converting text to integers, truncating decimals, or enforcing NOT NULL constraints. By centralizing these operations, macros prevent the proliferation of one-off fixes and unpredictable code patterns. As a result, data transformations are easier to audit and maintain.

Tutorial: How to Create and Use dbt Macros in a Model

Creating and using dbt macros can significantly improve the maintainability and scalability of your SQL codebase. In this tutorial, we’ll walk through the process of creating a simple macro and using it in your dbt project. Instructions are adapted from the dbt documentation.

Step 1: Define the Macro

The first step in using dbt macros is to define one. This involves creating a new file within the macros directory of your dbt project. The macro file can contain one or more macros, each defined using the Jinja templating syntax.

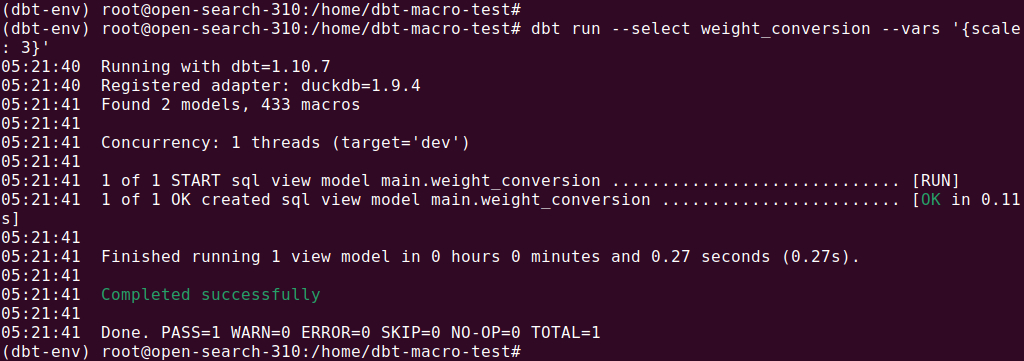

Let’s create a basic macro that converts pounds (lb) to kilograms (kg):

File: macros/lbs_to_kgs.sql

{% macro lbs_to_kgs(column_name, scale=2) %}

({{ column_name }} * 0.45359237)::numeric(16, {{ scale }})

{% endmacro %}In this example:

column_nameis the parameter passed into the macro (e.g., the column storing amounts in pounds (lbs)).scaleis an optional parameter to specify the number of decimal places to retain. It defaults to 2 if not specified.

Step 2: Use the Macro in a Model

Once your macro is defined, you can call it from any dbt model, test, or analysis file. To use the macro, simply reference it with the {{ }} syntax. For example, let’s use the lbs_to_kgs macro in a dbt model to convert a weight from pounds to kilograms:

File: models/weight_conversion.sql

select

id,

weight_lbs,

{{ lbs_to_kgs('weight_lbs', 2) }} as weight_kgs

from dataThis will be compiled into the following SQL:

select

id,

weight_lbs,

(weight_lbs * 0.45359237)::numeric(16, 2)as weight_kgs

from dataStep 3: Compile and Run the Model

To see the results of your macro in action, you need to compile your dbt project. If you are using dbt Cloud, simply click the “Compile” button. For dbt Core, run the following commands:

dbt compile

dbt run

After compilation, dbt will generate the SQL code with the macro expanded and ready to be executed in your data warehouse.

Step 4: Use Jinja for Conditional Logic and Loops

Macros in dbt aren’t limited to simple SQL transformations. You can use Jinja’s full set of control structures to build more complex logic into your macros. For example, you might write a macro to conditionally generate SQL based on certain criteria:

Example macro with conditional logic:

{% macro generate_case_statement(column_name, condition) %}

{% if condition == 'positive' %}

CASE WHEN {{ column_name }} > 0 THEN {{ column_name }} END

{% elif condition == 'negative' %}

CASE WHEN {{ column_name }} < 0 THEN {{ column_name }} END

{% else %}

{{ column_name }}

{% endif %}

{% endmacro %}In this example, the macro generates a CASE statement depending on whether the condition is 'positive' or 'negative'. You can call this macro with a column name and a condition to generate the appropriate SQL.

Usage example:

select

id,

{{ generate_case_statement('amount', 'positive') }} as positive_amount,

{{ generate_case_statement('amount', 'negative') }} as negative_amount

from app_data.paymentsThis will result in the following SQL:

select

id,

CASE WHEN amount > 0 THEN amount END as positive_amount,

CASE WHEN amount < 0 THEN amount END as negative_amount

from app_data.paymentsStep 6: Test and Debug Macros

When working with macros, it's important to test them thoroughly. To sanity check your macros, it’s a good idea to compile them from the CLI, without running them yet, to see if the SQL generated is what you expect.

For more comprehensive testing, you can use dbt’s built-in data and unit testing functionality. If the macro generates invalid SQL or doesn’t behave as expected, check the compiled SQL for clues about the issue.

When debugging, you can add print statements within the macro using Jinja’s {% do %} tag. This will print debugging information to the dbt logs, helping you identify any issues in your logic. For example:

{% macro debug_example(column_name) %}

{% do log("Debugging column: " ~ column_name, info=True) %}

{{ column_name }}

{% endmacro %}Become a Jinja Ninja: Pro Tips for Using dbt Macros

Here are some useful practices to consider when working macros in dbt.

1. Clearly Document Your Macros

Documentation is a key element of macro development. Each macro should be thoroughly explained to ensure that team members can easily understand its purpose and how to use it. Start by including a brief description of the macro’s functionality. Then, describe the parameters, specifying their expected data types, default values, and the role they play within the macro. For example, if a macro accepts a date parameter, clarify its expected format (e.g., 'YYYY-MM-DD').

Also, document the expected output, ensuring users understand what the macro returns—whether it's a SQL expression, a number, a string, or another data type. Include examples of how the macro should be called, providing context for real-world use cases. For example, demonstrate how the macro can be used in a dbt model, or under different conditions, if applicable.

2. Limit Macro Complexity

Complex macros can quickly become a liability. The more intricate a macro becomes, the harder it is to debug, test, and maintain. A single, complex macro can obscure logic that could be more clearly separated into simpler, smaller components. It's essential to keep macros focused on a single responsibility—this is often referred to as the Single Responsibility Principle, a concept borrowed from software engineering.

3. Test Macros in Isolation

Testing macros in isolation is vital to ensuring their correctness and avoid introducing bugs into your dbt project. While dbt's testing framework allows for testing in the context of your data models, it’s a best practice to validate macros independently before they are used within models.

To test a macro in isolation, you can create a separate model only for testing purposes or use dbt compile to inspect the SQL generated by the macro. This allows you to review the raw SQL output and verify that it matches your expectations. For example, if you’ve written a macro that generates a CASE statement, you should test it with different inputs to ensure it produces the correct SQL output in all scenarios.

4. Leverage Community Packages

The dbt community provides a wealth of shared resources, including macros, that can help you avoid reinventing the wheel. By using well-maintained community packages, you can build on best practices that have already been tried and tested by other developers in the field. These packages often include a range of macros that address common needs such as date handling, data transformations, or building calculated metrics.

When integrating a community macro, be sure to thoroughly review the package documentation to understand its functionality and limitations. These packages may need to be modified to meet business requirements. For example, a date manipulation macro in a community package might assume a specific date format or require adjustments to align with the data pipeline's standards.

5. Review and Refactor Macros Periodically

Refactoring is essential to maintaining the long-term health and performance of your codebase. Over time, certain macros may become obsolete, or more efficient methods may be introduced. Refactoring macros involves revisiting older logic and optimizing it based on new insights, platform updates, or changes in project requirements.

A good practice is to schedule regular code reviews or audits of your macros. During these sessions, focus on identifying macros that are overly complex, inefficient, or poorly documented. Ensure that all macros are still relevant to your current data needs, and look for opportunities to consolidate similar macros or remove redundant ones.

Orchestrating dbt Data Pipelines with Dagster

Dagster is an open-source data orchestration platform with first-class support for orchestrating dbt pipelines. As a general-purpose orchestrator, Dagster allows you to go beyond just SQL transformations and seamlessly connect your dbt project with your wider data platform.

It offers teams a unified control plane for not only dbt assets, but also ingestion, transformation, and AI workflows. With a Python-native approach, it unifies SQL, Python, and more into a single testable and observable platform.

Best of all, you don’t have to choose between Dagster and dbt Cloud™ — start by integrating Dagster with existing dbt projects to unlock better scheduling, lineage, and observability. Learn more by heading to the docs on Dagster’s integration with dbt and dbt Cloud.